The video above, The Imawik commercial, is a collaboration between In The Can Productions and Paige Saez for Makerlab.

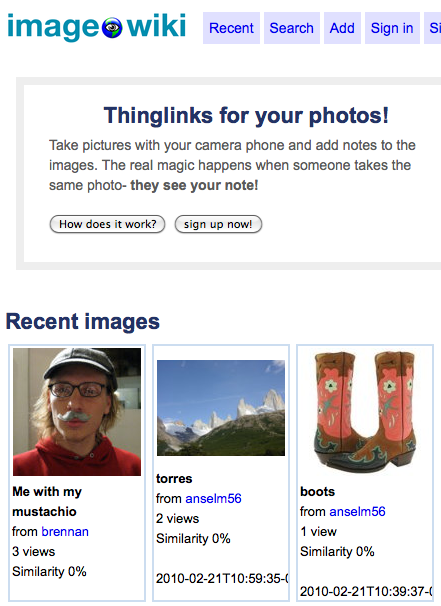

“The Imawik (ImageWiki) is a visual search tool for mobile devices. It allows for the ability to turn images into physical hyperlinks, conflating visual culture with a community-editable universal namespace for images.”

Paige Saez is an artist, designer and researcher. In 2007 she and Anselm Hook founded Makerlab, an arts and technology incubator focused on civic and environmental projects.

Paige and Anselm (see my interview with Anselm Hook here, Visual Search, Augmented Reality and a Social Commons for the Physical World Platform: Interview with Anselm Hook) have been asking a very important question:

“Who Will Own Our Augmented Future?”

But most importantly, they have been actually developing applications (again, see my interview with Anselm for more background on this) that allow people to play with, hack, explore and create with the physical world platform, and to imagine new possibilities for physical hyperlinking and augmented realities. This is pretty important stuff, and kudos to Paige and Anselm for beginning this work before the big players (Google Goggles, Point and Find, and SnapTell) came hurtling into the field of visual search and physical hyperlinking. See this demonstration of translation and optical character recognition in Google Goggles. Also check out Jamey Graham’s (Ricoh Research) Ignite presentation at Tools of Change 2010, Visual Search: Connecting Newspapers, Magazines and Books to Digital Information without Barcodes; for more, see ricohinnovations.com/betalabs/visualsearch.

We are only just beginning to get a glimpse of how contested the social commons of the physical world platform is going to be – see the Yelp controversy.

As Paige points out:

“The lens that you are actually looking through was as important as what you were looking at. And democratizing that lens became the most important thing that we could possibly do.”

I am in total agreement. One reason I have so much enthusiasm for ARWave (note: if you are interested in following the developer conversations, there are several public Waves) is that I see this open framework playing an important role in the democratization of our augmented views, by creating an open, distributed, and universally accessible platform for augmented reality that will allow the creation of augmented reality content and games to be as simple as making an HTML page or contributing to a wiki.

Federation, real time collaboration, linked data – ARBlips that contain metadata that is usable for semantic searches, and modified wave servers that can listen to and respond to SPARQL HTTP requests properly (see Jason Kolb’s many interesting posts on XMPP and Wave). These are just some of the reasons why ARWave could revolutionize augmented reality searches and more! (see my presentation at MoMo13 – video here)

For more on real time social augmented experiences see our panel, The Next Wave of AR: Exploring Social Augmented Experiences at Where2.0 2010, and don’t miss the Where2.0 conference which has been the crucible for the emergence of location technologies.

Augmented realities, proximity-based social networks, mapping & location aware technologies, sensors everywhere, linked data, and human psychology are on a collision course in what Jesse Schell calls the “Gamepocalypse.” See Jesse Schell’s DICE 2010 talk here, and check out his Gamepocalypse Now blog. As Bruce Sterling notes in his post here:

*Another precious half hour out of your life. However: if you’re into interaction design, ubiquity, social networking, and trendspotting, in the gaming biz or out of it, you’re gonna wanna do yourself a favor and listen to this.*

And don’t forget to register now for the Augmented Reality Event (ARE2010, 2-3 June 2010, Santa Clara, CA).

Bruce Sterling, Will Wright, and Jesse Schell will be keynoting, and there is a totally awesome line up of AR innovators and industry leaders, including Paige and Anselm!

And:

You are in luck!

Here is a discount code for the first 100 folks to register for the event (before the end of March). Go to the registration page, type in code AR245 and “you’ll be asked to pay only $245 for 2 full days of AR goodness.”

“Watching AR prophet Bruce Sterling, and gaming legend Will Wright, visionary game designer Jesse Schell deliver keynotes for this price – is a magnificent steal. And on top, participating in more than 30 talks by AR industry leaders will turn these $245 into your best investment of the year,” as Ori put it so well on Games Alfresco!

If you want a preview of just how exciting it is to be involved in augmented reality right now, check out Ori Inbar’s great round up on our latest monthly Augmented Reality Meetup NY (or, as Ori notes, we fondly like to call it, ARNY). There is lots of video up now (much thanks to Chris Grayson, who live streamed it). Augmented Reality Magician Marco Tempest is an absolute must-see (developers, note this is an awesome use of openFrameworks and OpenCV). The video of the show includes a rare explanation of how it all works – see here.

Talking with Paige Saez – “Software is candy now!”

Tish Shute: What interests me about ImageWiki is that you have thought about physical hyperlinking beyond the obvious of where to get your next good hamburger and beer, right?

Paige Saez: Right. It was interesting for me in just thinking about the two things. How do you design a tool to work in a way that people are getting value from it? And also, how do you make it work in a way where people can explore and hack it? I think the most interesting technologies, and this is probably something somebody else said sometime, are the ones that disappear, that we don’t see, instead we see through. They become just the intermediaries. They don’t interfere with what we are trying to do.

It’s a struggle whenever you are developing a new way for people to get information or make something happen, because you are playing with magic a little bit. And you have to make it vanish the way a good magic trick makes an experience a magical one. But at the same time you also need to reveal just enough that you let people in and they can see how to change it and make it their own. That is the interesting tension for this space right now: the idea of augmented reality begins to lead to the idea of a social commons for physical things. The ImageWiki project was a locus of just this tension. Tish, you and I have previously discussed how difficult it was to even get people to understand the two concepts independently.

Tish Shute: Right, until recently most people hadn’t even heard the term augmented reality and I am not sure that a particularly high percentage of people would recognize it now despite the recent interest in smart phone apps.

Paige Saez: It’s very difficult to get people to understand the two concepts, and now you are adding in the third level of participation as well. So I don’t think it is impossible, but I do think it requires narrative. It is interesting that you were talking about the stories you heard this morning from the creatives at the event [Tish mentioned that David Curcurito, Creative Director at Esquire, gave an excellent presentation at a Sobel Media event in NYC] because it’s narrative and the attention to telling a story that help you walk through all of the ways you can understand how completely expansive this area is right now.

So I think we have to play with it, play with the space and the tools. I think we need to have an idea of what we want people to use the tool for, and we need to not only introduce them to the tool and the technology, but also introduce them to the concepts as well. So I see it as a three part process.

I’m really excited to be there with people, helping them do that. I think we need to do this face to face. I don’t think this can be only through a social network. The ImageWiki website is like one quarter of the entire picture, you know? The website is the resource center and the place where you can see people adding images, but what value is it to you to see an added image? It is more valuable for you to be interacting with the image or interacting with the object in the real world.

Designing for the experience of using the ImageWiki got very complicated very fast. I was trying to figure out the main thrust of the design for the UI for the ImageWiki and at a certain point I had to take a step back and say “Okay, this has to be good enough for now because we can lay it out and prototype as long as we want on the Web or mobile UI. What we need to be doing is going outside and actually aggregating and putting images into the database in order to see what exactly happens when we are adding.” It’s not just like you are taking a picture of something and adding it to Flickr. Using the tool is very context specific and the information is context specific, and you can’t necessarily make that all happen at the exact same time. I think these are really fascinating spaces to be struggling in and I’m so glad to be working in this space.

Images by Chris Blow of unthinkingly.com

Tish Shute: Could you explain why we need ImageWiki? I mean I think I have ideas on this, but perhaps you can explain to me from your point of view why we need an ImageWiki, as opposed to, say, extending the image space of Wikimedia or something added on to Flickr. I mean maybe something leveraging the geotagged photo sets and APIs we already have?

Paige Saez: Yes, definitely. It’s a really good question, I mean it really is. Like, do you need an entirely new place to be holding images outside of the places that we are already holding images? That’s a huge question; enormous. Especially when you take a look at the problems around that. It’s exhausting for an end user. Who the heck wants to go and reload everything into yet another place, right?

Tish Shute: Right.

Paige Saez: Moreover, who is going to really bother? Another problem would be what happens to the existing datasets that people have already committed to? And then of course there is the problem of authority and explanations why….Gaining interest and authority in a space when nobody even understands why that space should exist in the first place. And those are just three, you know, off the top of my head problems with that idea.

And yet at the same time, I don’t actually know how else to go about thinking about the ImageWiki unless I think about it as its own thing. Then you start thinking about models of large independent image databases that exist already, examples of this from a product standpoint, references to consider. The Getty Foundation comes to mind. There are many other historical centers that have huge resources and images that are licensed out and used. So here we have a working example of people already doing this. But successfully? I don’t know. We do have a ton of intellectual property rights and copyright issues and ownership and use issues with images currently. As a working artist these issues for me were a major red flag to consider. Working on the social commons for augmented reality starts paralleling issues found in digital rights management and intellectual property.

Tish Shute: But one good thing about Wikimedia, why I focused on Wikimedia, is that Flickr and Wikimedia already use Creative Commons licensing, right?

Paige Saez: Creative commons, you know they have their own resource center, too. But you know they haven’t been successful as great databases for images so far.

Tish Shute: What would you like to see that they don’t have? Like say maybe start with Wikimedia, right?

Paige Saez: There’s just still a lot of issues with how to encourage people to want to contribute. It’s hard to show the value to someone who doesn’t already understand the value for some reason. At least for me personally this is something I have run into frequently. I don’t know if it is necessarily what Wikimedia doesn’t have, I think it is a lack of understanding of what creative commons really means. And there is still a very strong sense of ownership and concern about creative property rights. Being paid to be creative is a tremendously difficult thing to do. People fear losing their livelihoods. They think this is possible. Is it? I dunno.

For example: look at me, I take a photograph of something, I can sell that. And there’s a question about whether or not, as an artist, I want to have my photographs in a pool of images that is open and accessible when I could be making money on it instead. Now that is just an example. Me personally, I can see the value. But that is a common concern. The gist of the question being, ‘what value does it bring to give something away versus holding on to it?’ A hugely popular discussion right now.

This is the same crux of the problem we are dealing with when we talk about thinking about images in the social commons for the real world. It’s a conversation about ownership. It’s about, who does this belong to really? If I take a photograph of a Levi’s billboard, does that photograph belong to me or does it belong to Levi’s? We know the boundaries of that. But when the image becomes a living image, an image capable of transmutation; an image that provokes an action or hyperlinks to a product, experience, information….where are the boundaries in that?

Tish Shute: But how is ImageWiki handling that differently from Wikimedia, I suppose is my question.

Paige Saez: We haven’t solved the problem.

Tish Shute: Yes, I suppose it is not like we have fully solved the problem of a creative commons for images on the internet, let alone the issues of a social commons for the real world! So neither one has solved the problem, right?

Paige Saez: Exactly. To be honest, it made my head spin. I realized we were building a web application and a mobile tool doing augmented reality, real time feedback on the world and suddenly we weren’t. Suddenly we were dealing with DNS and talking about physical hyperlinks and ownership and property. And basically at that point you just have to sit and really start looking at catching up on IP issues and figuring out how to deal with that space in a much more holistic way. It became so important that we had to take a step back and go

“Oh my god I think we have really uncovered a real problem here.”

At the point when we were building out the tools we realized something was really going on with our project. Here we were thinking that this was just a beautiful experience of learning about the world around us. We really…Anselm and I both just really wanted this tool to exist. It was something that we both just really wanted to happen in the world, something that we felt really just thrilled to make. And we looked at and used it and realized that instead of it just being a beautiful experience, it was a fundamental shift in how we understood everything. That it impacted our world in the same way the Internet impacted our world. It was a fundamental shift in understanding. A sea-change.

So I put down the prototype and went back to researching, read a ton of books on IP and went and presented to friends, family, schoolmates and co-workers trying to explain the project and then the larger conceptual framework that had emerged from the project. I began using the metaphor of thinking about Magritte’s “Ceci n’est pas une pipe.” Thinking about a pipe that isn’t actually a pipe.

Tish Shute: Oh, yes!

Paige Saez: ..to try to help explain to people that the image that you see is actually not, you know, it’s not an image of a thing. It’s an image. And that image has a tone and that image has a voice, and that image was chosen. And there were decisions that were made through the interface of the camera, specific decisions that defined the view of what you were looking at. And that that wasn’t being acknowledged and that that was a fundamental part of what the ImageWiki was aiming to do. The lens that you are actually looking through was as important as what you were looking at. And democratizing that lens became the most important thing that we could possibly do.

Tish Shute: So the emphasis for you on ImageWiki was in fact the lens, even though you found obstacles to creating the interface, right?

Paige Saez: Yes. Definitely. That’s what I fell in love with first. I really wanted to be able to use my phone to learn about what kind of tree this was or to buy tickets for the band on the poster I just saw, or see a hidden secret. For me it was very much a story, a narrative experience that I just thought was magical. And that is how I fell in love with it, which is not where I ended up. Where I ended up was realizing it was a fundamental shift in not only my own understanding of how to use the world around me, but in our understanding of looking at the world.

Tish Shute: It would be pretty scary if an image DNS was basically in the hands of either one or very few people, right? I mean even ImageWiki would be stuck with this problem, that if you set up a bunch of servers, you are going to be holding a very, very large image database. I mean, whatever your motivation, right? I think at the minute that is why I am very into seeing everything through the lens of federation. I see that unless we have federation, these giant central databases are inevitable, aren’t they?

Paige Saez: Essentially, yes. I mean I wasn’t able to walk through it as quickly as that. It kind of just overwhelmed me. Looking back on it, it seems perfectly obvious. I was just like “Oh my god, what have we done? Like what is going on?” Particularly for me because so much of my life has been spent in art, it was really easy to immediately understand the connection between the view, the viewer, and what’s being viewed as all just different layers of ownership and understanding that it is a gaze. Right? We know that we are never able to look at something without passing judgment on it, but to see that become a part of the interface in a real-time fashion just blew my mind.

Tish Shute: Yes.

Paige Saez: I think you are right. Getty Images, Flickr images, no matter what you are always holding on to something and you have to be responsible for it. Right? So how do you deal with the responsibility but don’t take on too much ownership? Where is the boundary with that?

Tish Shute: And for me, the simple answer to that is loosely connected small parts, distributed systems and federation. Because the only way to be able to utilize these things is to have them distributed so that no one holds all the cards. Right?

Paige Saez: Definitely and I personally agree with you wholeheartedly. However, the idea of distributed power is a concept that most people just don’t know how to deal with.

Tish Shute: And it’s easier said than done, because actually the root problems that you are talking about aren’t got rid of through federation. If someone really holds, sort of, all the good image databases, then just because they have the potential to be federated, they may not choose to open them up on many levels.

Paige Saez: And even then you have to think about, sort of, like the next level of it, which is we want it to be all open and accessible, but everything is owned by somebody. Like, what really is public anymore, in general?

Tish Shute: And what is interesting though, regardless of what we speculate conceptually on this, we already set off down the road. I mean we have already several large…they are all in beta I suppose, Google Goggles, Point and Find, right? But we have applications that are beginning to implement this. They are beginning to implement search on it, and it is geo-located even if it’s not in an augmented view, right? So it is proximity based.

Paige Saez: Right, right. I mean maybe the solution is that if we follow that line of thinking then Flickr will be partnering with Google Goggles. And then my images would stay under my ownership through the authority of Flickr. And I would use Flickr as my place to add images and they would just be responsive via my devices via AR.

Tish Shute: That’s very interesting.

Paige Saez: Definitely I think so. It is also the shortest distance between things.

Tish Shute: Yes, and as Anselm kept pointing out, basically it is going to happen in the simplest way possible, really, regardless of the implications of that. But OK, getting back to ImageWiki. As you say neither Wikimedia nor Flickr were really designed to take this role, right?

Paige Saez: Right.

Tish Shute: With ImageWiki, you’ve had these ideas and a concern with the social implications of physical hyperlinking in your mind since its inception. Are there any design ideas you’ve come up with that you know, as opposed to sort of, as you say, connecting Flickr to Point and Find, or who knows, Google Goggles. How is ImageWiki going to be different, do you think? Is that a hard question at this point?

Paige Saez: It is, and it’s a great question, and it’s a question I really love to think about. I think we have to introduce the politics with the tools. It has to be acknowledged that it’s not just a place to hold information, that’s what I feel in my heart.

At the same time, is that too much for people to really grasp at one time? In my experience it really has been, so the design of the experience needs to allow for an understanding of the power of the tool and the level of authority that the tool offers, while not getting in the way of it; just using it. Because ultimately, at the end of the day, nobody will use anything if it isn’t valuable to them. And so I could talk for miles and miles and miles about how important it is that corporations don’t own all of the rights to all of the visual things in my life, right? For the rest of my life I could talk about that. The idea that advertising is dominating all of our views of anything in the world around us is horrifying. It doesn’t matter unless I can show somebody why it matters to them or how it affects them. It’s just that that is a tremendously difficult thing to explain through a user interface.

And I actually think that it’s great that tools like Google Goggles and Nokia Point and Find are here to do a lot of the hard work of showing people how it works. Recently somebody explained to me their experience of using Google Goggles. They went through this process of saying how the Google Goggles took a picture and then did this really complicated visual scanning thing over the image and it took a full minute.

And I said, “Well of course they did it that way.” And they said, “Well what do you mean?” I said, “Well, what they are really doing there when they are doing all these fancy graphics, is they are showing you how it works.” And even if it isn’t actually related at all to how it functionally works, algorithmically, that’s not the point. The point is that this gesture of the time taken to make it look like it’s scanning an image and going back and forth with pretty colors is giving people the time to process that as an experience. That’s a metaphor for what’s really happening. And these kinds of metaphors are crucial with user experience design. We have lots and lots of examples of them and how they work, and many of them aren’t necessary. Like you know, for example, the bar that shows you the time it’s taking for something to process. There is no relationship between that and reality. But it is really important.

Tish Shute: Yes, those bars often have no relationship to the actual time...

Paige Saez: And that’s the thing. Like the idea of time versus our perceived understanding of time. Right? The length of time it takes for your Firefox browser to open and load your last 30 tabs, versus the reality of what’s actually happening. When you are doing that sort of research you are actually accessing millions and millions of places and points of interest all over the world, so we need more of that. We need more of the process shown. Anselm and I worked with a film maker named Karl Lind from In the Can Productions here in Portland to try and make a video about the ImageWiki. We made this little video and I can try to show it to you or send it to you if you want.

Tish Shute: One of the issues with this kind of visual search is that it is inherently dependent on large databases that, regardless of whether they are federated, are going to be very large. Right? I mean someone is going to have something big, and aggregated there. I suppose someone will figure out the challenges of federated search eventually but that is quite a big challenge!

So I suppose I am still trying to understand what ImageWiki can offer that we can’t get with any other existing service? How will there be a social commons and even a social contract for the world as a platform for computing and physical hyperlinks?

Eben Moglen brought up something when I talked to him about virtual worlds, he said we need code angels to let us know what was going on in the virtual space – who was gathering data and how, for example.

Paige Saez: Tell me more about that, I want to hear more about that.

Tish Shute: Eben suggested this metaphor when I was asking him about privacy in virtual worlds. The fact was that people just didn’t know, when they were pushing avatars around virtual worlds, what metrics were being gathered on their behavior. And he basically said that what we need is code angels when we enter these spaces, because having the rules of the game buried in a TOS was ridiculous.

Paige Saez: That is a really interesting idea.

Tish Shute: Maybe ImageWiki needs to be our code angel to navigate the augmented world. I mean that’s what I want to see it as. And when I hear you talk, what I hear is you talking in broad categories about what a code angel might be in the space of images and image links to the physical world. I mean that is what I hear from you.

Paige Saez: Yeah. No, I definitely agree with that. It is interesting. In that sense, it is kind of a protection layer. Is that what you are thinking?

Tish Shute: Yes, I suppose because we can’t be navigating a lot of complicated opt-ins and opt-outs just to get around our neighborhood safely (in terms of privacy; also see Eben Moglen’s definition of privacy here…). We will need a code angel that is sort of keeping up with you in real time!

Paige Saez: Right, right. I wonder how that would work in regards to images, though. That is a really interesting thing to try and put on an image. I guess why I am having such a hard time being specific about it, is I am just trying to work it in my head, thinking of a specific use case, like what would be an example of that?

Tish Shute: Well I suppose the example, and this is a crude one, is when you point your Google Goggles to the book jacket, the code angel, this is very crude, would say “You are right now drawing images from the Amazon database – they are collecting such and such data from your search.”

And then of course the ability to have crowd sourced tagging and corrections..

There was a wonderful book that came out last year on how we can have commercial intelligence, Dan Goleman’s new book: “Ecological Intelligence: How Knowing the Hidden Impacts of What We Buy Can Change Everything”…

how corporations’ various stakeholders, including their customers, will drive corporations to do the morally right thing, because they will lose the commercial support of customers who won’t support them unless they are greener, fairer, and do the things we would like them to do, whatever that happens to be. Physical hyperlinking and tagging, I guess, would be a big part of this.

Paige Saez: Sort of a transparency issue. And that almost becomes a page rank algorithm in and of itself. I mean now we are really talking about search more than anything, and what tool becomes the dominant search tool. Anselm and I talked a lot about one platform… I mean eventually we will have a unified platform. It will…No matter what, for the Internet and for physical objects and visual objects in the real world. It will just be a matter of, literally, who can find the best and most valuable, most relevant information on a thing. Currently we just have it very proprietary.

Tish Shute: Yes.

Paige Saez: That definitely won’t last. It just can’t, because of the exact problem that you are raising. And we already know too much about resources and information as they pertain to products for us to ever go back to a time where we are not considering other ways of getting information about it anyway. Right?

Like I have the same concerns nowadays when I look at fruit. I look at a piece of fruit in the store. I would never just assume that the person who put the sticker on that fruit, anymore, is the ultimate authority necessarily. I would always assume at this point I could go online and go find out more information about a company. Issues about like eco-footprint or how much toxicity, or pesticides or whatnot are now totally accessible already.

So I am thinking when you look at that piece of fruit and that sticker for Google, say what you are describing, do we just go immediately to the company’s website, or is it even more specific? Do we know that the sticker on that piece of fruit is going to tell us specific information about that? Or are we just getting back the nutritional resources, or are we getting a listing of all of the different options out of a page rank algorithm that shows us, “Well this is the website for the fruit. Here is the nutritional information. Here are the last 15 comments on it.” It’s basically just a basic search.

Have you heard of Good Search?

Tish Shute: You mean http://en.wikipedia.org/wiki/GoodSearch

Paige Saez: Right.

Tish Shute: A code angel interface would have to give you options, wouldn’t it, on the possible views available?

Paige Saez: Yes. You are then talking about filtering your view. Then it really gets really interesting, of course. I don’t even know if we have a choice in that. I think we are really kind of hitting a wall with who owns the space and the platform. Is it just a basic search because we are already familiar with search? If you had an option to choose, say, “I want to look at this apple sticker and I only want to get…programmatically only looking at my friend’s opinions of this company.”

Or I have a safety valve on it that only shows me certain information based on what the code angel knows about me, my preferences, my age, things like that. Then that gets really, really interesting, because we are trying to do all that work right now just with social media and the Internet. We are already overwhelmed with too much information. It is already past the point of comprehension. So to think that we would actually drill down even more specifics is very interesting.

Tish Shute: That was a point Anselm made about the fact that once you are into this mobile, just in time, one view kind of situation, it is quite different than the Internet where you can bring up all these different screens and go to another website.

Paige Saez: Well yes, mobile is a different level of engagement. Very contextual. Much less information. Much more about timeliness. I don’t want to look at an apple and get back a Google search. Oh my God no. That’s the last thing I want. I would love to be able to look at an apple and my phone already knows exactly what I want, information-wise, to get back from that apple. But I don’t know. It’s all contextual and personal. So I think the code angel concept you are talking about is really interesting because you still need to think about who is the person that is adding or creating those level filters: is it you, a filtered friend network, an algorithm? How much work is too much work? Where do we draw the line? How much of this are we willing to let the machine do for us?

Tish Shute: Right.

Paige Saez: And then of course once you have those filters in place, you need control over them. You will need to dial them up and dial them down, be able to choose and add new ones, so on and so forth. It becomes very modal at that point. For example, I want to change my view: To walk into a grocery store and instead of finding out information, I’d want to see where the hidden Easter egg puzzles were that my friends left last week because we’re playing a game.

I’m still really attracted to the creative opportunities with the ImageWiki. I’m really attracted to changing this experience from being a one-to-one relationship (from Corporation to Consumer) to an open-ended relationship (From Person to Person). If I look at a book jacket, sure I can find out where to buy the book, but that’s boring. Who cares? I’d like to find out a link to a story or an adventure or a movie or something unthought-of before.

How do we build that in? How do we encourage serendipity? Mystery? I think the ImageWiki is the space for building that in, actually. Not how, that would be the one place, right? My really big fear is that this relationship just stays one-to-one. Click an image of a consumable object, get back the object’s retail value. How completely dull. We have to do better than this.

Additionally, what if I want to take a photograph of a book, an apple, or something and I don’t want to pull back data. Instead, I want to pull back music, or I want to pull back a video, or I want to pull back a song, or lyrics, or a story, or another image. It’s just a hyperlink at the end of the day, you know? That’s all we’re really doing. Hyperlinks can pull back so many different things.

Tish Shute: And that’s one of the reasons I’m into mobile social interaction utility building, because without that, if we don’t have that way to do that in mobile technology…that’s very available on the Internet, as we’ve seen, with Twitter. These applications are very easy to do on the Internet. They’re not easy to do natively in a mobile application.

Hey, I’m just promoting AR Wave again. I should shut up.

Paige Saez: Oh, no. I think it’s a fascinating concept, I really do. I totally agree. As we’ve talked about it before, it’s amazing that marketing and advertising are helping push forward AR, and it’s great. It’s fantastic.

But it’s also the worst possible thing that could ever happen because it is such a singular way of looking at an overall ubiquitous computing experience. There are other ways.

The best experience I ever had was trying to explain to people about physical hyperlinks. I had to walk them through it. Good interactive isn’t something you present or show, it’s something you do. Nothing beats just walking around and showing people with a device or a tool or something else.

I mean, God forbid it always stays in our computers and our phones. I really hope we don’t have to be stuck living our entire lives with these horrible interfaces. But for the time being, we will. Having an AR app show you a puzzle, or a mystery, or a game, or an adventure is a magnificent experience, totally overwhelming, and people get it right away. There’s no question; they totally understand.

Tish Shute: Yes, I agree.

Paige Saez: You walk them through the experience with a physical hyperlink and then you say, “Here, I could use this device and I could show you where to buy this thing, or I could use this device and we could start playing a game.” Then everybody gets it.

Tish Shute: So then I have a question, because one of the things Anselm said to me when he wanted me to refer back to you is that he feels that the direction for ImageWiki should be perhaps to focus less on the technology and more on just the actual, I suppose, gathering of the images, how they’re going to be annotated, the metadata, right? But my question to him was, the problem is that if you do that without the platform, there’s no experience or motivation for people to do that. Right? Is there?

Paige Saez: Yeah, I agree with you on that one. I’m curious what his…I think the reason why he wants to do that is he wants to be able to show people examples via the resources. Like to be able to show someone a library, essentially, which I think makes sense with some people. I definitely think that some audiences would really relate to that. For me, it doesn’t make sense because I’m just very experiential. I need to do it and I need to show other people how to do it and I need to grow that way. I think that at the end of the day, those are great ways to go about doing it. It’s just it’s a huge thing to do in either direction.

What Anselm’s really thinking on, I believe, is more about exemplifying how we read and understand images culturally. Then you’re really getting into Visual Studies and Critical Theory, which is what I did for my Masters at PNCA. I worked on the ImageWiki while I was in grad school; it was something I was doing for fun. Independently of my studies, the project led to issues on democracy and objects and property, and I ended up right smack in the middle of what I was studying: the nature and cultural analysis of images. Questions like, ‘what exactly do we get out of images?’ and how all these different things are happening in an image, and people get tons of totally different things out of an image depending on many factors.

The questions I began to ask myself got very philosophical. Questions like “Is this apple red? Is this apple red-orange? Is this a small apple? What’s my understanding of small versus your understanding of small?”

Because suppose that you needed a text backup to the search: how would I be able to search for an apple? Because what if my understanding of apple is red and your understanding of apple is green? And so if I’m looking for a green apple, am I looking for the same green apple as you? It’s all semantics, sure. But at the same time, it gets bigger and bigger, and it’s fascinating.

Tish Shute: Google Goggles seem to work best on book jackets, basically.

Paige Saez: But book jackets are actually perfect for this. Book jackets are perfect for this problem, because book jackets are specifically designed art. So at the end of the day, we are still talking about creative works, artistic works, that have been designed as a communication tool. But that is not something that people can own. Creative works that are designed are a communication tool, with varying levels of skill to be sure, but still something anybody can do. What we need to do is we need to be using that language. We don’t need to be trying to reach as far as facial recognition. We need to develop our own logos, our own brand, our own…I mean not brand. Brand is a bad way of saying it. Another way of saying it would be like, just use it. Develop a visual language that we can use that is as effective and as well utilized as book jackets or the movie posters or something.

Tish Shute: What are some of the use cases for ImageWiki you would like to develop first?

Paige Saez: My dream…I have like four or five use cases that I want to see happen. One of them is I walk down the street and there is a new poster for my favorite band. And I can just go up to the poster and I use my device, whatever it looks like, and I download the latest album. It’s transactional. I am able to just plug in my headset and walk down the street and the transaction is done. I saw something I wanted. It was beautiful. I was able to get it and I was able to move on in my life. And that is totally possible.

Another one would be I walk down the street and there is a piece of graffiti. And I am able to use my device to find out who the artist was that made it and to give them props, and to point my other friends to the fact that the piece is there and it will most likely be there only for a short period of time: information retrieval and socialization.

Or, use my device to find an Easter egg, to find a narrative puzzle that ends up going on for weeks, and everybody is involved, and we are all playing this game together. Adventure-based, non-linear experiences. I want playfulness, not just purchases.

Tish Shute: Did you think of piggybacking on the Flickr API for geo-tagged photos as a way to work with those databases or not?

Paige Saez: Yeah, we definitely thought about that.

Tish Shute: And why did you decide not to, for any reason or…?

Paige Saez: Ultimately, we just…we were such a small group, we just had to tackle certain things at a certain time.

Tish Shute: Right. And you were so prescient, you were working slightly before we had the mediating devices, weren’t you? You were just before the mobile devices really got adequate for this.

Paige Saez: Yeah. We started on it…I believe it was January…No. December 2007. Basically, the iPhone had just launched like maybe six months prior or something like that.

Tish Shute: But not 3G and not 3GS, right?

Paige Saez: Not 3GS. It was the first generation iPhone. We built the ImageWiki before the App Store existed.

We knew that the App Store was coming out. And we knew that the App Store was going to be the biggest thing in the whole world. I remember getting into multiple fights with friends about how revolutionary the iPhone and the App Store were going to be and people thinking I was totally crazy; people just thinking I was absolutely nuts for being so excited about it.

It sucks that it is a closed proprietary system, but the App Store has done something for software that nothing has ever done in the whole world. Software is candy now. It’s candy. It is like when you are waiting at the grocery store at the checkout line and you are stuck behind somebody, and you have got all these little tchotchkes, candy bars, magazines, nail-clippers and things. That is the equivalent of software now. It’s become an impulse buy, which is amazing. Nobody would ever have thought…that is actually revolutionary. That’s huge.

Tish Shute: Steven Feiner, who is one of the founding fathers of augmented reality, said to me during a conversation at the ARNY meetup that one reason that augmented reality, despite the hype, is manifesting very differently from how virtual reality burst onto the tech scene is that it is about affordable apps on affordable, readily available hardware.

March 30th, 2010 at 12:45 am

Another great post and interview, just compiled a couple of articles on the Internet of Things HERE. Feiner is right, but wait until nanoscale electronics really start to kick in, then IoT and AR will be somewhere even science fiction hasn’t thought of yet. Thanks for the post.

April 4th, 2010 at 10:28 pm

I referenced this in a post I just completed HERE. One of the most interesting things is the relationship between the Internet of Things and Augmented Reality, they seem to be bound by the same fundamental requirements in both technology and adoption. Thanks for the post.