Blair MacIntyre is one of the original pioneers of augmented reality, and an extraordinary amount of creative work is coming out of his Augmented Environments Laboratory at Georgia Tech – see YouTube videos here. The screenshot below is from ARhrrrr, a very impressive augmented reality shooter game created by the Georgia Tech Augmented Environments Lab and the Savannah College of Art and Design (SCAD-Atlanta), and produced on the NVidia Tegra devkits – watch the demo here.

Blair has spent much of his career working on immersive augmented reality and, more recently, the integration of augmented reality with mirror worlds. Blair explains:

“I am interested in the intersection of mobile devices – whether they are head mounts or handhelds – and parallel mirror worlds… I think that parallel mirror worlds are a direct manifestation of the intersection of the virtual world we now live in (the web) and geotagging. As more and more information is tied to place, and as more of our searching becomes place-based, we will want to do those searches about places we are not at. A 3D mirror world may provide one interface to that data. Want to plan your trip to London? Go there virtually and look around, see what is there (both physically and virtually), teleport between areas you want to learn about, and so on. More interestingly, talk to people who are there now, and retrieve your location-based notes when you are on your trip.”

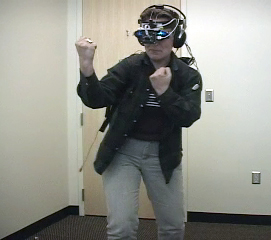

But at a time when many augmented reality developers, including Blair, are focusing on AR apps for smart phones (the picture on the left opening this post is Blair’s augmented reality iPhone app, ARf), I was interested in finding out from Blair what the state of play was for real-deal, Rainbow’s End-style AR, as well as the potential he sees in smart phones to mediate meaningful AR experiences.

There is an enormous amount of innovation in mapping our world; see my post, “Location Becomes Oxygen at Where 2.0 and WhereCamp,” and Ori Inbar’s Where 2.0 conference roundup. But as Ori notes, to move augmented reality forward:

My point is not a shocker: all we need is to tap into this information and bring it, in context, into people’s field of view.

And this is what Blair MacIntyre’s work is all about.

Talking With Blair MacIntyre

Tish Shute: There do seem to be broader implications to augmented reality today than when the term was first coined. I would be interested in your perspective on how augmented reality may go beyond some of our early definitions.

Blair MacIntyre: I still think the original definition of the term is useful: media (typically graphics) tightly registered (aligned) with the physical world, in real time. Many people talk about many things that relate virtual worlds to places, spaces, objects and people. There is room for many of them, and they don’t all have to “be” augmented reality. I like using Milgram’s definition of Mixed Reality as everything from the physical world (at one end) to the virtual world (at the other); it’s a spectrum, and augmented reality just sits at one point.

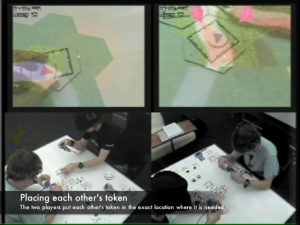

The reason I like the old definition is that I believe there is something special about graphics that are tightly, rigidly aligned with the physical world. When things appear to stick to the world, at an obviously identifiable location, people can start leveraging their natural perceptual, physical and social abilities and interact with the mixed world as they do the physical world. We’ve found this with the two studies we’ve done of tabletop AR games (Art of Defense and Bragfish); one key to those games is that the graphics were tightly aligned with identifiable landmarks in the physical world (the gameboard).

Art of Defense (pic on left), Bragfish (pic on right)

Tish: I know that you are involved with ISMAR 2009 which is the key US augmented reality conference. What do you think will be the hot themes, applications, innovations at this year’s conference? Do you think this will be the year that AR really breaks out of eye candy into truly useful and sustained experiences?

Blair: Unfortunately, I won’t be involved this year. I was supposed to be helping run the technical program, as well as the art/media program, but sickness in my family prevented me from having the time, so I am not helping this year.

First, I would not agree with the implication of the last question: I don’t think AR has just been eye candy up to now. I do agree that the “high profile” uses of it have largely been that, which is mostly because of the limits of the technology. I don’t think we’ll see huge changes in that regard by ISMAR this year. However, we will hopefully see a mixing of communities that hasn’t happened at ISMAR before, and I do believe that this year (independent of ISMAR) we will see more and more AR apps. Whether they go beyond eye candy is still a question. I’m hoping that some folks (including myself and other ISMAR folks!) will help push AR in new directions. But I also expect many folks new to ISMAR and AR to play a big role, because it is this new blood, especially those folks with real problems to solve, new art and game ideas, and a fresh perspective, that will open new doors.

Tish: You have been working on integrating augmented reality with virtual worlds. You mentioned that the way you use Sun’s Wonderland is really about pulling the virtual world into the real world, i.e., Wonderland, “is just a place to put data.” How is your use of the persistent virtual space different from what we have become accustomed to call virtual worlds?

Blair: The approach we are taking in our project at Georgia Tech is to use the virtual world as the central hub of the information space, and allow the virtual world to be the element that enables distributed workers to collaborate more smoothly. This is work we are doing with Sun and Steelcase (and the NSF), and is an outgrowth of a project (the InSpace project) that’s been going on for a few years.

What we are trying to do is use mixed reality and ubicomp techniques to pull as much of the physical activity as possible into the virtual world, and then reflect that activity back out to the different participants as best suits their situation. So, folks in highly instrumented team rooms will collaborate in one way, and their activity will be reflected in the virtual world; remote participants (e.g., those at home, or in a cafe or hotel) may control their virtual presence in different ways, but the presence of all participants will be reflected back out to the other sides in analogous ways. We may see ghosts of participants at the interactive displays, or hear their voices in 3D space around us; everyone will hopefully be able to manipulate content on all displays and tell who is making those changes.

A secondary benefit, I hope, is that by putting the data in the virtual world and making that the place that gives you more powerful and flexible access to the data (e.g., by leveraging space and giving access to history), distributed teams will begin to have the virtual space become a place they go to work, bump into each other and have those casual contacts co-located workers take for granted.

Creating the Information Landscape of the Future

Tish: At the end of my interview with Ori Inbar, he said that in order to have a ubiquitous experience “you’ll need to 3D map the world. Google Earth-like apps are going to help but it is not going to be sufficient. So let’s leverage people. Google became successful in part by making people work with them. Each time you create a link from your blog to my blog their search engines learn from it. So let’s find ways to make people create information that can be used for AR.” What ways do you think people can create information that can be used for AR?

Blair: I think the big part of that is the creation of models and environments, the necessary “baseline” for specifying experiences. Google and Microsoft are clearly working toward this; recent videos from Microsoft show them starting to move the Photosynth work toward Virtual Earth. Similarly, I came across a page where people are finally starting to mine geotagged Flickr [see my post, “Location Becomes Oxygen,” and here for more on “The Shape of Alpha” project from Flickr] images to create models. It’s that kind of thing that will be useful first: using the data we all create to enable modeling and (eventually) vision-based tracking in the real world.

After that, it’s a matter of time until more of what we “create” (e.g., Tweets, blog posts, and so on) is geo-referenced; these will become the information landscape of the future, the kinds of things people think about when they read “Rainbow’s End”. The big problem will be filtering, searching and sorting. And, of course, safety and security.

Tish: You are working with Unity3D to research the integration of mobile location-based AR with persistent, mirror-world-like spaces. What has attracted you to Unity? What is the difference between this and your Wonderland project? I know you mentioned you will be using head-mounted displays as part of this Unity project. What are your goals for this project?

Blair: We started to use Unity3D because it gave us what we wanted in a game engine. Most importantly, it’s very open and lets us trivially expose AR technologies in the editor. Similarly, it can target the iPhone, so we can begin to work with it on that platform, too. The biggest problem with creating compelling experiences is content, and a showstopper for creating content is not being able to get it into your engine. Unity has a nice content workflow.

Unity3D is a front end engine, for creating the game; Wonderland is both a front end and a backend. We are actually looking into using the Wonderland backend with Unity as well. Wonderland also has growing support for doing “real work” in a virtual world, which is key to our other projects.

Eventually, we’ll be using HMDs. The goal for the Unity3D project, initially, was to explore what you can do with an AR/VR mirror world; this is a project we are working on with Alcatel-Lucent, and demoed at CTIA this year. It’s continuing to grow, though, and now includes a number of our projects, including some work on mobile social AR and, soon, some performance and experience design projects in the area of AR ARGs. It’s really quite interesting to imagine what you can do when you have an “MMO of the real world” (which we now have for part of campus) that supports VR-style desktop access simultaneously with mobile AR access.

Tish: Have you taken another look at OpenSim as a possible backend for augmented reality? Recently I talked to David Levine of IBM, and he is thinking about some possibilities for optimizing OpenSim to dynamically load a large number of objects at once (i.e., how fast OpenSim can bulk load into an existing sim) and making it better suited to augmented reality/mirror world type projects.

Blair: I haven’t looked at OpenSim recently. We will probably look at it this summer.

Tish: Why did you select Unity as a good client for augmented reality?

Blair: Unity is a 3D game authoring environment, so at some level it is no different from using Ogre, if all the associated stuff were just as well done. It has integrated physics, scripting, debugging, etc.; you can write code in JavaScript or C# or whatever. It has a good content pipeline as well, and supports a range of platforms.

It has simple networking built in, so multiple Unity engines can talk to each other, but it is not a virtual world platform out of the box – there is no back end…

Tish: Someone described Unity to me as a great client waiting for a great backend. So what are you going to use as a back end?

Blair: There is no real processing except in the client right now. We will eventually have to create a back end. We are thinking of using Darkstar because someone on the Sun Wonderland community forums has already built a set of scripts connecting Unity to Darkstar.

But for us, we are not proposing right now to build a real product. This is research to demonstrate what you could do if you actually had the back end.

Tish: What are the most important aspects of the backend from your POV?

Blair: We want to simulate a variety of the interesting aspects of the back end. So I very much care about notions of privacy and security, and how these sorts of AR/VR mirror worlds would work in practice. But I care about those things as they impact user experience, not really about how we would actually implement them.

Tish: So you are looking at some of the big problems from the perspective of user experience? Are we going to go through the same growing pains that the web and VWs have seen? For example, will we have to type in passwords to get into everyone’s little worlds…

Blair: Well, you know the sci-fi background to this; you’ve mentioned it in other posts on your blog. When you look at the Rainbow’s End model, where you have security certificates flying around, that is in effect what cookies and so on are now. You can authenticate yourself once and then have those certificates hang around. So you can easily imagine how it could be done. But the big question is how that changes user experience. There are all kinds of things that start coming into play, like what happens if nearby people see different things. It goes on and on!

Tish: Sounds like this is very valuable research. It seems to me that there will be a lot of investment soon in putting the pieces together to do location-based markerless AR, and it would be nice if we knew more about it from the user experience POV.

Isn’t it vital, for a productive intersection between mobile AR and persistent mirror world spaces, that we have markerless AR? Aren’t we right at the beginning of people really saying, yeah, markerless AR is doable now? But it seems to me that not many people are researching or working on fully immersive AR and its integration with mirror worlds.

Blair: I think some of the AR community is thinking about this. There are probably people doing things in some other non-technical communities. It wouldn’t surprise me to find out that people in the digital performance or Ars Electronica world are thinking a little bit about these sorts of things, although not necessarily at the level of actually trying to build it, because they probably can’t right now, but experimenting with the precursors. My colleagues in digital media like to point out that this is often the purpose of digital art: to point out new directions and push the boundaries.

Obviously science fiction has explored the possibilities, because that is what Rainbow’s End and The Matrix were all about.

Tish: and Denno Coil…

Blair: There has been some research: people like my adviser Steve Feiner up at Columbia, Mark Billinghurst in New Zealand, myself, and people at Graz University in Austria. But part of the problem is that it has been so hard to do mobile AR up to now. So many people mock head-worn displays and can’t get past current technology; you have had to be willing to ignore the bulky backpacks and cables and batteries and so on. That is changing, which is good.

My current response to the anti-head-mounted-display people is this: if five years ago you had told me that fabulously dressed people who care about their looks and wear stylish clothes would have big things hanging from their ears that blink bright blue light, so they could talk on the phone, many of us would have said you were crazy, because it would be ugly and so on. But there is an intersection of demonstrable need and benefit. Bluetooth headsets are really useful, and there is the sort of early gestalt feeling that grew up around them (that the people who wear these things are so important they always have to be in touch), so people accept them.

It will likely be a similar thing with head-mounted displays. I don’t know if it will be people wearing them so they can read their mail while driving, god forbid. But it will be something. And when we get the second generation of wrap glasses that look more like sunglasses and are not bulky and so on, they will have the potential to catch on, because you will look at someone wearing them and think the person is wearing them because they are doing X…

X might be surfing a virtual world or reading their email or keeping in touch, or being aware. It will happen. But they have to get unbulky enough and there has to be more than one important application, not just watching TV.

The picture above shows an outside view of the KARMA AR system, the knowledge-based maintenance system Blair built in his first year of grad school (“first AR system Steve Feiner, Doree Seligmann, and I worked on”). Blair noted, “The Communications of the ACM paper on it (from 1993) is a pretty widely cited AR paper.”

Tish: I think the need for full-on transparent, immersive, wraparound, Gucci-stylish eyewear with a decent field of view is the elephant in the room in terms of realizing the full potential of augmented reality. There are a few new players in the field: Digilens, Vuzix, others? What is the progress in this area, and what do you hope for in terms of near-term solutions?

Blair: I agree with that sentiment. I think that, in the near term, there is a lot we can do with handhelds, as we’ve been doing in the lab. However, because it’s awkward and tiring to hold up a device, even a small one, for any length of time, handhelds will only be good for “focused” uses of AR, such as the tabletop games we’ve been doing, or the constellation viewing app that I heard came out recently for the Android G1. I don’t even see something like Wikitude as that compelling (beyond the “gee whiz” factor) in a handheld form factor. Many proposed AR apps only really become compelling when users have constant awareness of them, and that requires a see-through head-worn display.

I’ve seen the mockups of the Vuzix ones; they seem pretty interesting, and are getting to where early adopters could use them (they will be cheap enough, and will hopefully be good enough). Microvision’s virtual retinal display is also promising; the contact lens displays will be the most interesting, if anyone can ever make them work. I don’t know of anything else out there.

“it’s not really a killer app you care about, it is the killer existence that all of the technology and small applications taken together facilitate”

Tish: While location based services are accepted now and people are understanding that it is something that opens up a new relationship to everything, we still haven’t found the experience that will get everyone holding up their mobile devices?

Blair: Well, that is actually the killer problem. Gregory Abowd is one of my colleagues who does ubiquitous computing research here at Tech. Way back when we started the Aware Home project (Aware Home Research Institute at Georgia Tech), when I first got here about ten years ago, there was always this question of what the killer app was. Gregory commented in a meeting once that it’s not really a killer app you care about; it is the killer existence that all of the technology and small applications taken together facilitate. It is not that any one of the AR demos we see right now, whether it is seeing your photos in the world or whatever, is important. It’s that, taken together, there is enough of a benefit that you would use the whole environment.

In the original context we were talking about an instrumented home, but it is the same thing here with AR.

The problem with the mobile phone as an AR device is the problem of awareness. If I have a head mount on and I walk down the street and there is a bunch of probably-not-useful-but-potentially-useful information floating by me, that’s a good thing, because I may see something that is useful or that makes me think of something else. But if I have to hold up my phone to see if something might be interesting nearby, I will never hold up my phone, because at any given time there is a high probability that there won’t be anything particularly important there. You might imagine you can get around this by using alerts or something like that, but then you overload whatever alert channel you use. For example, I forward maybe 5 or 6 people’s updates from Facebook to my phone (it started with my wife, a few friends, my brother), and the net result is that I never get SMSs anymore, because when my phone buzzes I usually ignore it; it is probably just somebody’s random Facebook update. So if we start overloading channels like that with “oh, there might be something useful here in the real world; if you pick up the phone and look through it you will see it… and I will buzz you,” people just start ignoring the buzzes.

So the kinds of applications people always imagine with global AR, names over people’s heads and other random information floating in the world, are a very hard problem until you have a head mount and all that information is around you all the time. That is when those sorts of applications will actually happen.

Tish: Robert Rice notes: “AR is inherently about who YOU are, WHERE you are, WHAT you are doing, WHAT is around you, etc.” (see my interview with Robert, “Is it ‘OMG Finally’ for Augmented Reality?”). And I think the iPhone experience has laid the foundation for the increasing desire to experience the network wherever we are, and not be stuck behind a PC. Perhaps we cannot yet do all we want to do. But even within the range of things we can do now, we are not even sure yet exactly what we want to do where, are we?

“imagine your iPhone Facebook client supports AR and that all data on Facebook might be georeferenced – pictures, status updates, etc.”

Blair: Yes, that is a huge problem. I have been lucky to be able to teach two fun classes this year that let the students and me start to explore some of the potential that handheld AR might bring. Last fall I taught a handheld AR game design class (coordinated with a class at the Savannah College of Art and Design’s Atlanta campus), and we had the students build a sequence of prototype handheld AR games, which was a lot of fun. This spring I taught a mixed reality/augmented reality design class with Jay Bolter (a professor in the School of Literature, Communication, and Culture here at GT). Jay and I have been teaching this class off and on for about 9 years; this semester we decided to say to the students, “imagine your iPhone Facebook client supports AR and that all data on Facebook might be georeferenced – pictures, status updates, etc.,” and have them do projects aimed at such an environment.

Tish: Not many of our favorite social media today have much sense of location, do they? But Flickr is using geo-referenced pictures to create vernacular maps (“The Shape of Alpha”).

Blair: Yes, that is because lots of cameras put geolocation data into the EXIF data, so they can extract it…

Some mobile Twitter clients, like the one I use on my iPhone, will let you add your location. But in general Facebook and other sites don’t have any notion of location. Yet if you look at all the things people do on Facebook, such as sending gifts and playing games, it’s easy to imagine what these might look like with geo-referenced data. So the high-level project for the class is that the groups have to design experiences people might have using mobile AR Facebook. We told them to assume Facebook as it stands now, but add geolocation and AR to the client. The class boiled down to “What would you imagine people doing?” So it has been kind of fun.

And we are using Unity for the class too (the same infrastructure I am working on in my research linking mobile AR to persistent, immersive, mirror-world-type spaces), and we are having the students mock up what a mobile AR Facebook experience would be like.
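For readers curious about what “extracting” a photo’s location involves: EXIF stores GPS latitude and longitude as three rational numbers (degrees, minutes, seconds) plus a hemisphere reference tag (N/S or E/W). The sketch below assumes those values have already been read out of the image file by some EXIF library (not shown) and converts them to the signed decimal degrees that mapping services expect. The function name and the sample coordinates are illustrative only, not taken from any real photo.

```python
from fractions import Fraction

def exif_to_decimal(dms, ref):
    """Convert EXIF GPS (degrees, minutes, seconds) rationals to signed decimal degrees.

    dms: three (numerator, denominator) pairs, as EXIF stores RATIONAL values.
    ref: hemisphere reference, 'N'/'S' for latitude or 'E'/'W' for longitude.
    (Hypothetical helper; reading the tags from a file is out of scope here.)
    """
    degrees, minutes, seconds = (Fraction(num, den) for num, den in dms)
    value = float(degrees + minutes / 60 + seconds / 3600)
    # Southern latitudes and western longitudes are negative in decimal degrees
    return -value if ref.upper() in ("S", "W") else value

# Illustrative values: seconds stored as 3200/100 to mimic a fractional EXIF rational
lat = exif_to_decimal([(33, 1), (46, 1), (3200, 100)], "N")
lon = exif_to_decimal([(84, 1), (23, 1), (46, 1)], "W")
print(round(lat, 5), round(lon, 5))
```

Using `Fraction` keeps the rational arithmetic exact until the final conversion to a float.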

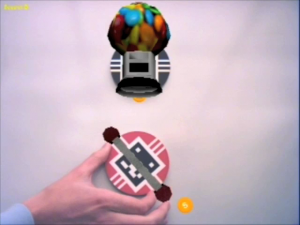

Tish: Can you describe some of the ideas your class came up with that you think have potential? I know Ori mentioned that, from the games class, he liked Candy Wars.

Candy Wars

Blair: In the end, they had a nice range of projects in the Spring class. One created tag clouds out of status messages over spaces, others looked at analogies to virtual pets and gift giving out in the world, one looked at leveraging geolocation to help with crowd-sourced cultural translation, and three groups did straight-up social games.

[See all of the projects from the handheld AR games class on YouTube here]

iPhone, Android, NVidia Tegra devkits, or Texas Instruments’ OMAP3 devkits?

Tish: Is anyone in the class working on Android?

Blair: Nobody is using Android because no one in the class has the phones. We have AT&T microcell infrastructure on campus. Some AT&T people joke that we are better off than them because we have a head office on campus, so we can build in the network applications which people even at AT&T research can’t do. But because we have this infrastructure on campus, and a great relationship with AT&T and the other sponsors, we have the ability to provision our own phones without having to pay for long-term contracts, which is vital for research and teaching.

Tish: So does this lock you into the iPhone?

Blair: Well, the G1 is of course not an AT&T phone, but it is GSM, so we could probably buy them unlocked and put them on our AT&T network. But the students I work with are much more interested in the iPhone right now.

Tish: Is that because the iPhone has the market?

Blair: For me, the reason I am not interested in the G1 is that you can’t do AR on it. There is Wikitude and a few other apps, but it is all hideously slow. Worse, because the Java code isn’t compiled like it would be on the desktop, you can’t do computer vision with it, so you can’t do anything particularly interesting on the current commercial G1s. We could probably take the NVidia Tegra devkits or the Texas Instruments OMAP3 devkits (both are chipsets for next-gen phones, with high-end graphics and fast processing) and install Android on those, and we may actually do that yet. But it seems like a lot of work right now for not much benefit.

Augmented reality shooter game ARhrrrr from Georgia Tech and SCAD Atlanta on the NVidia Tegra devkits – watch the demo on YouTube here.

Tish: Everyone seems very excited about iPhone OS 3.0 and the addition of a compass. A compass is pretty essential for AR, right?

Blair: It is necessary if you can’t do other forms of outdoor tracking, but the problem is that the compass on the G1 isn’t very good, relatively speaking, and the iPhone one probably won’t be much better. It does not have very high accuracy, nor is it very fast (compared to, say, the high-end 3D orientation sensors we use, from Intersense and MotionNode). As far as I can tell, it doesn’t even give full 3D orientation. I don’t have a G1 (although I have pre-ordered an iPhone 3GS), but people have told me it only has absolute 2D orientation, so you can only line things up if you are careful. You can’t look around arbitrarily…

Tish: You can’t sweep your phone?

Blair: You can look left and right, but if it doesn’t have full 3D orientation, you can’t go up and down. You can’t tilt it in weird directions. It is not fast enough to let you look around quickly. So it is a nice demo. And it is good for what the Android people use it for, which is to let you do your Google Street View by looking around, which is actually really useful.

I think there are lots of really useful things you can do with such a compass.

And it is clear that a compass is a necessary feature if we want to do AR. It’s just not sufficient.
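To make the heading-only limitation concrete, here is a minimal sketch of how a Wikitude-style overlay could place a label using only a GPS fix and a compass heading. It computes the great-circle bearing from the user to a point of interest, compares it with the heading, and maps the difference to a horizontal pixel position with a pinhole-camera model. The screen width (320 px), the horizontal field of view (50°) and the function names are assumptions for illustration. Note that nothing here can move the label up or down: without pitch and roll there is no vertical coordinate to compute, which is the “you can’t go up and down” problem described above.

```python
import math

def initial_bearing(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 to point 2, degrees clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    x = math.sin(dlon) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return math.degrees(math.atan2(x, y)) % 360

def label_screen_x(user_lat, user_lon, heading_deg, poi_lat, poi_lon,
                   width_px=320, hfov_deg=50.0):
    """Horizontal pixel position for a POI label, or None if it is outside the view.

    Hypothetical helper: assumes the camera points along the compass heading and
    that only heading (no pitch or roll) is known.
    """
    # Signed angle between the camera direction and the POI, wrapped to [-180, 180)
    rel = (initial_bearing(user_lat, user_lon, poi_lat, poi_lon) - heading_deg + 180) % 360 - 180
    half_fov = hfov_deg / 2
    if abs(rel) > half_fov:
        return None
    # Pinhole model: focal length in pixels derived from the assumed field of view
    focal_px = (width_px / 2) / math.tan(math.radians(half_fov))
    return width_px / 2 + focal_px * math.tan(math.radians(rel))

# A point due north of the user, seen while facing north, lands mid-screen
print(label_screen_x(33.7700, -84.3900, 0.0, 33.7800, -84.3900))  # → 160.0
```

Turning the phone slightly left (a heading of 350°, say) slides the same label to the right of center; a noisy or slow heading makes it jitter or lag, which is why compass quality matters so much here.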

Outdoor Tracking and Markerless AR

Tish: Isn’t it essential for markerless AR? I guess not; I just saw this post about SREngine on Augmented Times!

This wasn’t up when we spoke so perhaps you have some comments about what it brings to the table?

Blair: Maybe. The folks at Nokia are working on outdoor tracking; they demoed some image-based work on N95 handsets at ISMAR last year. We are trying to do some work with them, and one of my students is working on it. Microsoft is probably going to do more on this as well; they had a video up showing that they are also working on vision-based techniques. If you give the phone the equivalent of those panoramic Google Street View images (assuming they are up to date) and you are standing at the right place, you don’t really need a compass: you can figure out which way you are looking from the camera video. Ulrich Neumann (USC) did some work on tracking from panoramas years ago; I don’t know whatever became of it.

Regarding SREngine, that project appears to be a pretty simple first step, and is probably just a demo at this point. Limitations like “only works on static scenes” and “doesn’t work for simple scenes” mean it’s probably extracting some simple features out of the image and then matching those to some database. The trick would be getting this to work on a large scale, where the world changes a lot. It’s not obvious how to get there.

Tish: So forget RFID for AR…

Blair: RFID is not really useful.

Tish: not at all?

Blair: RFID is useful for telling you what things are near you. The problem is that it doesn’t give you any directional information; it just tells you you’re in range of the tag. So you can use it to tell you when you are near a certain product, for example. It is useful for telling you what thing you are near, and then you can load up a vision system or something else that will recognize that thing.

In that way, it could be useful as a good starting point.

Similarly for computer vision, the compass and the GPS are very useful for giving you an initial guess at what you may be looking at, which can then speed up the rest of the process. But computer vision by itself will not be a complete solution, because if I have my panoramic Google Street View (or whatever image database I use for tracking) and you are standing between me and the building, I am not going to see what I expect to see; I am going to see you.

So I think it is all going to be part of one big package: you are going to see accelerometers, digital compasses, and GPS combined with computer vision and other sensors, and then maybe we are going to start getting the things that we have always dreamed about. I like to show this video from the U. of Cambridge (work done by Gerhard Reitmayr and Tom Drummond) of an outdoor tracking demo because it gives a sense of what will be possible. Techniques like this will be an ingredient in the future of things. It becomes especially interesting when you have these highly detailed mirror worlds. It is one of those chicken-and-egg problems: if I have a highly detailed model of the world, then techniques like theirs can be used to track. But that mirror world needs to be accurate or you can’t use it for tracking, and why would you create the mirror world if you couldn’t track?
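The idea that the compass and GPS provide an initial guess for the vision system can be sketched as a simple pre-filter: before running any image matching, keep only the reference landmarks that are within some range of the GPS fix and roughly in the direction the compass says the camera is facing, nearest first. This is a minimal sketch under stated assumptions; the landmark list, the 300 m range and the 60° cone are hypothetical, and the vision matcher that would consume the candidates is not shown. The filter only narrows the search; deciding what is actually visible (for instance, when someone is standing in front of the building) is still left to the vision and other sensing steps.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius

@dataclass
class Landmark:
    name: str
    lat: float
    lon: float

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in metres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial bearing from point 1 to point 2, degrees clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlam = math.radians(lon2 - lon1)
    x = math.sin(dlam) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlam)
    return math.degrees(math.atan2(x, y)) % 360

def candidates_for_vision(landmarks, lat, lon, heading_deg, max_range_m=300.0, cone_deg=60.0):
    """Hypothetical pre-filter: landmarks plausibly in front of the camera, nearest first."""
    scored = []
    for lm in landmarks:
        dist = haversine_m(lat, lon, lm.lat, lm.lon)
        # Angle between the compass heading and the landmark, wrapped to [-180, 180)
        rel = (bearing_deg(lat, lon, lm.lat, lm.lon) - heading_deg + 180) % 360 - 180
        if dist <= max_range_m and abs(rel) <= cone_deg / 2:
            scored.append((dist, lm))
    scored.sort(key=lambda pair: pair[0])
    return [lm for _, lm in scored]

landmarks = [
    Landmark("A", 33.7709, -84.3900),  # ~100 m north
    Landmark("B", 33.7722, -84.3900),  # ~245 m north
    Landmark("C", 33.7691, -84.3900),  # ~100 m south (behind the user)
    Landmark("D", 33.7880, -84.3900),  # ~2 km north (out of range)
]
print([lm.name for lm in candidates_for_vision(landmarks, 33.7700, -84.3900, heading_deg=0.0)])
# → ['A', 'B']
```

A shorter candidate list means fewer reference images or models to match against each camera frame, which is where the speed-up comes from.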

Tish: I noticed in your comment on “my interview with Robert Rice” that you said you thought it was important not to collapse AR into ubicomp; “forgetting what originally inspired us about AR” is, if I remember correctly, the suggestion you made. But aren’t ubiquitous computing and AR basically coextensive?

The vision of ubicomp Mike Kuniavsky describes, “sharing data through open APIs and the promise of embedded information processing and networking distributed through the environment,” demonstrates how much can be done with very little processing power. In its most immersive form, augmented reality requires a lot of processing power. I think we have all become very conscious of trying to minimize levels of consumption. Can you explain why you think people shouldn’t see AR as the Hummer (an energy-squandering indulgence) of ubiquitous computing?

Blair: I think there will be a hierarchy of interfaces. At the top you are going to have the rich, Rainbow’s End-like experience, where you are totally submerged in a mixed environment because you have a head mount on (it’s not going to be Rainbow’s End for a while). But if you don’t have the head mount on, that information might be available to you in other ways, whether it is a 3D overlay on your handheld or just a 2D mashup with Google Maps. Still, there will be some circumstances where people will want the compelling experience you can only get with the head mount.

Tish: Are you doing any research on how all these hierarchies of experiences will fit together? What aspects of this are you looking at?

Blair: The thing that really needs to happen is a backend architecture that allows you to collect your data from different sources and aggregate it, much like the web. Right now Google Earth and Microsoft’s Virtual Earth are much like the old pre-web hypertext systems that were all centralized. What we really need is the web equivalent, where Georgia Tech can publish their building models and IBM can publish their building models and their campus models, and your client can aggregate them. The alternative, where Microsoft or IBM puts their building models into Google Earth and then you somehow get them out with Google’s Google Earth browser, is just not going to fly.
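As a concrete illustration of the client-side aggregation Blair describes, here is a minimal Python sketch, assuming each publisher exposes its models as KML Placemarks. The feed names, placemark names, and coordinates are made-up sample data, and the documents are inline strings rather than fetched over HTTP; the point is only that the client parses each publisher's document independently and merges the results into one layer, with no central repository in between.

```python
import xml.etree.ElementTree as ET

KML_NS = {"kml": "http://www.opengis.net/kml/2.2"}

# Hypothetical feeds from two independent publishers (sample data, inline instead of fetched).
FEEDS = {
    "campus-a.example": """<kml xmlns="http://www.opengis.net/kml/2.2"><Document>
      <Placemark><name>Building A</name><Point><coordinates>-84.3963,33.7756,0</coordinates></Point></Placemark>
    </Document></kml>""",
    "campus-b.example": """<kml xmlns="http://www.opengis.net/kml/2.2"><Document>
      <Placemark><name>Campus HQ</name><Point><coordinates>-73.70,41.10,0</coordinates></Point></Placemark>
      <Placemark><name>Lab 2</name><Point><coordinates>-73.71,41.11,0</coordinates></Point></Placemark>
    </Document></kml>""",
}


def placemarks(source: str, kml_text: str) -> list[dict]:
    """Extract name, lon, lat from every Placemark in one publisher's KML document."""
    root = ET.fromstring(kml_text)
    out = []
    for pm in root.iter(f"{{{KML_NS['kml']}}}Placemark"):
        name = pm.findtext("kml:name", default="", namespaces=KML_NS)
        coords = pm.findtext(".//kml:coordinates", default="", namespaces=KML_NS)
        # KML coordinates are "lon,lat[,alt]"; keep only lon and lat here.
        lon, lat, *_ = (float(v) for v in coords.strip().split(","))
        out.append({"source": source, "name": name, "lon": lon, "lat": lat})
    return out


def aggregate(feeds: dict[str, str]) -> list[dict]:
    """Client-side merge: parse each feed on its own, then combine into one layer."""
    merged = []
    for source, text in feeds.items():
        merged.extend(placemarks(source, text))
    return merged


if __name__ == "__main__":
    for item in aggregate(FEEDS):
        print(item)
```

A real client would also have to fetch the feeds, handle 3D model references (KML's `<Model>` element) rather than just points, and reconcile overlapping data from different publishers; those concerns are left out of this sketch.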

Tish: I’m in total agreement. So what does it take to get us to this backend architecture?

Blair: The nice thing about augmented reality versus virtual reality is that you don’t need everything modeled. You can do interesting AR apps like Wikitude with absolutely no world model.

Tish: So that means we can start with what we have, and use cloud services without a full-blown backend architecture?

Blair: It may very well be that Google Earth and MS Virtual Earth act as a portal: people go and build models and link them with KML, and they can see them in Google Earth, but they can also download the KML files through some other channel. So those things may end up feeding some of this along. Then people start seeing a benefit to having these highly accurate models, so you start integrating the Microsoft Photosynth work and leveraging photographs to generate models.

Keeping up with it and building it in real time is the challenge. A lot of folks think it will start with tourist applications, where there are models of Times Square, Central Park, Notre Dame and the big square around it in Paris and along the river, or models of Italian and Greek historic sites, the virtual Rome. As those things start happening and people start building onto the edges, and as Microsoft Photosynth and similar technologies become more pervasive, you can start building models of the world in a semi-automated way from photographs and from more structured, intentional drive-bys. So I think it will just sort of happen, as long as there is an equivalent of Mosaic, the original open source web browser, for AR: something that allows you to aggregate all these things. It’s not going to be a Wikitude. It’s not going to be a thing that lets you get a certain kind of data from a specific source; rather, it’s the browser that allows you to link through into these data sources.

So it’s that end that interests me: questions like “What is the user experience?” and “How do we create an interface that allows us to layer all these different kinds of information together so that I can use it for everything I do?” I imagine that I open up my future iPhone and look through it. The background of the iPhone, my screen, is just the camera, and it’s always AR.

I want the camera on my phone to always be on. It’s not just that it switches to camera mode when I hold it a certain way; it’s literally always in video mode, so whenever there’s an AR thing, it’s just there in the background.

When we can do that, I can have little alerts, so when I have my phone open I can look around and see them independent of the buttons and things that I’m tapping and pushing to use the phone. That will be a really different kind of experience.

Of course, it is not known yet whether the next-generation iPhone will have an open video API. And the current camera is pretty low quality, so why would Apple give it an open API until they put in a better camera? I am not expecting anything one way or the other until the 3GS comes out and people start using it.

But there are many things about iPhone OS 3.0 that are hugely important yet don’t have much to do with AR, like the discovery API that allows people to play games with other people nearby.

Tish: You have an iPhone AR virtual pet application, ARf.

MacRumors wrote it up and suggested that the next-gen iPhone will have a compass and an open video API. What are your plans for ARf?

Blair: ARf is just a demo right now. I know what we’d like to do with it, but it would require tons of work: imagine what it would take to build a multiplayer, social version of Nintendogs. It’s not clear what we’d really learn by doing that, but there are lots of other game ideas we want to explore.

Tish: I think it was on Twitter that Tim O’Reilly said, “Saying everything must have an RFID tag is like saying we can’t recognize each other unless we wear name tags. Look at what’s happening with speech recognition, image recognition, et al., and tell me you really think we need embedded metadata.” What would you say to that?

Blair: I think that whatever extra data is there will be used. So if we put machine readable labels on some objects then they’ll be used if they make the identification and tracking problem easier. But it’s pretty clear that people are already working on tracking and so on.

A lot of these mobile AR apps are clearly putting ideas in people’s minds about things that won’t really be doable in the near future, like being able to look down the aisle of a store and have your phone recognize all of the products. Given the distances, the complexity of the scene, the number of pixels devoted to each of those objects, and so on, you just can’t recognize things in that context. But things change if I’m standing in front of a small set of objects, or looking at one thing, or standing in front of a building, or if I’m in the store and the location API helps. Imagine an enhanced location API that can tell me within a few feet where I am, combined with some use of the discovery API that allows the store to tell your device you’re in the toothpaste section. Now you only have to look for different brands of toothpaste, so you can recognize the big letters “Crest” or whatever. It’s all about constraining the problem.

That’s why I like that particular piece of Drummond’s work, the outdoor tracking work I mentioned above. The general problem of looking around, recognizing objects, and tracking them is still impossible. But if I know roughly what direction I’m looking in, I have a good estimate of my position, and I have models of what I should be seeing when I look in that direction, then it becomes a tractable problem. So it’s not that a compass and a GPS are 100% necessary, but if you have them, they certainly make things possible that you wouldn’t otherwise be able to do.
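The constraint described here (a rough heading from the compass, a position estimate from GPS, and models of what should be visible) can be sketched as a pre-filter that decides which models a vision tracker should even attempt to match. This is a minimal illustration, not the Cambridge system: the `Landmark` records, the 60° field of view, and the 15° slack for compass error are all assumptions chosen for the example.

```python
import math
from dataclasses import dataclass


@dataclass
class Landmark:
    """Hypothetical model record: a name plus a position in degrees."""
    name: str
    lat: float
    lon: float


def initial_bearing(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle initial bearing from point 1 to point 2, in degrees clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    x = math.sin(dlon) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return (math.degrees(math.atan2(x, y)) + 360.0) % 360.0


def angle_diff(a: float, b: float) -> float:
    """Signed difference a - b wrapped into [-180, 180) degrees."""
    return (a - b + 180.0) % 360.0 - 180.0


def candidates_in_view(lat: float, lon: float, heading: float, landmarks: list[Landmark],
                       fov_deg: float = 60.0, slack_deg: float = 15.0) -> list[Landmark]:
    """Keep only models whose bearing from the GPS fix lies inside the camera's
    field of view around the compass heading, widened by slack for compass error."""
    half = fov_deg / 2.0 + slack_deg
    return [lm for lm in landmarks
            if abs(angle_diff(initial_bearing(lat, lon, lm.lat, lm.lon), heading)) <= half]


if __name__ == "__main__":
    lms = [Landmark("north", 0.01, 0.0), Landmark("east", 0.0, 0.01), Landmark("south", -0.01, 0.0)]
    print([lm.name for lm in candidates_in_view(0.0, 0.0, 90.0, lms)])
```

The vision stage then only has to match the few surviving models instead of searching everything, which is the sense in which the sensors turn an impossible problem into a tractable one. Magnetic disturbance near metal would argue for a larger slack value.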

Imagine, for example, a new version of GPS. I just noticed that some of the new satellites going up have this new L5 channel. There are the L1 and L2 signals that the military and civilian receivers use, and they added this civilian L5 signal, which should make GPS more accurate. I haven’t found anything online that says how much more accurate.

But someday, hopefully, all GPS will reach the quality of survey-grade GPS. Right now, if you get an RTK GPS from one of the companies that make survey-grade GPS systems, it gives you position estimates to within about two centimeters, updated 10 to 20 times a second. When you have that kind of positional accuracy combined with the kind of orientation accuracy you get from the orientation sensors we use in the lab from InterSense and MotionNode, everything is easier because you’ve pretty much got absolute position. Put that into a phone, and when I look up it’s still not perfectly aligned, because there will still be errors (especially in orientation, since the compasses are affected by metal and other magnetic noise). But it does mean that if you and I are standing 5 feet apart and look at each other, I can pretty much put a little smiley face above your head. Whereas now, if we’re 5 feet apart and I look at you, our GPS receivers might think we’re on opposite sides of each other, because they’re only accurate to two to five meters.

And that depends on the time of day and the weather!
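The 5-foot example can be checked with a little worst-case arithmetic. This sketch assumes each receiver's error lies entirely along the line joining the two people and points toward the other person; that is pessimistic, since nearby receivers often share part of their error, but it shows why 2 to 5 meter accuracy can flip the apparent order while 2 centimeter RTK accuracy cannot. The function name is hypothetical.

```python
FEET_TO_M = 0.3048  # the international foot is defined as exactly 0.3048 m


def worst_case_separation(true_sep_m: float, err_a_m: float, err_b_m: float) -> float:
    """Apparent offset from A to B along the line joining them (B ahead of A) when
    each receiver's error points toward the other person. Negative means swapped order."""
    return true_sep_m - (err_a_m + err_b_m)


sep = 5 * FEET_TO_M                               # 5 feet, about 1.52 m
consumer = worst_case_separation(sep, 2.0, 2.0)   # low end of the quoted 2-5 m range
rtk = worst_case_separation(sep, 0.02, 0.02)      # roughly 2 cm survey-grade RTK

if __name__ == "__main__":
    print(f"true separation: {sep:.3f} m")
    print(f"consumer GPS worst case: {consumer:+.3f} m (negative means swapped)")
    print(f"RTK worst case: {rtk:+.3f} m")
```

With these numbers the consumer-grade case comes out at about -2.48 m, so the two people appear on opposite sides of each other, while the RTK case stays at about +1.48 m and the smiley face lands over the right head.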

Putting RFID tags everywhere is easy; the problem is the readers, which currently require lots of power and have a limited range. Sprinkling tags everywhere is fine, but you have to be able to activate those tags and read back the signal. In certain contexts that works.

Tish: And one final question! What do you think can be done to begin thinking about standards for AR? Is there a meaningful discussion going on yet? Thomas Wrobel left this comment on my blog recently, and I was wondering what your position is on some of the ideas he raises.

Wrobel wrote, “The AR has to come to the users; they can’t keep needing to download unique bits of software for every bit of content! We need an AR browsing standard that lets users log in and out of channels (like IRC) and toggle them as layers on their visual view (like Photoshop). Channels need to be public or private, hosted online (making them shared spaces) or offline (private spaces). They need to be able to be both open (chat channel) or closed (city map channel) as needed. Created by anyone anywhere. Really, IRC itself provides a great starting point. Most data doesn’t need to be persistent, after all. I look forward to seeing the world through new eyes. I only hope I will be toggling layers rather than alt+tabbing and only seeing one ‘reality addition’ at a time.”

Blair: I agree with him, in principle. But I’m not sure there’s a point yet. It can’t hurt to try, of course, from a research perspective, and I’m interested in the experience such an infrastructure would enable (as we’ve talked about already).
