The Points of Control map is interactive, so view the full online version for the full experience.

Today at 4pm EDT / 1pm PDT, John Battelle and Tim O’Reilly will discuss the Points of Control map and The Battle for the Internet Economy in a free webcast:

“More than any time in the history of the Web, incumbents in the network economy are consolidating their power and staking new claims to key points of control. It’s clear that the internet industry has moved into a battle to dominate the Internet Economy.

John Battelle and Tim O’Reilly will debate and discuss these shifting points of control as the board becomes increasingly crowded. They’ll map critical inflection points and identify key players who are clashing to control services and infrastructure as they attempt to expand their territories. They’ll also explore the effect these chokepoints could have on people, government, and the future of technology innovation.”

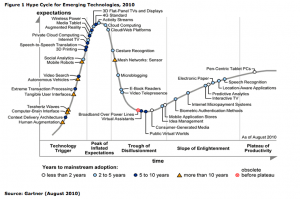

I’ve been wanting to start a discussion on the Points of Control map in the augmented reality community for a while now. Chris’s recent post on the latest edition of the Gartner Hype Cycle, “Is AR Ready for the Trough of Disillusionment?”, this post by Mac Slocum, “How Augmented Reality Apps Can Catch On,” and the conversation in the comments between Mac, Raimo (one of the founders of Layar), and Chris all prompted me to get a conversation started (see below for all that followed!). Chris put me on the hot seat back in June when he did this very generous interview with me on Boing Boing, so it was time to turn the tables.

Tim O’Reilly, in his keynote for Web 2.0 Expo, pointed out there is both a fun and a dark side to the Points of Control map. There are companies on this map, he noted, that rather than “growing the pie,” are trying to divide up the pie, and they are forgetting to think about creating a sustainable ecosystem. I expect the conversation between Tim O’Reilly and John Battelle to dig deep into this Battle for the Internet Economy. If, like me, you have another engagement at the time of the webcast, you can register on the site to receive the recording.

AR is still too young to figure in the battles of the giants, but there will be a lot to be learned from this conversation. And the Points of Control map is good to think with from the POV of AR in many ways. As Chris Arkenberg observed:

“When I look at this map, the points of control map, it’s really interesting to me, because what it says to me with respect to AR is each of these little regions that they have drawn out would be a great research project. So every single one of these should be instructive to AR.

In other words, we should be able to look at social networks, the land of search, or kingdom of ecommerce, and apply some very rigorous critical thinking to say, “How would AR add to this engagement, this experience of gaming, or ecommerce, or content?”

Looking at each of these individually and really meticulously saying, “OK, well yes, it can do this, but how is that different from the current screen media experience, the current web experience that we have of all these types of things?” You know, how can augmented reality really add a new layer of value and experience to these? And I think that process would really trim a lot of the fat from the hopes and dreams of AR and anchor it down into some very pragmatic avenues for development. And then you could start looking at, “Well, OK, what happens when we start combining these?” When we take gaming levels and plug that into the location basin, as you suggested.”

Chris Arkenberg is a technology professional with a focus on product strategy & development, specializing in 3D, augmented reality, ubicomp and the social web. He uses research, scenario planning, and foresight methodologies to help organizations anticipate change and adopt a resilient and forward-looking posture in the face of unprecedented uncertainty. His personal work is collected at urbeingrecorded, and his professional profile is also available online.

He is also one of the founders/organizers of AR DevCamp, which is currently scheduled for Dec. 4th (somewhere in SF or The Valley!). Chris said, “No further details atm (still trying to find a venue and get sponsors) but please direct people to http://ardevcamp.org for upcoming information.”

Talking with Chris Arkenberg

Tish Shute: I know some people thought the positioning of AR by Gartner near the peak of the hype cycle was misguided, and based on a very narrow understanding of AR as used in marketing apps. But reading your post I thought you made a lot of good points.

Chris Arkenberg: It’s tracking hype, right? It’s not necessarily tracking the growth of the technologies or their maturation so much as it’s tracking the general attention level. And what’s interesting to me is that tends to affect the amount of money that goes into those technologies.

Tish Shute: I was particularly interested in your post because I have been writing a post about three recent O’Reilly events in NYC: Maker Faire, Web 2.0 Expo, and then Hadoop World, where Tim gave a very interesting 45-minute keynote. AR was pretty low profile at all three events. But the NVIDIA augmented reality demo attracted a lot of attention at the sponsors’ expo, and Usman Haque, founder of Pachube, announced in his presentation that they are working on an augmented reality interface for Pachube called Porthole. It is designed for facilities management and “as a consumer-oriented application that extends the universe of Pachube data into the context of AR – a ‘porthole’ into Pachube’s data environments.” Usman also mentioned, when I talked to him, that he is contributing to the AR standards discussion and is now on the program committee for the W3C group on augmented reality. For more on this standards discussion and the Pachube AR interface, see Chris Burman’s paper for the W3C, Portholes and Plumbing: how AR erases boundaries between “physical” and “virtual.”

I think pioneers in the augmented reality community should pay attention to these wider conversations about the Battle for the Internet Economy, and the exploration of the “Platforms for Growth” theme at Web 2.0 Expo is very important. This is, of course, also a nudge to read my upcoming post on these O’Reilly events!

Also, I have another project I have been chewing on that I would like to talk to you about. I want to start an AR conversation about the wonderful Points of Control map produced for Web 2.0 Summit by John Battelle. [ Note there will be, "Battle for the Internet Economy" free Web2Summit webcast w/ @johnbattelle & @timoreilly Wed 10/27 at 1pm PT http://bit.ly/b46cmb #w2s]

Up to this point, understandably given the immaturity of the technology, AR has had little role in the “Battle for the Internet Economy.” But this doesn’t mean that the map isn’t good for AR visionaries, enthusiasts, entrepreneurs, and developers to think with. And both you and Tim have pointed out the potential for AR to leverage the giant data subsystems in the sky. I have to say the positioning of Cloud Computing on the brink of heading down into the trough of disillusionment in this recent rendition of the Gartner Hype Cycle seems ridiculous!

Cloud computing is already ubiquitous; it hardly seems credible that it is headed for a trough of disillusionment!

Chris Arkenberg: Yeah, it’s ubiquitous so why even talk about it when it’s your fundamental infrastructure?

Tish Shute: Yeah, and I seriously doubt it is imminently headed for a trough of disillusionment… and this brings me back to the Points of Control map, which, as John Battelle points out, “aims to identify key players who are battling to control the services and infrastructure of a websquared world” in which the “Web and the world intertwine through mobile and sensor platforms.” This instrumented world, of course, creates a great deal of opportunity for augmented reality. Have you seen that Points of Control map?

Chris Arkenberg: I think I have, actually.

Tish Shute: There has been much debate about how this intertwining of the web and the world will play out in augmented reality. Chris Burman points out in his position paper for W3C, Portholes and Plumbing: how AR erases boundaries between “physical” and “virtual”, that “trying to draw parallels between a browser based web and the possibilities of AR may solve issues of information distribution in the short-term,” but it must not have a limiting effect in the long-term. But now we at least have one web-standards-based browser for AR, thanks to the work of Blair MacIntyre and the Georgia Tech team. I think the discussion in the comments of Mac Slocum’s recent post, “How Augmented Reality Apps Can Catch On”, is an interesting starting point from which to think about platforms for growth for AR. I am not sure if I am stretching his meaning, but I think Raimo, of Layar, is suggesting that what the Points of Control map calls the Plains of Media content is very important to the growth of the fledgling AR industry right now. I would agree with this, and add that the neighboring terrain of gaming levels will be pretty key, as one of my other favorite AR start-ups, Ogmento, hopes to reveal in the near future! But what do you think was most important in this brief but pithy dialogue between you, Raimo, and Mac?

[The screenshot above is from a teaser video by Gary Hayes of MUVEdesign for his upcoming game, Time Treasure (2011 release date). See Gary’s blog for more, and Gary’s post from over a year ago on AR business models. Thomas K. Carpenter, on Games Alfresco, notes, “I think this is a terrific idea and I find it interesting he’s planning this on a tablet rather than a smartphone.”]

Chris Arkenberg: The way I took it…And to give a little bit of context, I came from sort of this apprehension of augmented reality as an expression of the existing Internet. So as sort of a visualization layer that allows you to kind of draw out data, and then, with all the affordances of being able to anchor it to real world things.

And my own sort of path has led me to want to really try to understand that and refine it, particularly with respect to the sort of Internet of things and the smarter planet idea of just having embedded systems everywhere. And specifically, what is the value-add for augmented reality as a visualization layer of an instrumented world?

And so that’s caused me to be a bit biased towards that side of AR. And the way I took Raimo’s comment was that he was saying, “You know, really what we’re interested in is media.” That he was effectively saying that AR for them is really just about that space between the screen and the world, or between your eyes and the world, and what you can do there.

Certainly I had considered it in the past, but I hadn’t really focused on it or assumed that it was a priority as a business model. And so he kind of reminded me that, actually, there’s a lot of entertainment applications. There’s a lot of, obviously, advertising and marketing applications.

And so I felt that I was being a little narrow in my focus…

Tish Shute: Yes, this comes to the heart of what I am interested in: the role AR can play in opening up new relationships to the world of data that we live in, not just making it more accessible and useful to us when and where we need it, but AR as a road to reimagining it.

Have you seen any interesting work yet that explores these great data economies in the cloud through AR? I mean, can you think of any others? There is planefinder.net, but others?

Chris Arkenberg: I’ve seen a few just sort of skunk works type applications that people have been playing around with, again, to try and reveal things. One of them was similar to the aircraft one, but it was more for military use and being able to identify things of interest in the sky. I’ve seen a couple of others for navigation, so being able to identify mountain peaks on a visual plane, for example, but this isn’t so much about revealing an instrumented world.

Tish Shute: Yeah, I think that was from the Imagination right? I know that’s an interesting one. Usman at Web 2.0 Expo, in his presentation, mentioned the work Pachube is doing on an Augmented Reality interface. I interviewed Usman again as my last long interview with him was nearly 18 months ago now and Pachube is well on the way to becoming the Facebook of Data or the analogy that Usman prefers – the Twitter of sensors!

Chris Arkenberg: Hmm, interesting.

Tish Shute: And to go back to your comments on augmented reality not getting caught in some of the traps that have made virtual worlds lose relevancy: I think it is vital that AR developers understand the strategic possibilities of key points of control in the internet economy, because the isolation and Balkanization of virtual worlds were certainly a factor in their rapid slide into the trough of disillusionment. Many would argue, though, that the kind of virtual experience that Second Life and other virtual worlds constructed was itself the fatal flaw (see James Turner’s interview with Kevin Slavin, Reality has a gaming layer).

But Second Life’s isolation from the other great network economies of the internet was certainly a limiting factor.

Chris Arkenberg: And that’s been exactly my sense, and I’ve, over the years, tried to encourage development in that direction for virtual worlds. I did work, through Adobe, to help develop Atmosphere 3D back in the early 2000s. And we did a lot of work to try and understand the marketplace and the specific value-add of doing things in 3D over 2D.

And this is kind of why I keep referring back to VR and VWs with respect to augmented reality: with immersive worlds, there was this idea… there was this big rush. Everybody was so excited about it. It was obviously the next cool thing. And everybody wanted to try to do everything in it. You could do your shopping in virtual worlds. You could have meetings in virtual worlds.

Tish Shute: And shopping, yes… that didn’t work out so well!

Chris Arkenberg: And everybody was very excited in developing these things. And what it really came down to is, “Yeah, you can, but it’s actually a lot better to do those things on a flat plane or in person.” Meeting Place, WebEx, TelePresence: those tools generally do a much better job at facilitating telepresence meetings than a virtual world does. The same goes for telepresent education. There are only very specific things that both VR and AR are really good at.

And that’s where I find myself with augmented reality right now, trying to really pick through that and critically look at which uses are really appropriate for an AR overlay. And again, I think that’s why the hype cycle is important, because it reflects back this desire that AR is going to be the next big thing – the be-all, end-all of interacting with data in the cloud – and forces us all to take a critical look at why we should do things in AR instead of on a screen.

AR is not going to work well for most things, but it’s going to be very good for certain uses. Right now I’m very keen on trying to understand what those things might be.

Tish Shute: I had this wonderful conversation (more in an upcoming post) with Kevin Slavin, one of the founders of Area/Code, at Web 2.0 Expo, and I think some of what he describes about the data brokerages of high-frequency trading has some interesting implications for AR’s role, say, in ubiquitous computing. The trading markets are now pretty much dominated by machine-to-machine intelligence, machine-to-machine brokerages. They are basically game economies on a scale that we can barely wrap our heads around, where the speed at which bots and algo traders can access the network is the key. We really have no clue what is going on until we lose our house…

Kevin was also interviewed by James Turner on O’Reilly Radar. He talked about how much of the interesting work in location-based mobile social apps is defined in opposition to the model of Second Life. He also talked to me about how we are seeing “first life” take on the qualities of “second life.” What goes on on the trading floor is largely a performance secondary to a more important world of machine intelligence, with giant co-located servers and bots fighting for trading advantages measured in fractions of seconds.

He pointed out how we draw on all these tropes from sci-fi movies, these HUDs based on ideas of machine intelligence where the robot talks to the other robot in English through an English HUD! Many of our current visual tropes for AR are perhaps just as inadequate for the kind of data driven world we live in.

Of course, when you are thinking of having fun with dinosaurs, or illustrated books, or whatever, this is not, perhaps, an issue. But if you are thinking of augmented reality interfaces as being important in a battle for network economy, and platforms for growth, how this new interface helps us live better in a world of data is an important issue.

Chris Arkenberg: Now, does that indicate that the UI just needs more overhaul and innovation, or more that the visual interface for those experiences shouldn’t really leave the screen? It shouldn’t move on to the view plane?

Tish Shute: Yes, we have a few concept videos that try to explore this…

Chris Arkenberg: Well, and I think this will happen at the level of human-computer interface. I mean that’s always been its role, in making coherent the sort of machine mind, for lack of a better term, making it coherent to the human mind. So I mean there is a lot of this sort of machine intelligence, the semantic Web 3.0 revolution, where it really is about enabling machines, and agents, and bots to understand the content that we’re feeding them.

But at the end of the day, they, for now, need to be providing value to us human operators. So there’s always going to be a role for human-computer interface and user experience design to make this stuff meaningful.

I mean, if you look at the revolution in visualization & data viz, this is of incredible value because it takes a tremendous amount of data and collates it into a glanceable graphic that you can look at and immediately comprehend massive amounts of data because it’s delivered in a handy, visual way.

So I see that as a fascinating design challenge, how the user experience of the data world can be translated into meaningful human interaction.

Tish Shute: Yeah. And when we see Stamen Design pursuing a big idea in AR, that’s when we might start to rock and roll, right?

Chris Arkenberg: Yeah. In my article, I sort of jokingly suggested that Apple will create the iShades. But, they’ve got the track record of being way ahead of the curve and delivering the future in very bold forms.

Tish Shute: A key part of the battle for the network economy is to bring the complexity of data into the human realm in a way that increases human agency. Kevin suggests that the giant robot casinos of the markets should actually lift off into total abstractions, as these machine-driven trades get back into the human realm in ways that are so damaging to our lives: a lost house or job! The notion of a counterveillance society where people have more agency over the important aspects of their lives (health, housing, job), which I discussed with Kevin (interview upcoming), has gotten pretty tricky!

But I think we will begin to see AR eyewear for specific applications (gaming and industrial) get more common fairly soon – possibly as smart phone accessories.

And it is clear that AR is going to be, increasingly, a part of our entertainment smorgasbord in coming months. The iPod Touch has a camera (although a lower-resolution one), Nintendo’s devices are AR-ready, and many aspects of the AR vision of hands-free spatial interfaces will go mainstream through Natal.

But we have yet to see an app or platform emerge for mobile social AR games that turn every bar and cafe, and ultimately the whole city, into a gaming venue, although I think Ogmento and MUVE aim to lead the way here! Will an AR company achieve Zynga-level success by using Foursquare, for example?

My feeling is that the lesson of Zynga is pretty important for mobile social AR games. Could Flash social gaming have taken off without Facebook?

Chris Arkenberg: And that’s the real driver. And again, as you mentioned with Second Life, and this was exactly my own sense, they stuck to the closed garden model and didn’t get the power of social and collaboration. They attempted to add some of those affordances within the world, but, you know, ultimately most people aren’t in virtual worlds, and most people aren’t using augmented reality. So leveraging the really predominant platforms like Twitter and Facebook and Foursquare, being able to bring those affordances, that connectivity, into a platform like augmented reality, I think, is really critical. Because again, you get nothing unless you have the masses, unless you have people present.

Tish Shute: In AR research there is a long history of the notion of powerful AR-dedicated devices, but smart phones and tablets are good enough, and can launch augmented reality into the heart of the internet economy. I think the elusive AR eyewear will come to us initially as a smart phone accessory for specific apps. But, for the moment, most AR apps make little attempt to play in the wider internet economy.

Chris Arkenberg: And I think it’s actually much lower hanging fruit, really, to do gaming, marketing, transmedia. Because then you don’t really care about the cloud, or maybe you only really care about a little part of it that your gaming property is addressing. Then it becomes much more about entertainment, and much more about persuasion, and sensationalism. And if you’ve got dancing dinosaurs on your street, great! It’s entertaining, it’s cool, it’s new. That stuff is fairly straightforward.

I keep coming back to this idea of, you know, the instrumented city. What sort of data trails do you get out of a fully instrumented city? So maybe you get traffic patterns, maybe you get geo-local movements of masses, maybe you get energy usage, that sort of thing, all the sorts of heat maps you can generate from a city. But then what good does it do to be able to have that on an augmented reality layer versus just looking at it on a mobile device or on your laptop?

Tish Shute: Of course the use cases for “magic lens†AR are different from the kind of hands free, 360 view with tightly registered media, that a full vision of AR has always promised. The 360 view is quite a different metaphor from the web and mobile rectangular screens.

Chris Arkenberg: Yes, yes.

Tish Shute: Did you see that great parody of Michael Jackson’s “Beat It†with the iPads versus the iPhones, right?

Chris Arkenberg: Oh, really?

Tish Shute: I tweeted it ’cos I thought it was quite funny and a little close to the bone!

[laughter]

“ur wanna an ipatch 2 b the new fad?” #AR gets cameo in Twitter, iPads & iPhone’s Michael Jackson-Inspired Parody via @mashable

It is hard to get away from the importance of eyewear when discussing AR!

Chris Arkenberg: Yes, so the hardware, to me, is a big stumbling point right now, or it’s a large gating factor, I think, for realizing what an augmented reality vision could really be like. That it really does need to be heads up. This holding the phone up in front of you is fun to demonstrate that it’s possible, and it’s valuable in some ways…

Tish Shute: And it’s particularly nice in some applications like the planes app, the Acrossair subway app where you hold the phone down and get the arrow, right?

Chris Arkenberg: Yeah, the way-finding stuff I think is really valuable…

Tish Shute: Sixth Sense really caught people’s imagination because it managed to deliver the gesture interface with cheap hardware, even if projection has limited uses (no brightly lit spaces or privacy for example!).

The other important and as yet unrealized part of the AR dream is real-time communications. Many interesting use cases would require this. As you know, that is my chief excitement, along with federation, in the Google Wave servers (which should soon be released as Wave in a Box) for ARWave.

Chris Arkenberg: Well my sense of Wave is that it was a ChromeOS protocol that they instantiated, or that they exhibited in the public deployment of Google Wave. That that was a proof of their sort of low level architectural solution. Because, you know, they’ve been rumored to be working on this cloud OS for some time. And so my sense is that Wave is actually one of their core components of that cloud OS, and that it just happened to incarnate for the public in a test run as Google Wave.

Tish Shute: I do hope that Wave in a Box will lower the barriers to entry for people experimenting with this technology. The FedOne server was just way too hard for most people to take the time to set up. Of course, it is the brilliance of the Wave Operational Transform work that also poses problems in terms of ease of use. But the Wave Federation Protocol is pretty innovative, and could even play an important role in real-time communications for AR eyewear connected to smartphones. The challenges that Wave takes on regarding real-time communications, federation, permissions and filters are pretty important ones for AR…

Chris Arkenberg: Especially when you’re trying to federate a lot of permissions and filter a lot of data, all of which gets even more important when you have a visual layer between you and the real world.

Tish Shute: You got it. Yeah!

Chris Arkenberg: I think that’s really valuable real estate, both for third parties that want to get access to your eyes, as well as for you, as the user, who still needs to navigate through the phenomenal world and not be occluded by massive amounts of overhead data.

Tish Shute: Yes, I am sure Google has big plans for the next level of cloud computing and Wave looks at some key challenges. I suppose federation poses some key business problems. I think it was Michael Jones who said to me that it was a bit like socialism in that you have to be willing to give something up for the greater good.

Perhaps federation does not present enough appeal because of its challenges re business models?

Chris Arkenberg: Well, I wonder. I mean there’s got to be some value for their ad platform as ads are moving more towards this personalized experience. Advertising is becoming less of a shotgun blast and more of a very precise, surgical strike. So being able to track user data to such a fine degree to mobilize the appropriate ads around them wherever they are, on any platform, is certainly very valuable to Google and their ad ecology.

Tish Shute: Many people have high hopes that HTML5, by lowering the barrier to entry for browser-style AR, could also pave the way for some interesting AR work.

Chris Arkenberg: Well, as much as I would hope that all the different players are going to come together and establish some shared set of standards, really, what’s happening is a rush to the finish line to be the first… to get the most penetration in the marketplace so that Layar, for example, can say, “It’s official. We’re the platform.” And then comes the consolidation that will follow, where the Googles and the other big players like Qualcomm say, “OK, it’s mature enough. We’ll start buying up all the smaller companies.”

And that’s where the real challenge is right now is that there are no standards. It’s such an immature technology that you have a lot of different players trying to establish the ground rules. And again, this is one of the challenges that faced public virtual worlds, is that you had a lot of different virtual worlds that weren’t talking to each other in any particular way, and that they each had their own development platform. And so you end up with a very fractured ecosystem or set of competing ecosystems, which is kind of what’s happening with AR right now, where a developer has to choose between a number of different new platforms or hedge by deploying across multiple platforms. Basically, the web browser wars are set to be recapitulated by the AR browsers.

Among them, Layar and Metaio seem to be getting the most traction. But there’s still not a really strong case for a unified development ecosystem to emerge.

Tish Shute: So a discussion of ecosystem development brings us back to the Points of Control Map I think. So what do you see as key points of interest for AR developers to watch in the Points of Control Map? And where do you want to sort of put your bets, right? We are still really waiting for mobile social AR to emerge into the mainstream.

Chris Arkenberg: Yes. And that’s primarily the shortcoming of the hardware itself, but also of the accuracy of current GPS technology. That’s another kind of gating factor, because again, AR wants to be able to express the data within a distinct place or object.

So in a lot of ways, other than kind of what we’ve allowed for the broader entertainment purposes, for AR to really work there needs to be more resolution in GPS location, so for it to be truly locative… because it’s OK to tell Foursquare that you’re in Bar X. But if you want to be able to draw data directly on a wall within that bar, or do advertising over the marquee on the front, you need more factors to accurately register those images on a discrete location. So that’s another aspect of the immaturity of AR: it’s still very hard to register things on discrete locations without employing a number of diverse triangulation methods.

Tish Shute: Right. The mobile AR games we see at the moment are really just faking a relationship to the physical world unless they rely on markers or some limited form of natural feature recognition, which is really just a more sophisticated form of markers. But the Qualcomm SDK does offer some opportunities to tie AR media to the world more tightly, as does the Metaio SDK. But in terms of a mobile social AR game that could be like the Cape of Zynga to Foursquare in Location Basin [see the Points of Control map]… we haven’t seen anything close yet.

AR should be able to bring the check-in mode to any object in our environment.

Chris Arkenberg: Yes, yes. And that’s actually one of the early interests I had in the notion of social augmented reality. I wanted a way to tag my community with invisible annotations that only certain people could read, and found pretty quickly that that’s very difficult to do. I mean you can kind of do some regional tagging, like on a beach, for example, but if you wanted to tag the bench that was on the cliff above the beach, it’s very difficult to do that using strictly locative reckoning.

There’s all sorts of really cool social engagement that can be revealed when people are allowed to attach things to the world around them, to the streets they normally pass through, or the points of interest that they normally engage in. The key is being able to author on the fly on the streets and effectively attach it to a discrete object.

Tish Shute: And yes, we do have all kinds of markers and QR codes. But Erick Schonfeld of TechCrunch made a good point about QR codes: “Until QR code scanners become a default feature of most smartphones and they start to become actually useful enough for people to go through the trouble to scan them, they will remain a gee-whiz feature nobody uses.”

Chris Arkenberg: So again, this gets back to competing standards and who gets access to the phone stack, the bundle. Who gets the OEM deal…?

Tish Shute: Yes, the battles for the networks on the Handset Plains are pretty important for AR!

[laughter] I think Layar have made some smart moves on The Handset Plains.

And there are a lot of acquisitions of nearfield technology to look at. If I remember rightly, eBay bought the RedLaser tech from Occipital; now there’s an interesting company. Their panorama stuff rocks!

Chris Arkenberg: Right. There’s a lot of near-field stuff that’s supposed to hit all of the major mobile platforms in the next year or so.

I mean I think where this is heading, in my mind, is basically smart motes. You know, little near-field, wide-range RFIDs, each the size of a tiny square, that you could attach to just about anything and then program to represent your establishment or an object, so that you can start to tag just about anything. You can’t rely on geo to do it, but if you have a near-field chip there that costs maybe two cents in bulk, and you can flash-program it, then you can start to attach data to just about anything.

Tish Shute: Yes, because some things still remain very difficult for near field image recognition technologies like Google Goggles.

Chris Arkenberg: Well, if your phone can interrogate for near-field devices and it detects a chip in its near field, it can then interrogate that chip. The chip may hold flash data itself, or it may point to a local server in the establishment, or it may go out to the cloud and get that data back.

Tish Shute: Yes, there is movement from the top, and open source hardware like Arduino has created an opportunity for all sorts of creativity with instrumented environments. And the handheld sensors in our pockets – our smartphones – create a lot of opportunity for bottom-up innovation too.

Chris Arkenberg: I mean that’s my guess. If you look at what IBM is doing with their Smarter Planet initiative, they’re partnering with a lot of municipalities, and obviously with a lot of businesses and their global supply chains.

But they’re basically working with municipalities and all these stakeholders to instrument their territory, their business, or their city, as it were. So they’re working to provide embedded sensors and the software necessary to read them out and run reports and visualizations. And presumably that software can extend to include some sort of mobile device to interrogate the sensors and read the data.

That’s kind of a top-down approach of a very large global company working with top-down governance bodies to do this. Simultaneously you have the maker crowd experimenting with Arduino and such to build from the grassroots, the bottom up approach.

And that’s primarily gated by the amount of learning it takes to be able to program these devices, to be able to hack them. Typically, the grassroots creators who make these devices don’t have the luxury of very large budgets to make things highly usable and WYSIWYG.

So the bottom up community is a sandbox to create tremendous amounts of innovation, because they are unconstrained by the very real financial needs of the top down innovators. And so you get a lot of fascinating innovation, a very rich ecology from the bottom-up approach, but you don’t get a lot of wide distribution. But that does filter up to and inform the top down approach that has a lot more money to put into this stuff. And it ultimately has to respond to the needs of the marketplace.

I mean if there’s an answer to the question of whether something like AR will succeed through the bottom-up grassroots approach or the top-down industry approach, I would say it would be both. That handsets will be hacked to read the bottom up innovations of the maker community, and handsets will be preprogrammed to read the top down efforts of the IBMs of the world.

Tish Shute: Yes, but I have to say it is very time-consuming hacking phones (I have just lost a few days to this myself so that I could upgrade my G1 to try out the new ARWave client!). Android has obviously been the platform of choice because of its openness, but the iPhone’s business model and its market share in the US sure make it important for developers. It’s like you don’t exist if you don’t have an iPhone app for what you are doing.

Chris Arkenberg: Yeah, and that’s the challenge, because at the end of the day developers prefer not to work for free and a solid, reliable mechanism to monetize their efforts becomes very appealing.

When I look at this map, the Points of Control map, it’s really interesting to me, because what it says to me with respect to AR is that each of these little regions they have drawn out would be a great research project. So every single one of these should be instructive to AR.

In other words, we should be able to look at social networks, the land of search, or the kingdom of ecommerce, and apply some very rigorous critical thinking to say, “How would AR add to this engagement, this experience of gaming, or ecommerce, or content?”

Looking at each of these individually and really meticulously saying, “OK, well yes, it can do this, but how is that different from the current screen media experience, the current web experience that we have of all these types of things?” You know, how can augmented reality really add a new layer of value and experience to these? And I think that process would really trim a lot of the fat from the hopes and dreams of AR and anchor it down into some very pragmatic avenues for development. And then you could start looking at, “Well, OK, what happens when we start combining these?” When we take gaming levels and plug them into the location basin, as you suggested.

Tish Shute: Some of the important platforms for AR, like Google Street View and other mapping technologies that hold out so much hope for AR, don’t appear to have spots on the map. Or am I missing something?

Chris Arkenberg: You mean on the map?

Tish Shute: Yes for the full vision of AR we need sensor integration, computer vision and cool mapping technologies to come together. Do you see where Google Maps and Google Street View… Where would they be?

Chris Arkenberg: Yeah, I mean it’s certainly content, it’s location…

Are you familiar with Earthmine?

Tish Shute: Yes, yes I am, definitely. Earthmine, SimpleGeo, Google Street View, user-generated internet photo sets like Flickr: all of these could be very important to AR, potentially.

Chris Arkenberg: Well, and the interesting thing about Earthmine is that they’re effectively trying to do an extremely precise pixel to pixel location mapping. So they’re taking pictures of cities just like Street View, except they’re using the Z axis to interrogate depth and then using very precise geolocation to attach a GPS signature to each pixel that they’re registering in their images. Effectively, you get a one-to-one data set between pixels and locations. And so you can look at something like Google Street View, and if you point to the side of a building, in theory, it should know exactly where that is.

They’re rolling this out with the idea of being able to tag augmented reality objects in layers directly to surfaces in the real world. So that’s another approach to trying to get accurate registration and to try and create what are essentially mirror worlds. Then your Google Street View becomes a canvas for authoring the blended world, because if you plop a 3D object into Street View on your desktop, and then you go out to that location with your AR headset, you’ll see that 3D object on the actual street.

Tish Shute: There was some experimental work with Google Earth as a platform for a kind of simulated AR, but I suppose Google Earth doesn’t figure in the battle for the network economy, as it never got developed as a platform.

Chris Arkenberg: It hasn’t tried to become a platform, to my knowledge. I know some people are doing stuff with it, but as far as I know, Google owns it, they do it best because they have the best maps, and there’s not a huge ecosystem of development based around it other than content layers.

And my sense of everything else on the Points of Control map is they’re looking more at these sort of platform technologies that…

Tish Shute: Yes, re platforms for growth for AR. Gaming consoles will probably emerge as a significant platform for AR this year.

Chris Arkenberg: There will be much more of a blended reality experience in the living room for sure, and with interactive billboards. Digital mirrors are another area. So I mean if we kind of extend AR to include just blended reality in general, you know, this is moving into our culture through a number of different points. As you mentioned, it will be in the living room, it will be in our department stores where you can preview different outfits in their mirror. We’re already seeing these giant interactive digital billboards in Times Square and other areas.

It’s funny. Blended reality aside, augmented reality is actually a very simple proposition to me in some respects. When I look at this map, augmented reality is just an interface layer to this map in my mind, just as it’s an interface layer to the cloud and an interface layer to the instrumented world. It’s a way to get information out of our devices and onto the world.

Tish Shute: The importance of leveraging existing platforms has become pretty clear. Facebook definitely gave Zynga the opportunity, but would Facebook be so big without Zynga’s social gaming boost?

Chris Arkenberg: I feel that Zynga has definitely helped its growth…But I think Zynga has benefited a lot more from Facebook than Facebook has from Zynga.

Tish Shute: Zynga certainly proved you could build a profitable business on Facebook’s API!

Chris Arkenberg: They did. And they also really validated the Facebook ecosystem and the platform. They really extended it… Zynga benefited from the massive social affordances that Facebook had already architected and developed. They brought gaming directly into Facebook, and particularly, this emerging brand of lightweight social gaming that when you sit it on top of a massive global social network like Facebook, it suddenly lights up.

Tish Shute: AR pioneers should quite carefully go through this map. There is so much to think about here. I’m a kind of fanatic about Streams of Activity in AR. Real time brokerages and their potential for AR is something I am fascinated by. That is one reason I love the ARWave project.

Anselm Hook, to me, is one of the great thinkers in this area of real time brokerages – with his project Angel, and the work of Ushahidi, which is now the platform for augmented foraging (see here). Anselm is now working on AR at PARC which is exciting.

Chris Arkenberg: Well, there are some challenges working with data streams. Presentation and filtering, I think, are a big challenge with any sort of stream, because obviously you have a lot of potential data to manage, to parse, and to make valuable and comprehensible. So I think this is bound very closely to being able to personalize experiences, or to having very discrete, valuable experiences. Disaster relief, for example, I think is an interesting idea that ties into the Pachube type of work. If you had the headset and you were a relief worker, and you had an immediate, lightweight, non-intrusive heads-up overlay with alpha-channel waypoint markers showing you all of the disaster locations or points of need, AR becomes extremely valuable, because it’s primarily a hands-free environment. This is why the military stuff is so interesting.

Tish Shute: Ha! We are running into the eye patch/shades/goggles/sexy specs thing again. But filtering and making streams of activity relevant will be very interesting for AR. Again, that is why I love the Wave Federation Protocol work, because of what they have built into their XMPP extensions. You can have your real-time personal data streams, or community streams, or broadcast publicly – the permissions are built in.

And Thomas Wrobel’s original vision of these layers and channels is only fully expressed if you have the eyewear.

Chris Arkenberg: Well, and it becomes redundant if it’s on a mobile. To use a very basic example, take Twitter: obviously there’s an app that lets you view those streams of activity over the camera stream. But you can already view that real-time data on the screen. Why do you need to see it heads-up?

I really pay attention to what the military is investing in, first because they have a ton of money, but also because they tend to represent the core bio-survival needs of the species… So, when I look at computing, I see this very obvious trend of computers getting smaller and smaller and closer and closer to us because they’re so valuable to our success. They give us so much valuable information for engaging our world on a moment-by-moment basis. So, of course, now we have these tiny little handheld devices that give us access to the global knowledge repositories of human history, because it’s so useful to have that stuff right at hand.

The only impediment now is that it takes one of our hands, if not both of them, to access it. So if you are in the natural world, and ultimately we are all always in the natural world, you want your hands free in order to engage with the world on a physical level.

I see computation, or rather our access to computation, just getting thinner and thinner, and we’ll very soon move into eyewear, and inevitably we’ll move into brain-computer interfaces in some capacity.

So when you’re the disaster worker, or a deployed soldier, or the extreme mountain biker, or the heli-skier, or just an adventurer, there are a lot of very practical reasons to have access to information on a heads-up plane. I see AR as being so profound and so valuable, but we’re getting a glimpse of it in its infancy, and it’s got a ways to go to be able to really contain what it is we’re reaching for.

Tish Shute: I agree.

Chris Arkenberg: And that’s been a big criticism I’ve had of all the existing AR implementations I’ve seen: the UI really needs a revolution. It’s very heavy-handed. It is not dynamic, even though it’s supposed to be. It does not take advantage of transparencies. It treats the screen like a screen. It doesn’t treat the screen like a window onto the real world. When you’re looking at the real world, you don’t want a lot of occlusion. You want very soft-touch indicators of a data shadow behind something that you can then address and then have it call out the information that’s important to you.

Tish Shute: Now, that’s a very nice image you’ve conjured for me there. Do you think more could be done on the smartphone than is being done now? Or are we waiting for the old iShades?

Chris Arkenberg: I think there’s definitely a lot of room for improvement on the smartphone UI. Nobody’s really played around with it much. And again, I think that’s in part that there hasn’t been a really established platform with enough money to fund interesting UI work. We see it in some of the concept demos that float around every now and then.

I guess it’s both a blessing and curse that I’m always five steps ahead of where I’m trying to get to.

Tish Shute: Yeah, I am familiar with that feeling!

Chris Arkenberg: So I’m always trying to reach for the vision even though it’s a bit distant. I think there’s going to be a lot of development on the handsets. But again, I think we need a lot of refinement. We need a lot of real critical analysis of why this is a good thing.

To get back to the original point of Raimo’s comment, it struck me. I knew it, but I had just set it aside as gimmickry. But he’s right. Content is a huge driver for this. Just stuff that’s engaging, and fun, and cool, and shows off the technology so they can get enough money to make it through whatever Trough of Disillusionment may be waiting.

Tish Shute: Yeah, don’t underestimate the Planes of Content! They are a great place to get interest and money to keep AR technology moving on, right?

Chris Arkenberg: Yeah, yeah. Because, you know, there’s a lot of freedom there. And you can piggyback on all the rest of the content that’s out there and jump on memes and marketing objectives, etc…

And there’s a lot of stuff… I’m blanking on some of the names, but there are these historical recreations of city streets. There’s a street in London where they overlaid historical photos in a really compelling experience. [Museum of London - http://www.museumoflondon.org.uk/] Again, I’m completely forgetting the attributions, but those are the types of things that can really be pursued on the existing platforms. That stuff is really compelling and really cool.

I heard of another interesting use case – and I should say that I can’t find attributions to this anywhere on the web and I may be paraphrasing or misrepresenting the actual work, but I think the concept is worth exploring anyway. The idea was that you could take the locations of border checkpoints and conflict sites in Palestine and Israel and visually overlay them on an AR layer in San Francisco. And it would do some sort of transposition where you could virtually view these things in San Francisco with the same locational mapping superimposed. So you could see where the checkpoints were. You could see where the wall was. You could see where suicide bombings were and where there had been conflicts. [I cannot find any citations for this!]

Tish Shute: But with an AR view? Why would you use an AR view if you are in San Francisco, then?

Chris Arkenberg: Because it superimposes two realities, translating the Gaza conflict into San Francisco as you are walking around. You can interrogate the world. There’s a discoverability aspect where you’re using the headset, or rather the handset, to reveal things that you could not see otherwise in your city. It was done as an art piece, but a provocative, obviously political one.

Tish Shute: Very interesting. I’d love to see that. Getting away from the idea that you actually have to have this one-to-one relationship between the data and the world is kind of nice, isn’t it? Well, not one-to-one, but a very literal one… getting away from that literalness is kind of good.
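Since the piece itself can’t be traced, here is only one plausible way such a transposition could work: record each site’s offset in metres from a reference point in the source region, then re-create the same offset around a reference point in San Francisco. The Python sketch below assumes a flat-earth (equirectangular) approximation, which is reasonable over a few kilometres; the function names and anchor coordinates are hypothetical and not taken from the artwork.

```python
import math

# Mean Earth radius in metres. The flat-earth (equirectangular) approximation
# used below is only reasonable over distances of a few kilometres.
EARTH_RADIUS_M = 6_371_000.0


def offset_from_anchor(anchor, point):
    """Return the (east, north) offset in metres of `point` from `anchor`.

    Both arguments are (latitude, longitude) pairs in degrees.
    """
    lat0, lon0 = map(math.radians, anchor)
    lat, lon = map(math.radians, point)
    east = (lon - lon0) * math.cos(lat0) * EARTH_RADIUS_M
    north = (lat - lat0) * EARTH_RADIUS_M
    return east, north


def apply_offset(anchor, offset):
    """Inverse of offset_from_anchor: place an (east, north) offset around `anchor`."""
    lat0, lon0 = map(math.radians, anchor)
    east, north = offset
    lat = lat0 + north / EARTH_RADIUS_M
    lon = lon0 + east / (math.cos(lat0) * EARTH_RADIUS_M)
    return math.degrees(lat), math.degrees(lon)


def transpose_sites(sites, source_anchor, target_anchor):
    """Hypothetical transposition: keep each site's metric offset from the
    source anchor and re-create it around the target anchor."""
    return [apply_offset(target_anchor, offset_from_anchor(source_anchor, s))
            for s in sites]


if __name__ == "__main__":
    # Hypothetical anchors: one point in the source region, one in San Francisco.
    source_anchor = (31.5, 34.47)
    target_anchor = (37.7749, -122.4194)
    sites = [(31.51, 34.47), (31.5, 34.48)]
    for site in transpose_sites(sites, source_anchor, target_anchor):
        print(f"{site[0]:.5f}, {site[1]:.5f}")
```

In this sketch the first example site ends up about 1.1 km north of the San Francisco anchor and the second roughly 0.95 km east of it, the same metric offsets they have in the source region. Working in metres rather than raw degrees keeps east-west distances consistent even though the two cities sit at different latitudes.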

Chris Arkenberg: And that’s a possibility of virtual reality and augmented reality merging, that maybe virtual reality is actually going to do best by coming out of the box and writing itself over our reality, so that as you are walking around, you are no longer seeing San Francisco, but you are seeing part of EverQuest or World of Warcraft.

Tish Shute: Well, this is where Bruce Sterling gets to the point he made in his keynote for ARE 2010: that if we actually have viable AR eyewear, then you get the gothic stepsister of AR, VR, rising from the grave! He asks whether the very charm of augmented reality is in fact that it adds to rather than subtracts from your engagement with the world, and whether getting sucked back into the black hole of VR might not be so great.

Chris Arkenberg: And then you get all sorts of interesting challenges to social cohesion if you have a lot of different people experiencing very different worlds, effectively. If there is no real consensual reality and a majority of your local populace is, in fact, experiencing very different and unique versions of the world, what does that do to social cohesion? How does that reinforce tribalism, for example, when only you and certain others get to opt in to a particular layer view of the world?

Tish Shute: Yes, Jamais Cascio wrote an interesting piece on AR and social cohesion a while back.

An eye patch is a more logical vision than goggles in many ways, but I suppose the loss is stereo vision?

Chris Arkenberg: And actually, there were developments in military helicopter technology many years ago that used a single pane square of glass over the eye mounted to the helmets of pilots. And then they drew various bits of heads-up information on it. So that ensures that you’re having a real strong engagement with the real world, which, obviously, when you’re a helicopter pilot is quite important. But you still have access to the data layer of the invisible world.

Tish Shute: I just went to Hadoop World and I have to say, I was awestruck by how big that’s got. I mean Hadoop has gone from zero to huge in just a few years. It’s like now everyone has the power of Google’s BigTable at their fingertips.

What’s the play for AR in the land of search?

I could imagine Hadoop being a very powerful tool for AR analytics.

Have you got any thoughts on the land of search and AR? Of course, visual search is proceeding at a fast pace and there is a lot of promise for integrations with AR in the future, but the latency for visual search is still pretty high.

Chris Arkenberg: In the near term, not a lot. In the medium term, there’s a larger trend towards virtual agents that you can program or teach to keep watch over things for you as an effort to scale down the data overload. So search is something that’s going to become more personalized and more active. There’s a movement to make it so people can essentially deputize these agents to be always searching for them; to be out there looking for the things that they have told these agents are important to them.

So active search for AR, I think, presents some challenges, obviously because you typically need text input or voice input. Voice input, I think, is much more achievable than text input for AR. But I can certainly imagine an AR layer that is being serviced by these agents that we have roaming around the web for us, reconciling their visual view of the world with our personalizations. AR apps are contextually aware, so the app knows that if you’re downtown, it’s not going to give you a ton of information about Software as a Service infrastructure, or what have you. Instead, it’s going to be handing you little tidbits about a particular clothing brand you’ve opted in to follow and information about music venues and schedules, for example. Or perhaps you’ll be on the lookout for other users that have opted in to publicly tag themselves as a member of this or that affinity.

I keep coming back to this idea of AR as really just a simple visualization layer that all of these other technologies can potentially feed into. So in that sense, search becomes a passive thing that AR is just simply presenting to you in a heads-up, hands-free, or potentially hands-free environment.

Tish Shute: Yes, the big challenge is the stepping stones to that point! Small steps that keep interest going into developing the underlying technology (and not just in research labs!) that will bring us that interface. We have seen some movement already with Qualcomm.

Chris Arkenberg: And there are bandwidth issues as well, as we can see with Google Goggles, which is a great idea for visual search. But you have to take a picture and send it to the cloud and wait for your results. It’s not a real-time, dynamic interrogation of the world.

Tish Shute: Yes, we are really only at the very beginning of AR being ready for prime time… It would be interesting to ask AR developers how many of them use AR on a daily basis.

Chris Arkenberg: I think a lot of us are just informed by the sci-fi myths and fascinated with the potential now that it’s starting to become real. But I think we all kind of get that it’s still extraordinarily young. I mean the web is extraordinarily young. And AR is itself far younger in a lot of ways in its implementations.

Everybody has a lot of excitement about all of the great potentials that are being unleashed by this great wave of the Internet and the web and ubiquitous mobile computing. So that’s why, you know, you look at that map and we talk about AR and you can’t talk about any of the stuff without talking about all of it, in a lot of ways, particularly with something like AR where it’s so ultimately agnostic and could be completely pervasive across all of these layers.

So my fascination is with the future, and I measure our progress towards it by the young, nascent offerings from the platform players and the developers. And yeah, a lot of it is… it’s akin to getting that first triangle on the screen in 3D. You know, when the renderer finally works and you get a triangle on the screen, and you go, “Oh my God, it renders.” And then you can start to really build polygons and build objects, and start doing Boolean operations, and get light and rendering in there, and textures, and on, and on, and on.

So I’m fascinated by the Layars and the Metaios…

[laughter]

Tish Shute: Yes, and hats off to all the players in the emerging industry, Layar, Metaio, Ogmento, Total Immersion, and all the others who are finding clever ways to bring fun aspects of AR into the mainstream and fuel interest to take the technology to the next level.

Chris Arkenberg: Absolutely. And the hype cycle is very valuable. It has really helped launch the AR industry. It’s brought a lot of eyes, and it’s brought a lot of money into the industry. And it’s forcing people like us to have these conversations to understand how to refine its growth and really focus on the potential in all these different venues, whether it’s trying to save lives, or better understand your city, or have really compelling entertainment experiences.

Everybody’s excited, and everybody’s sharing, and everybody’s trying to move it forward in a way that’s the most productive.


3 Trackbacks For This Post

October 27th, 2010 at 12:27 pm

[...] Shute over at UgoTrade was kind enough to post a conversation we recently had about augmented reality, the Gartner Hype Cycle, and the O’Reilly Web 2.0 Points of Control map. Chris Arkenberg: [...]

October 31st, 2010 at 2:37 pm

[...] “AR is not going to work well for most things but it’s going to be very good for certain uses. Right now I’m very keen at trying to understand what those things might be”. Tish Shute interviews Chris Arkenberg. [...]

October 31st, 2010 at 4:29 pm

[...] community to discuss the Points of Control map.   See my discussion with Chris Arkenberg here, Platforms for Growth and Points of Control for Augmented Reality. The recording of John Battelle’s and Tim O’Reilly’s webcast on Points of [...]