Wilfred Pinfold, Director, Extreme Scale Programs for Intel, and the Supercomputing Conference general chair, is working with some Intel colleagues to make a project called ScienceSim the centerpiece of a special workshop event at the SC09 conference (see Supercomputing Conference, an ACM and IEEE Computer Society sponsored event).

Recently, I interviewed Wilf Pinfold (see interview below), Mic Bowman (also see my previous interview here), and John A. Hengeveld (see interview below). I wanted to find out: What are the underlying goals of this SC conference program? Why are members of the SC community being encouraged to participate with the ScienceSim environment? What projects are beginning to emerge? And what are Intel’s goals in giving infrastructure support to further the conversation between high performance computing and collaborative virtual worlds?

The vision of creating new ways to collaborate and interact with big data does seem to be one of the more significant steps we can take at a time when we find many of our most complex systems roiling and threatening total collapse. As Tim O’Reilly has pointed out – from financial markets to the climate, the complex systems we depend on for our survival seem to be reaching their limits.

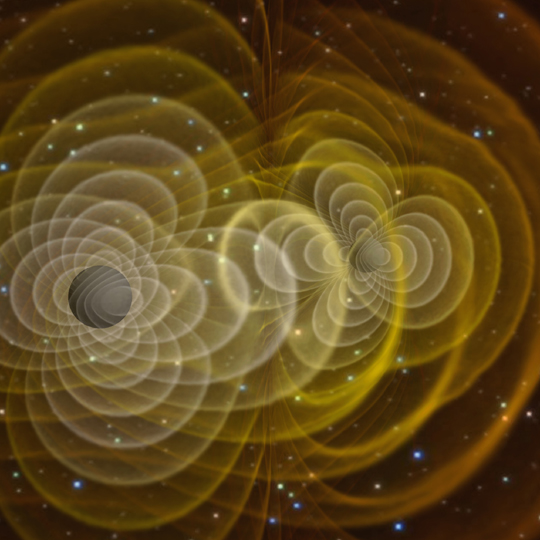

But how can we get from the place we are now (see this example of an n-body simulation in OpenSim) to the point where we can collaboratively steer, from our visualizations, big data simulations of climate change, financial markets, or the depths of the universe? The picture opening this post is a:

Frame from a 3D simulation of gravitational waves produced by merging black holes, representing the largest astrophysical calculation ever performed on a NASA supercomputer. The honeycomb structures are the contours of the strong gravitational field near the black holes. Credit: C. Henze, NASA

Wilf Pinfold explained to me that part of the reason to begin a dialogue on collaborative visualization at SC ’09 is that super computing communities (which tend to be highly skilled and visionary) have played key roles in internet development in the past. Wilf pointed out that key browser technology developed out of these communities in the early days of the internet; see this Wikipedia entry that gives a background on the role of NCSA (National Center for Supercomputing Applications).

The hope is, while there are many obstacles to overcome, the super computing community has both the skills and motivation to find solutions to creating collaborative environments capable of the kind of rapid data movement that scientific/big data visualization needs. Solving the problems of realtime collaborative interaction with big data will have many ramifications for the way we understand virtual reality, the metaverse, virtual worlds (all these terms are becoming increasingly inadequate for cyberspace in the age of ubiquitous computing, an argument I will make in another post!).

There have already been a number of blogs on ScienceSim (see Virtual World News, New World Notes, Vint Falken, and Daneel Ariantho). There have also been Intel blogs: see this post by John A. Hengeveld (a senior business strategist working with Intel planners and researchers to accelerate the adoption of Immersive Connected Experiences) and Intel CTO Justin Rattner’s post announcing the project this November.

But to blow my own horn a little, I think I was the first to blog the encounter between OpenSim and supercomputing (an encounter I to some degree provoked by making the introductions); see this post. So I have been following the ScienceSim initiative with great interest.

Very shortly after N-body astrophysicists Piet Hut and Jun Makino met Genkii, a Tokyo-based strategic company working with OpenSim, the first N-body simulation appeared in OpenSim. Hut and Makino are the creators of GRAPE (an acronym for “gravity pipeline” and an intended pun on the Apple line of computers), a super computer that will become one of the fastest super computers in the world (again). And in a matter of weeks this video went up on YouTube, the result of a collaboration between MICA and Genkii. But the nirvana of being able to create visualizations using real time data from super computers that can be steered from a collaborative environment is still a ways off.

Super computing communities tend to be geographically very dispersed, and researchers often find themselves far from simulation facilities, so there is both the motivation and the skills to pioneer new tools for collaborative visualization. I know that astrophysicists certainly see their value (Piet Hut has some profound ideas on this). Piet Hut and others (see here for more) have been pioneering the use of VWs for collaboration. There are two virtual world organizations, both founded by Piet Hut and collaborators, that are currently exploring the use of OpenSim for scientific visualizations. One is specifically aimed at astrophysics: MICA, the Meta Institute for Computational Astrophysics. The other is aimed broadly at interdisciplinary collaborations in and beyond science: Kira, a 12-year-old organization focused on ‘science in context’. As of last week, there are two weekly workshops sponsored jointly by Kira and MICA that explore the use of OpenSim, ScienceSim, and other virtual worlds. One of them is “Stellar Dynamics in a Virtual Universe Workshop” and the other is “ReLaM: Relocatable Laboratories in the Metaverse.”

MICA was founded two years ago by Piet Hut within the virtual world of Qwaq Forums (see the paper “Virtual Laboratories and Virtual Worlds”). The Kira Institute is much older: it was founded in 1997. Later this month, on February 24, Kira will celebrate its 12th anniversary with a presentation of talks, a panel discussion, and a series of workshops. See the Kira Calendar for the main event, and the Kira Japan branch for a special mixed RL/SL event in Tokyo. During both events, Junichi Ushiba will give a talk about his research in which he let paralyzed patients steer avatars using only brain waves.

Other early adopters of ScienceSim include Tom Murphy, who teaches computer science at Contra Costa College. Prior to teaching, Tom spent 35+ years working for supercomputer manufacturers. Tom said:

it is very natural for me to find significantly new ways to visualize and interact with scientific mathematical models via ScienceSim and the OpenSim software behind it. ScienceSim also allows us to interact with each other and teach students in new ways.

Also, Charlie Peck, chair of the SC09 Education Program (his day job is teaching computer science at Earlham College in Richmond, IN), is working with Wilf Pinfold, Tom Murphy and others “to explore how 3D Internet/metaverse technology can be used to support science education and outreach.”

Cristina Videira Lopes, University of California, Irvine, is doing very interesting work on road and pedestrian traffic simulations. Crista is also the creator of hypergrid in OpenSim.

People Meet People Meet Data: A Conversation With Mic Bowman

Screenshot of ScienceSim from Daneel Ariantho

Tish: How does this work on ScienceSim fit into a wider dialogue on linked data? Where people meet people meet data, and where data meets data?

Mic: Yeah… that’s hard by the way. Open integration of data (and more interestingly the functions on data) is very hard if it comes from multiple, independent sources.

That’s the people part. For example, if Crista can build a model of the UCI campus, somebody else builds an accurate model of several cars, and another expert provides the simulation that computes the pollution generated by those cars in that environment… it’s bringing people together to solve real problems, no matter how far apart physically.

Tish: You mention three different simulations here. Could you explain why it is difficult to integrate data from multiple sources?

Mic: The problem of integrating data from multiple sources has always been one of understanding & interpreting both the syntax & semantics of the data. Even relatively simple things like multiple date formats require explicit translation. More complex formats, like the many formats in which data is represented for urban planning, are barely computable independently, let alone in conjunction with data from other sources (each with its own representation for data). It’s often the expertise & the collaboration of bringing people (and their bag of tools) together that solves these problems.
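To make Mic's point about date formats concrete, here is a minimal Python sketch of the kind of explicit translation he describes. The three source names and their formats are purely hypothetical (they are not taken from ScienceSim or any of the projects mentioned); the idea is only that each independent source needs its own declared syntax before its data can be combined with anyone else's.

```python
from datetime import date, datetime

# Hypothetical sources, each writing the same calendar day in its own syntax.
# These names and formats are assumptions made up for illustration.
SOURCE_FORMATS = {
    "traffic_model": "%m/%d/%Y",    # e.g. 02/24/2009
    "campus_model": "%d.%m.%Y",     # e.g. 24.02.2009
    "pollution_model": "%Y-%m-%d",  # e.g. 2009-02-24
}


def normalize_date(source: str, raw: str) -> date:
    """Translate a source-specific date string into one shared representation."""
    fmt = SOURCE_FORMATS[source]  # raises KeyError for an undeclared source
    return datetime.strptime(raw.strip(), fmt).date()


# Three records that look different but describe the same day.
records = [
    ("traffic_model", "02/24/2009"),
    ("campus_model", "24.02.2009"),
    ("pollution_model", "2009-02-24"),
]
normalized = [normalize_date(source, raw) for source, raw in records]
all_same_day = len(set(normalized)) == 1
```

Note that this only handles syntax. Whether the three sources mean the same thing by a date (local time or UTC, start or end of a measurement) is the semantic half of the problem, and that is where the people come in.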

Tish: And in this case the bag of tools is high performance modeling..?

Mic: High performance modeling, rich visualizations and data. It’s the three that matter… data, function, and interface.

Tish: Some people have a very hard time wrapping their head around the fact that anything that seems related to Second Life can do this. Can you explain more about the difference between SL and OpenSim?

Mic: OpenSim potentially improves data & function because it can be extended through region modules. Region modules hook directly into the simulator to provide additional functionality. For example, a region module could be implemented to drive the behavior of objects in a virtual world based on a protein folding model.

We need to work on additional viewer capabilities to address the user interface limitations.

Tish: Yes, Rob Smart’s (IBM) recent data integrations with OpenSim (see here) are impressive. Re viewers, one of the biggest objections to virtual worlds is the mouse pushing and PC-tied interface.

Mic: There are great opportunities for improving the interface.

Tish: Yes, I really like where Andy Piper’s experiments with Haptic Interfaces for OpenSim lead, see Haptic Fantastic! And I think that we will have cyberspace ubiquitous in our environment, not just stuck on a PC screen, sooner than we think.

Mic: Mic’s opinion (not Intel): until we get souped up sunglasses with HD screens embedded (or writing directly into the eye) there will always be a role for the PC/Console/TV. But, it isn’t about the device… it’s about the services projected through the device… sometimes you’ll want a very rich experience… sometimes you’ll want an experience NOW wherever you are.

Tish: I think people are only just realizing that VWs will be a now and wherever you are experience very soon.

Mic: That’s the critical observation: the virtual world is not an application you run… it’s a “place”… and you interact with it where you are or maybe interact through it. Speaking for Intel… it is the spectrum of experiences that are critical to support.

Interview with Wilfred Pinfold

Picture from National Science Foundation – “Climate Computer Modeling Heats Up.”

Tish Shute: I know your day job for Intel is in High Performance computing. Could you explain to me a little bit more about what you are working on in this regard – a mini state of play for high performance computing from your perspective?

Wilfred Pinfold: My title is Director, Extreme Scale Programs. This program drives a research agenda that will put in place the technologies required to make Exa (10^18) scale systems by 2015. The current generation of high performance computers are Peta (10^15) scale, so this is a 1000x increase in performance, and this increase will require significant improvements in power efficiency, reliability, scalability and new techniques for dealing with locality and parallelism.

Tish: The nirvana in terms of linking supercomputers to the collaborative spaces of immersive virtual worlds is to be able to create visualizations using real time data from super computers in collaborative VW environments, and ultimately for researchers to be able to collaborate and steer their simulations from their visualizations. Where are we at now in terms of scientific data visualization in VWs? And what are the current obstacles to using realtime data from super computers?

Wilf: Being able to steer a simulation from a visualization requires both a visualization interface that allows interaction and a simulation that operates at a speed that is responsive in interactive timeframes. For example, a weather model that predicts the path of a hurricane would need to operate at something close to 1000x real time. This would run through a day in ~1.5 minutes, allowing an operator to run the simulation over several days multiple times with different parameters in a single sitting to understand the likelihood of certain outcomes.
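Wilf's numbers are easy to check. The short Python sketch below works through the arithmetic: at 1000x real time, one simulated day takes 86,400 / 1000 = 86.4 seconds, or about 1.44 minutes of wall-clock time. The five-day forecast window and the one-hour sitting are my own illustrative assumptions, not figures from the interview.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds in one simulated day


def wall_clock_minutes(simulated_days: float, speedup: float) -> float:
    """Wall-clock minutes needed to simulate a span at a given multiple of real time."""
    return simulated_days * SECONDS_PER_DAY / speedup / 60


# The figure from the interview: one simulated day at 1000x real time.
one_day = wall_clock_minutes(1, 1000)      # about 1.44 minutes

# Assumed for illustration: a five-day hurricane track and a one-hour sitting.
five_days = wall_clock_minutes(5, 1000)    # about 7.2 minutes per run
runs_per_hour = int(60 // five_days)       # complete runs that fit in an hour

print(f"one day: {one_day:.2f} min, five days: {five_days:.2f} min, "
      f"runs per hour: {runs_per_hour}")
```

Under those assumptions an operator gets roughly eight complete five-day runs in an hour, which is the kind of turnaround that makes trying different parameters in a single sitting plausible.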

Tish: Do you see a networked online collaborative virtual world being capable of being a visualization interface that allows meaningful interaction with the hurricane scenario you describe in the near future (next 6 to 18 months)?

Wilf: I was using the hurricane example to explain the usage model not an imminent capability. Hurricane Simulation: Accurate hurricane simulations require multiscale models able to resolve the global forces working on the storm as well as the microforces that define precipitation. We can build useful weather models today that run faster than real time (anything slower is not useful for prediction) but we are a long way from the ideal.

Visualization: There are excellent visualizations of weather systems but I have not yet seen a virtual world that can track a simulation and allow the scientist or team of scientists to see what is going on at both the macro scale and zoom in to see precipitation conditions. Today’s supercomputers are much better at this than they were a few years ago but they are a long way from ideal.

Tish: Open Source Virtual World technologies are pretty diverse in their approaches; Croquet, Sun’s Wonderland and OpenSim are quite different and have different strengths and weaknesses. As you have become more familiar with OpenSim, what have you found about the technology that particularly lends itself to this project, ScienceSim? (Mic mentioned Crista’s hypergrid code, for example; modularity is another feature often cited.)

Wilf: We have found OpenSim’s client server model is well suited to the visualization model and the ability to put the server next to the supercomputer producing the visualization data is critical. We are however very interested in other environments and encourage papers, demonstrations and research on any of these platforms at the conference.

Interview with John A. Hengeveld

Tish Shute: OpenSim’s dependence on Second Life based viewers is sometimes cited as a limitation, and sometimes as a strength. What are your views on this? What would a strong open viewer project directed at science applications bring to the picture?

John Hengeveld: There may be more than one strong open viewer project required for OpenSim compatible experiences. The strength of the Hippo viewer, for example, is availability and its weakness is the size of the client. We would love a ubiquitous client.. that runs on all platforms, but each hardware platform brings tradeoffs and restrictions of its own. Today, probably all of the folks innovating in the space can deal with the size of a very fat rich client app.. they have big computers anyway. But as we get into more 3D entertainment and augmented reality applications.. virtual mall, collaboration apps.. etc… there is a great deal of room to optimize for the specific experience. Balancing visual experience with bandwidth and compute performance available .. tying into standard browsers, etc… people have done some of this work.. and I think all of it adds to the usefulness of these worlds.

Tish: Integrating high-end game engines and OpenSim opens up new possibilities. But licensing issues have been an obstacle. Could a project like ScienceSim get a non-commercial license on a high-end game engine? What would that bring to the picture?

John: Anything is possible. Game engines can give a great deal of design power for high value experiences, but the programming of these experiences must be simplified. Mainstream adoption in enterprise can’t be premised on the programming model of studio games… that’s a big step to get over I think. There are very interesting possibilities when we take that step tho. Simulation, training, agents of various types (I just finished watching “The Matrix” for like the billionth time… I think agents are cool…)

Tish: Where does Larrabee fit into the picture of ScienceSim and next generation virtual worlds?

John: We are all very excited about the Larrabee architecture and its application to work loads like next generation virtual worlds, both in the client.. delivering immersive reality.. and someday potentially in a distributed architecture simulating and producing these worlds. For Intel CVC is an all play. Atom will be used in strong mobile clients. Core will be used in Enterprise PCs, Laptops and Desktops. Xeon will be simulating these environments and handling the data communication, and whatever we brand Larrabee… will be enabling compelling visual experiences. Oh.. and our software products (Havok, tools and others) will be building blocks in knitting all this together. Larrabee is a part, but there are a lot of other pieces in our vision…

Tish: If the kind of rapid data movement that scientific visualization needs is achieved in virtual worlds, this will be quite a game changer for business applications of VWs too. Also it will blur the boundaries between what we call virtual worlds and mirror worlds. It seems to me this kind of rapid data movement is a vital step towards what Mic described to me as Intel’s vision of CVC: “Connected Visual Computing is the union of three application domains: mmog, metaverse, and paraverse (or augmented reality).” It almost seems to me that if you achieve your goals for ScienceSim you will change how we think about virtual worlds in general. What do you think?

John: I certainly hope so.. Part of our goal is to stimulate innovation in the technology and usage models that will enable broad mainstream adoption of CVC based applications (what we categorize as immersive connected experiences).  By tackling the scientific visualization problem, we hope to find the key technology barriers and encourage the ecosystem to solve them.

Tish: To me virtual worlds and augmented reality should be complementary and connected experiences. How do you see this connection evolving?

John: We certainly see them as related. In the long term, there are many common building blocks.. but they aren’t united per se. It’s about the user experience, and in some usages these two are almost identical… in some.. they don’t look or feel at all alike… the viewer is distinct by a lot. Our approach is to enable building blocks with which people can quickly build out usages that are robust.

Tish: What is Intel’s vision for ubiquitous mobile computing and an internet of objects? How can high performance computing be an enabler for this vision?

John: Mobile computing is a central part of our life, culture and community in economically enabled economies. It feeds the data of our decisions, it connects us to entertainment, it is the access point to our soapboxes, pulpits, economy and families. This creates a massive increase in data, a massive increase in interactions, transactions and visualizations. While many HPC applications will be behind the scenes (finance, health, energy, visual analytics and others), HPC will emerge as a part of a scale solution to serving some of this increase… particularly that part where interactions and visualizations are complex or compelling.. or where scale enables the usage per se .. I talked about my love of agents earlier.. and some of that comes in here. Compute working behind the scenes to help manage the data complexity, manage some of the base interactions between ourselves and technology. The other thing we talk about internally is the “Hannah Montana usage” where millions of people use their mobile devices to access and participate (using the sensors in the device) in an interactive live concert. When Miley hears the applause of a virtual interactive audience… and can scream back at them.. we’re there. Access to ubiquitous compute will be mobile, and interactive experiences will be complex.. and HPC can help make that real. Watch out for the mental trap that HPC is always high end super compute clusters tho… the “mainstream HPC”.. smaller clusters… high threads, etc… will play a key part in all of this as well.

Interesting that John ended on this point as this just came in from Wired.
