Images from Mitsuo Iso’s Denno Coil, the game “Metroid Prime,” and Terminator.

Thomas Wrobel, Sophia Parafina, Joe Lamantia, Matthieu Pierce, and I will lead a session tomorrow for AR DevCampNYC introducing the AR Wave Project. Thomas, Joe and Matthieu will participate via Skype (10am to 11.30am EST), and Sophia Parafina and I will both be at AR DevCampNYC at The Open Planning Project (TOPP) office. The PyGoWave crew will be introducing PyGoWave via LiveStream.

From 1.30pm to 2.30pm EST there will be a shared PyGoWave/AR Wave session with Mountain View (if bandwidth permits).

The Skype conference will be at ardevcampnyc. To participate in Wave, please join the public Wave, AR Wave: AR DevCamp Session. There is also an AR Wave Wiki up now – see here.

Dimitri Darras (avatar Dimitri Illios) is working on streaming the AR DevCampNYC sessions into Second Life, SLURL here.

Thomas has done a very nice introduction and FAQ below. This should help people new to this project to get up to speed quickly.

There are already several Waves that show the history of this project including: AR Wave: Augmented Reality Framework Development, AR Wave Use Cases, PyGoWave AR Tech Discussion, AR Wave Augmented Reality Wave Development, AR Wave / Muku Organization and Admin.

Also, I have several posts for people interested in more of the background, including: The Next Wave of AR: Mobile Social Interaction Right Here, Right Now!, AR Wave: Layers and Channels of Social Augmented Experiences, Total Immersion and the “Transfigured City:” Shared Augmented Realities, the “Web Squared Era,” and Google Wave.

Thomas uses the term Arn (augmented reality network), which is one of the candidate names for the project; Muku (crest of a Wave) is another suggestion. Thomas’ intro and FAQ below can also be found here.

What is the AR Wave Project?

In simple terms, it’s a protocol, currently being developed, for storing geolocated data on Wave servers.

We believe this will help lay the foundations for an open, universally accessible, and decentralised system for shared augmented reality overlays which various clients can connect to and use.

This AR Network should spark much more rapid adoption of AR technologies, give existing browsers more functionality, and provide the network infrastructure that allows many of the fictional depictions of AR to become a reality one day.

The AR Network.

When we speak of a future AR Network, we mean one as universal and as standard as the internet. One where people can connect from any number of devices, and without additional downloads, experience the majority of the content.

Where people can just point their phone, webcam, or pair of AR glasses anywhere a virtual object should be, and they will see it. The user experience is seamless: AR comes to them without them needing to “prepare” their device for it.

The Arn should be an inclusive and open platform to which any number of devices can connect, and where anyone can make and host their own location-specific models or data.

It should allow people to communicate both publicly and privately, and not have their vision constantly cluttered with things they don’t want to see.

This is our vision, and we think a Wave protocol will help it become a reality.

Why Wave?

Wave combines the advantages of real-time communication with those of persistent hosting of data. It is both like IRC and like a Wiki. It allows anyone to create a Wave and share it with anyone else. It allows Waves to be edited at the same time by many people, or used as a private reference for just one person.

These are all incredibly useful properties for any AR experience. Moreover, Wave is open: anyone can make a server or client for Wave. Better yet, these servers will exchange data with each other, providing a seamless world for the user: a single login will let you browse the whole world of public waves, regardless of who’s providing or hosting the data. Wave is also quite scalable and secure: data is only exchanged when necessary, and will stay local to just one server if no one else needs to view it.

Wave allows bots to run on it, so blips in a wave can be automatically updated, created or destroyed based on any criteria the coders choose. Wave even allows the playback of all edits since the wave was created.

For all these reasons and a few more, Wave makes a great platform for AR.

How?

In basic terms, we will devise a standard way to geolocate a bit of data and store it as a Blip within a wave.

This data could be a 3d mesh, a bit of text, or even a piece of audio.

Then various clients on various devices could log on, locate, interpret and display this data as they see fit.
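To make the "locate" step a little more concrete, here is a minimal sketch of how a client might pick out the blips near the user. It assumes positions are plain latitude/longitude in degrees on a spherical Earth, which is only one of the coordinate systems the spec may end up allowing, and the names `GeoBlip`, `distanceMetres` and `blipsNear` are made up for illustration rather than taken from the project.

```cpp
#include <cassert>
#include <cmath>
#include <string>
#include <vector>

// Hypothetical, simplified stand-in for a geolocated blip (not the ARBlip spec).
struct GeoBlip {
    std::string mimeType;  // e.g. "text/plain"
    std::string data;      // inline text, or a link to larger data
    double latDeg;         // latitude in degrees (assumed coordinate system)
    double lonDeg;         // longitude in degrees
};

// Great-circle distance in metres using the haversine formula (spherical Earth).
double distanceMetres(double lat1Deg, double lon1Deg, double lat2Deg, double lon2Deg) {
    const double kPi = 3.14159265358979323846;
    const double kEarthRadiusM = 6371000.0;
    const double toRad = kPi / 180.0;
    const double dLat = (lat2Deg - lat1Deg) * toRad;
    const double dLon = (lon2Deg - lon1Deg) * toRad;
    const double a = std::sin(dLat / 2) * std::sin(dLat / 2) +
                     std::cos(lat1Deg * toRad) * std::cos(lat2Deg * toRad) *
                     std::sin(dLon / 2) * std::sin(dLon / 2);
    return 2.0 * kEarthRadiusM * std::atan2(std::sqrt(a), std::sqrt(1.0 - a));
}

// Return the blips of one wave that lie within radiusM metres of the viewer.
std::vector<GeoBlip> blipsNear(const std::vector<GeoBlip>& wave,
                               double viewerLatDeg, double viewerLonDeg, double radiusM) {
    assert(radiusM >= 0.0);
    std::vector<GeoBlip> result;
    for (const GeoBlip& blip : wave) {
        if (distanceMetres(viewerLatDeg, viewerLonDeg, blip.latDeg, blip.lonDeg) <= radiusM) {
            result.push_back(blip);
        }
    }
    return result;
}
```

How the returned blips are then drawn (a 2D list, a camera overlay, something else) would still be entirely up to the client.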

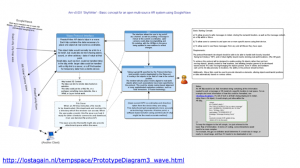

A typical example of this might be holding up your phone and seeing messages written by your friends and family in the locations where they are relevant.

You could see an arrow hovering over the café where you’re meeting a friend, notes above their flat saying if they are in or out, or messages by shops telling you to pick up the particular brand of cereal they like.

This data would be personal to just yourself and whoever you invite to share that wave.

Other forms of data could be public, like city-maps, online games, or historical landmarks being recreated. Custom views of the world with data for entertainment, commercial, environmental or informative purposes.

The possibilities with geolocated data are endless, as are the various ways to display and make use of them.

One of the things I’m most passionate about is people being able to see many different types of data, both public and private at the same time and from many different sources at once.

For instance, if you’re playing an AR game, why shouldn’t your chat window be viewable at the same time?

If you have skinned your environment with a custom view of the world, why shouldn’t you also see mapping or restaurant recommendations?

The ways to present these layers of data and toggle them on/off in the most intuitive and flexible ways would be a task for the client makers, and I’m sure we will see many innovations in those areas.

But using Wave at least provides the framework for having multiple information sources controlled by many different people yet accessible, and user-submittable, via the same protocol.
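As a rough illustration of the layering idea, a client could treat each wave it has joined as a layer with an on/off switch and draw the union of whatever is switched on. This is only a sketch: `Layer`, `itemsToDraw` and `setLayerVisible` are hypothetical names, and a real client would hold full blips rather than text labels.

```cpp
#include <string>
#include <vector>

// Hypothetical client-side view of one wave used as a layer.
struct Layer {
    std::string waveId;              // which wave this layer comes from
    bool visible;                    // whether the user has it switched on
    std::vector<std::string> items;  // labels standing in for the wave's blips
};

// Collect what should be drawn: every item from every layer currently switched on.
std::vector<std::string> itemsToDraw(const std::vector<Layer>& layers) {
    std::vector<std::string> out;
    for (const Layer& layer : layers) {
        if (!layer.visible) continue;
        out.insert(out.end(), layer.items.begin(), layer.items.end());
    }
    return out;
}

// Toggle a layer by wave id; returns false if no such layer is loaded.
bool setLayerVisible(std::vector<Layer>& layers, const std::string& waveId, bool visible) {
    for (Layer& layer : layers) {
        if (layer.waveId == waveId) {
            layer.visible = visible;
            return true;
        }
    }
    return false;
}
```

With a game wave and a chat wave loaded, switching the chat layer on simply adds its items to what the game layer already contributes.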

Who?

This idea first sprouted from a paper I wrote focusing on the potential for IRC to be used for AR:

http://www.lostagain.nl/testSite/projects/Arn/AR_paper.pdf

I suggested near the end Wave might be a better alternative (using Google Wave was an idea Tish Shute, Ugotrade, brought up in response to the Arn prototype design on IRC), and it quickly became apparent that Wave was a very suitable medium.

Since then, there has been a lot of interest, and numerous people have offered to help.

In particular, the PyGoWave team has recently been helping us out, as they have an existing server supporting a client/server (c/s) protocol, which is currently being actively developed.

Where?

You can join the general discussion here:

Augmented Reality Wave Development

The technical side is here:

Augmented Reality Wave Framework Development

When?

There’s lots still to do, and we are at an early stage.

Our current targets: (last updated 11/12/2009)

- Getting reading/writing of prototype ARBlips to the PyGoWave server working. (The PyGoWave team have already made a standalone client and have the protocol for this sorted!)

- Establishing a minimal spec for ARBlips to be later expanded.

- Writing a very simple prototype online client showing how to store/retrieve the data.

- Expanding client to work for some use-cases.

- Establishing a logo/branding for the project.

Other FAQs.

Where’s the catch?

While we believe Wave is highly suitable for development, it has the drawbacks of being a new system with just a few servers worldwide, which (at the time of writing) have not yet been federated together.

Naturally, as a new technology, it’s likely to have some growing pains. And building a new technology on other new technology will multiply that somewhat. The first pain is the lack of a standard client/server protocol. PyGoWave have stepped in to the rescue a bit here, by being not just one of the most developed Wave servers other than Google’s, but also leaping ahead with support for JSON-based c/s interaction. Google has stated they want the community to take the lead on a c/s protocol, so we are hoping they will adopt a JSON variant, or an XMPP one, and add it to the spec. We hope that, in much the same way as POP3/IMAP have been a standard for email server interaction, a similar one will develop for Wave.

In the meantime we plan to keep the code for writing ARBlips somewhat abstracted so as to make it easy to adapt in future.
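One way to keep that code abstracted, sketched here purely as an illustration, is to have the application talk to a small interface and hide the actual client/server protocol behind it. The names below (`BlipTransport`, `InMemoryTransport`, `postComment`) are hypothetical and are not part of Wave or PyGoWave; the in-memory class stands in for whatever JSON or XMPP backend ends up being used.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical interface: the only thing application code is allowed to depend on.
class BlipTransport {
public:
    virtual ~BlipTransport() {}
    virtual void writeBlip(const std::string& blipId, const std::string& payload) = 0;
    // Returns false if the blip does not exist; otherwise fills payloadOut.
    virtual bool readBlip(const std::string& blipId, std::string& payloadOut) const = 0;
};

// In-memory stand-in, handy for tests. A real backend would speak a c/s protocol.
class InMemoryTransport : public BlipTransport {
public:
    void writeBlip(const std::string& blipId, const std::string& payload) override {
        store_[blipId] = payload;
    }
    bool readBlip(const std::string& blipId, std::string& payloadOut) const override {
        std::map<std::string, std::string>::const_iterator it = store_.find(blipId);
        if (it == store_.end()) return false;
        payloadOut = it->second;
        return true;
    }
private:
    std::map<std::string, std::string> store_;
};

// Application code sees only the interface, so swapping protocols later
// means writing one new BlipTransport subclass rather than touching callers.
void postComment(BlipTransport& transport, const std::string& blipId, const std::string& text) {
    assert(!blipId.empty());
    transport.writeBlip(blipId, text);
}
```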

As for the newness of Wave and the other potential problems it will bring, we aren’t that worried, as it’s built on XMPP, which has proved reliable already.

The other catch is that we are unfunded, which slows development down considerably as we have to fit it around our other jobs.

I’m making my own AR Browser, and am slightly interested in maybe supporting you.

We are naturally very keen for support, and particularly for those with skills and visions to give feedback on the proposed protocol. Specifically: what do you want stored in a blip?

That’s what’s important at this stage.

We don’t see the Arn as a replacement for existing browser systems at the moment. We don’t want to restrict innovation or development in this fast developing market as we are very impressed at what’s been achieved so far. In many ways our task is small in comparison to what’s already accomplished.

However, we do believe the Arn will make a good addition to existing browser systems. It will allow users to contribute data and have social features without having to worry about accounts or hosting.

It will still be quite some work to support; new GUIs will need to be developed to make it easy to submit data from the devices, as well as to login to waves.

However, we hope over time to build a set of example libs to make the reading/writing of ARBlips as easy as possible to implement in your software.

Perhaps a good way to think about it is that existing AR Browsers are like word processors: supporting the Arn will be like adding support for *.txt, and doesn’t limit what you can do with your own format.

Eventually we do hope ARBlips hosted on Wave will become the majority of AR data, and that its functionality will be analogous to what the internet is today. We truly believe that in the long run a standard is essential.

But for now we think merely getting a baseline format established for how AR data can be communicated will increase usability and usefulness, and help the market grow.

Can I help?

Sure.

We particularly need people with technical skills in relevant fields (both GWT/JavaScript web programming and C++/Qt standalone programming help are very welcome!).

But we also welcome people just with vision to help focus use-cases and to conceptualise what we want to be able to do with the system.

Please join either the relevant AR Waves or the Wiki.

We are especially interested in those with JSON and Comet experience, specifically those able to make standalone applications that read from and write to a server using these methods.

What type of data will an AR Blip store?

This is still actively being decided, but essentially it’s a physical hyperlink.

A connection between a physical location (or object, see below) and a piece of data.

Specifically, we are thinking about the following fields;

Location in X,Y,Z,

Coordinate System used for the above,

Orientation,

MIMEType [the type of data stored]

DataItself [either an http link for 3D meshes and other larger data, or an inline text string if it’s just a comment]

DataUpdateTimestamp [so clients know if it’s necessary to redownload]

Editors [the user/s that edited/created this blip]

ReferanceLink [data needed to tie the object to a non-fixed location, such as an image to align it to an object in realtime],

Metatags [to describe the data]
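To show how a client might work with a few of these fields, here is a small sketch in plain C++. It is not the ARBlip format: the struct, the function names, the key names, and the choice of a Unix-epoch timestamp are all assumptions made for the example. It covers three things the list implies: telling a link from inline text, deciding whether to redownload, and flattening the fields into string key/value pairs (the sort of shape that annotations or JSON could carry).

```cpp
#include <map>
#include <sstream>
#include <string>

// Hypothetical plain-C++ mirror of some of the proposed fields; every name
// here is illustrative and not part of the ARBlip spec.
struct ARBlipFields {
    double x, y, z;
    std::string coordinateSystem;
    std::string mimeType;
    std::string data;               // an http(s) link, or inline text
    long long dataUpdateTimestamp;  // assumed: seconds since the Unix epoch
};

// True when the data field looks like a link to remote content rather than inline text.
bool isRemoteData(const ARBlipFields& blip) {
    return blip.data.compare(0, 7, "http://") == 0 ||
           blip.data.compare(0, 8, "https://") == 0;
}

// A client only needs to fetch the data again if the blip is newer than its cached copy.
bool needsRedownload(const ARBlipFields& blip, long long cachedTimestamp) {
    return blip.dataUpdateTimestamp > cachedTimestamp;
}

// Convert a number to text for storage as a string value.
template <typename T>
std::string toText(const T& value) {
    std::ostringstream out;
    out.precision(15);
    out << value;
    return out.str();
}

// Flatten the fields into string key/value pairs (key names are assumptions).
std::map<std::string, std::string> toKeyValues(const ARBlipFields& blip) {
    std::map<std::string, std::string> kv;
    kv["X"] = toText(blip.x);
    kv["Y"] = toText(blip.y);
    kv["Z"] = toText(blip.z);
    kv["CoordinateSystem"] = blip.coordinateSystem;
    kv["MIMEType"] = blip.mimeType;
    kv["DataItself"] = blip.data;
    kv["DataUpdateTimestamp"] = toText(blip.dataUpdateTimestamp);
    return kv;
}
```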

Are you purely tying stuff to fixed geolocations?

Certainly not.

As part of the spec, we want people to be able to link data to dynamically moving objects, trackable by image or other methods.

The idea being that one day someone could link a piece of text or 3d mesh to an image on a t-shirt they are wearing, or perhaps link a dynamically updating twitter feed, or perhaps provide information on a product (based on its logo).

There’s a large number of possibilities for image-based linking alone, and that’s not even considering possibilities like linking RFIDs, or other forms of less precise but invisible binding data.

We need a lot of feedback from those companies already doing markerless tracking. What types of images do you need, ideally, to link a mesh to an object? Is one enough?

Summary of AR Wave Work to Date

Purpose: To provide an open, distributed, and universally accessible platform for augmented reality. To allow the creation of augmented reality content to be as simple as making an html page, or contributing to a wiki.

Specific Goal: To establish a method for geolocating digital data in physical space (or linking it to physical objects) using wave as a platform.

(For justification as to why we are using Wave see: our faq )

Wave as a platform

We are developing on the PyGoWave server at the moment, but the goal is to be compatible with all Wave servers.

PyGoWave has already achieved something important for the project: it is a Wave server with a working and well-documented server protocol. This already allows both standalone and web-based clients to interface with it. See - The PyGoWave Qt-Based Desktop Client

This is one of the reasons why we have chosen to develop for the Pygo server at this stage.

However, the overall goal of AR Wave is to have a framework compatible with all servers using the Wave Federation Protocol. As more wave servers get c/s protocols then ARblips (the data needed to geolocate objects) could be posted and retrieved from various servers using the same client software. For this a standard should emerge. Just as websites don’t have to be hosted on specific servers, neither should AR data need to be hosted on specific wave servers.

In order to reach our goal, there are a few very achievable steps involved – see below.

Feedback

We are still actively seeking feedback, so feel free to join the Wave discussions, and see the history of how the specifications of the protocol evolved. You can also read the justification for some of the choices already made. Note that a new discussion for AR DevCamp will begin at AR Wave: AR DevCamp Session.

This will, of course, only be the first draft of the specification, and it is sure to develop much in future.

The important thing now is to make working prototypes while maintaining flexibility.

So what do we need to do?

Steps:

* Establish the overall method – Done.

Each Wave will be a layer on reality which an individual or a group can create. Each Blip in this Wave refers to either a small piece of inline data (like text) or a remote piece of larger data (like a 3D mesh) as well as the data needed to pin-point it in either relative or absolute real space.

We call these blips ARblips. They are simply blips that store the data necessary to augment a single object onto a specific bit of reality.

It is up to the clients how they interpret and display the data. They could interpret it as a simple 2d list of nearby objects, or as an advanced 3D overlay, whereby multiple waves from different sources could be viewed at once. What’s important is that there is a standard way to link the digital data to the real world space.

* Establishing the specification for the ARblip – In progress

We have a good idea of what’s needed to be stored in an ARblip, and we have hammered out a rough format.

The data might be stored as blip-annotations, but this has yet to be finalised.

A rough outline of the type of data stored can be seen in the c++/qt header for ARblip data at the end of this document.

* Storing and retrieving these pieces of ARblip data on the PyGo server – In progress.

The PyGoWave team has made some excellent libraries that should make reading and writing data on the PyGoWave server straightforward for those with C++ skills.

This, however, is a really critical step, so more developers with C++ skills are very welcome!

* Making the above client mobile, and using a device’s GPS to place the data. – Not started.

The next step would be to port the code to a mobile phone and use its GPS input to post geolocated data and view what others have posted. This would be a fairly simple and not too useful app in itself. However, it would mark the first time anyone could post AR data and anyone could view it, all using open-source infrastructure.

As a bonus, because we are using wave infrastructure, the updates to any ARblip should appear in near realtime.

* To continue with the proof of concept, we would like to have simultaneous wave input from a PC and a mobile phone. – Not started.

For example, someone could place a pin using the Google Maps API and have that data posted to an ARBlip in a wave. Someone logged into that wave on their mobile device would then see the posted data appear.

Moreover, we hope that when the Google map pin is dragged about, the mobile phone viewer will see its location updated in near real time, with just a few seconds’ lag.

We hope to make a modest yet practical app at this stage.

* After all this, we can go onto the interesting things:

3D data, camera overlays, data fixed to objects and many more. There’s plenty of existing software using these features (such as Wikitude and Layar), some of it even open source (like Gamaray and Flashkit). The open source code can give us a leg-up. However, we prefer to establish the protocol first, so these fancy features aren’t a priority for us. Rather, we think our energy is better spent establishing the protocols and infrastructure so that other people can build more advanced software more easily.

However, once our primary goals are established, we will look to make an open source augmented reality browser ourselves, which will surely include many of these features.

Overall, we hope once we have a simple proof of concept, there will be many groups, both existing and new, wanting to use this Wave system for their own apps, games and data.
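The PC-and-phone step in the list above (a map pin dragged on one device, seen moving on another) boils down to subscribers being told when a blip changes. The following is a minimal in-process sketch of that idea with no networking at all; `SharedWave`, `subscribe` and `movePin` are invented names, and in the real system the Wave server would be the one relaying updates between clients.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <vector>

struct Position {
    double lat;
    double lon;
};

// Hypothetical in-process stand-in for a wave shared between several clients.
class SharedWave {
public:
    typedef std::function<void(const std::string&, const Position&)> Listener;

    // A client (e.g. the phone viewer) registers to hear about blip moves.
    void subscribe(const Listener& listener) {
        assert(static_cast<bool>(listener));
        listeners_.push_back(listener);
    }

    // Another client (e.g. the PC with the map) moves a pin; everyone is notified.
    void movePin(const std::string& blipId, const Position& newPosition) {
        for (const Listener& listener : listeners_) {
            listener(blipId, newPosition);
        }
    }

private:
    std::vector<Listener> listeners_;
};
```

In this sketch the notification is immediate; over a real network the few seconds of lag mentioned above would sit between `movePin` and the listener being called.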

Conclusion:

Really it’s now all about growing the community. We hope as soon as we show how great Wave can be for augmented reality, that lots of individuals and teams will start making their own clients to read/write geolocated data.

Overall we don’t think anything we make will be that impressive in itself. That’s not our goal.

We instead hope that our project will enable AR-content to be made as easily as web content. That games, information and apps will be able to be created without the creators having to worry about the infrastructure behind it.

Technical information -

Current ARBlip header file

(below is a c++/qt header file for an ARBlip object that should illustrate the data being stored)

#include <QMap>

#include <QString>

class arblip

{

public:

arblip();

~arblip();

arblip(QString,QString,double,double,double,int,int,int,QString);

QString getDataAsString();

QString getEditors();

QString getRefID();

QString getXAsString();

QString getYAsString();

QString getZAsString();

bool isFaceingSprite();

private:

//ID reference. This would be a unique identifier for the blip. Presumably the same as Wave uses itself.

QString ReferanceID;

//Last editor(s)

QString Editors;

int PermissionFlags = 0664; // default 664 octal = rw-rw-r--

//Location

double Xpos; // left/right

double Ypos; // up/down

double Zpos; // front/back

//Orientation

// names, ranges and directions are taken from aeronautics.

// If no orientation is specified, it’s assumed to be a facing sprite.

// Roll: rotation around the front to back (z) axis. (Lean left or right.)

// Range +/- 180 degrees with + values moving the object’s right side down.

int Roll;

// Pitch: rotation around the left to right (x) axis. (Tilt up or down.)

// Range +/- 90 degrees with + values moving the object’s front up. (Looking up.)

int Pitch;

// Yaw: rotation around the vertical (y) axis. (Turn left or right.)

// Range +/- 180 degrees with + values moving the object’s face to its right.

int Yaw;

bool FacingSprite; //if no rotation specified, this should default to true

//if set to true when a rotation is set, then it keeps that rotation relative to the viewer

//not relative to the earth.

//Data format

QString DataMIME;

QString CordinateSystemUsed; //The co-ordinate system used. This should be a string representing an Open Geospatial Consortium standard. This could be earth-relative for GPS co-ordinates, or in some cases relative to the viewer, for data to be displayed in a HUD-like style.

//Data itself

QString Data;

QString DataUpdatedTimestamp; //Time the Data was last changed

//Note: A separate timestamp should be used for updates that don’t affect the data itself.

//(such as if a 3d object moves, but its mesh isn’t changed)

//Data metadata

QMap<QString, QString> Metadata;

};
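Two details in the header lend themselves to small helper checks, sketched here in plain C++ without Qt. The header's comments describe the default permissions as 664 octal (rw-rw-r--) and give ranges for roll, pitch and yaw. In C++ an octal literal is written with a leading zero, so 664 octal is `0664`, which equals 436 in decimal. The function names below are made up for the example, and reading the flags as Unix-style permission bits is an assumption based on that comment.

```cpp
#include <string>

// Render the low nine bits of a Unix-style permission value as text,
// e.g. 0664 becomes "rw-rw-r--".
std::string permissionString(int flags) {
    const char symbols[] = "rwxrwxrwx";
    std::string out(9, '-');
    for (int i = 0; i < 9; ++i) {
        const int bit = 1 << (8 - i);  // highest bit first: owner read
        if (flags & bit) out[i] = symbols[i];
    }
    return out;
}

// Check an orientation against the ranges given in the header comments:
// roll and yaw within +/- 180 degrees, pitch within +/- 90 degrees.
bool orientationInRange(int roll, int pitch, int yaw) {
    return roll >= -180 && roll <= 180 &&
           pitch >= -90 && pitch <= 90 &&
           yaw >= -180 && yaw <= 180;
}
```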
