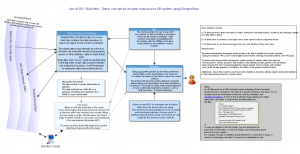

It is now nearly two weeks since the Google Wave preview launch, and I am happy to say we have some AR Wave news. The diagram above shows Thomas Wrobel’s basic concept for a distributed, multi-user, open augmented reality framework based on the Google Wave Federation Protocol and servers (the original post linked to a dynamic, annotated version of the sketch).

Even in the short time we have had to explore Wave, some very exciting possibilities are becoming clear. Thomas puts some of the virtues of Wave as an AR enabler succinctly when he writes:

“Wave allows the advantages of both real-time communication, as well as the advantages of persistent hosting of data. It is both like IRC, and like a Wiki. It allows anyone to create a Wave, and share it with anyone else. It allows Waves to be edited at the same time by many people, or used as a private reference for just one person.

These are all incredibly useful properties for any AR experience. Moreover, Wave is open. Anyone can make a server or client for Wave. Better yet, these servers will exchange data with each other, providing a seamless world for the user…a single login will let you browse the whole world of public waves, regardless of who’s providing or hosting the data. Wave is also quite scalable and secure…data is only exchanged when necessary, and will stay local if no one else needs to view it.

Wave allows bots to run on it…allowing blips in a wave to be automatically updated, created or destroyed based on any criteria the coders choose. Wave even allows the playback of all edits since the wave was created.

For all these reasons and more, Wave makes a great platform for AR.”

There will be much more coming soon on Wave-enabled AR, because Google Wave invites have now begun to flow out to a wider community. This week, many of our small ad hoc group looking at the development challenges and implications of Google Wave for AR actually got into Wave for the first time.

Many thanks to all the people who have contributed to this discussion so far including: Thomas Wrobel, Thomas K. Carpenter, Jeremy Hight, Joe Lamantia, Clayton Lilly, Gene Becker and many others.

We will be setting up some public AR Framework Development Waves this week. If you have any trouble finding them or adding yourself to them, please add Thomas and me to your contact list. I am tishshute@googlewave.com and Thomas is darkflame@googlewave.com. The first two are currently called:

AR Wave: Augmented Reality Wave Framework Development (developer forum)

AR Wave: Augmented Reality Wave Development (for general discussion)

The discussion so far has been in two areas. On the one hand, it is gear-heady and focused on the Google Wave Federation Protocol, code, development challenges, and interfacing to mobile, while on the other hand people have been looking at use cases and questions of user experience.

Distributed “shared augmented realities,” or “social augmented experiences” – that not only allow mashups and multisource data flows, but dynamic overlays (not limited to 3D), created by users, linked to location/place/time, and distributed to other users who wish to engage with the experience by viewing and co-creating elements for their own goals and benefit – are something very new for us to think about.

As Joe Lamantia puts it:

“There’s a feedback loop between which interactions are made easy by any given combo of device / hardware / software / connectivity, and the ways that people really work in real life (without any mediation / permeation by tech).”

Joe Lamantia, whose term “social augmented experiences” I borrow for this post’s title, has done some thinking about “concepts and models for understanding and contributing to shared augmented experiences, such as the social scales for interaction, and the challenges attendant to designing such interactions.” Check out Joe Lamantia’s blog for more on this later this week.

It is very helpful, as Joe points out, to shift the focus back and forth between the experience and the medium.

It is super exciting to have clear evidence that shared augmented realities are no longer merely possible, but highly probable and actually do-able now.

I should be absolutely clear about what Google Wave does to enable AR, because obviously Wave plays no role in solving image recognition and tracking/registration issues. But, for example, Wave protocols and servers do provide a means to exchange, edit, and read data, and that enables distributed, social augmented realities.

Thomas explains how the newly named “AR Blip” works:

“An AR Blip is simply a Blip in Wave containing AR data. Typically this would be the positional and URL data telling an AR browser to position a 3D object at a location in space.

In more generic terms, an AR Blip allows data of various forms (meshes, text, sound) to be given a real-world position.”
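To make Thomas’s description a little more concrete, here is a minimal sketch of what an AR Blip’s payload might contain. The field names (`lat`, `lon`, `alt`, `url`, `media_type`) and the choice of JSON as the text encoding are assumptions for illustration only; they are not a published AR Wave format, and the sketch does not touch any Wave API.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class ARBlip:
    """Hypothetical AR Blip payload: where to place a piece of media, and where to fetch it."""
    lat: float       # latitude in decimal degrees (WGS84 assumed)
    lon: float       # longitude in decimal degrees
    alt: float       # altitude in metres (reference datum is an assumption)
    url: str         # where the content lives (mesh, text, sound, ...)
    media_type: str  # e.g. "model/obj", "text/plain", "audio/mpeg"

    def __post_init__(self) -> None:
        # Reject positions that cannot exist on Earth.
        if not -90.0 <= self.lat <= 90.0:
            raise ValueError("latitude out of range")
        if not -180.0 <= self.lon <= 180.0:
            raise ValueError("longitude out of range")

    def to_blip_text(self) -> str:
        """Serialize to JSON text, so the blip stays human-readable inside a wave."""
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_blip_text(cls, text: str) -> "ARBlip":
        """Parse text produced by to_blip_text back into an ARBlip."""
        return cls(**json.loads(text))


blip = ARBlip(lat=51.5007, lon=-0.1246, alt=20.0,
              url="https://example.com/models/statue.obj", media_type="model/obj")
text = blip.to_blip_text()
assert ARBlip.from_blip_text(text) == blip
```

Keeping the payload as plain text would let any ordinary Wave client display or edit it, while an AR browser parses it; that trade-off is our assumption, not something the framework discussion has settled.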

I have mentioned in other posts (here and here) that Wave can be used for AR as precise or as loose as current-generation devices can handle. And as the hardware and software mature for the kind of AR that can put media out in the world to truly immerse you in a mixed space, the framework should be able to handle this too.

(a note on the Wave playback feature – this opens up a whole new world of possibilities. Check out this post on some of the implications of playback for writing!)

The use cases we have been coming up with are too numerous to go into in detail in this post. The open nature of an AR framework/Wave standard will lead to many new applications we have barely begun to imagine. As Thomas points out, different client software can be made for browsing, potentially allowing for various specialist browsers as well as more generic ones for typical use. The multitude of different kinds of data input/output that could be integrated in an open AR framework as it evolves is mind-boggling.

But, for now, some obvious use cases do come to mind, for example:

- Historical environmental overlays showing how a city used to be, and how this vision may be constructed differently by different communities

- Proposed building work showing future changes to a structure, and the negotiations over this future (both the public and professionals could submit their own comments on the plans in context); seeing pipes, cables and other invisible elements that can help builders and engineers collaborate and do their work.

- Skinning the world with interactive fantasies

I asked Thomas to help people understand how Wave enables new interactions with data by explaining how Wave could enable city sensing and citizen sensing projects (e.g. this one being pioneered by Griswold):

“Sensors, both mobile and static, could contribute environmental data to city overlays.

Having these invisible aspects of the world made visible would create ways to improve sustainability, social equity, urban management, energy efficiency, public health, and allow communities to understand and become active participants in the ecosystems and infrastructure of their neighborhoods.

The key is reflecting this kind of data back to people, “making it not back story but fore story,” right where we are, right where it happens, as well as having it available for analysis.

As well as creating new opportunities to interact with, respond to, and enhance data, making visible the invisible, as Natalie Jeremijenko’s work on Amphibious Architecture and Usman Haque’s project Natural Fuse show, can also create new connections and understandings between humans and the non-humans that share our world, e.g. fish, plants, waterways.

At a more prosaic level potential buyers of property could see more clearly what they are buying, city planners could see better what needs to be worked on, and environmental researchers could see more clearly the impact people are having on an area.

Also, Wave can provide some of the framework necessary to begin to address tricky problems of privacy. Sensitive data can be stored on private waves, e.g. medical data for doctors and researchers, but the analysis of the data could still be of benefit to all, e.g., if disease occurrences were tied to locations and the relationships between environmental data and health were…quite literally…made visible.

“The publication of energy consumption data, making it visible as overlays, could help influence the public into supporting more energy-efficient companies and businesses. It could also help citizens try to keep their own energy usage down, to keep their street in “the green.”

Thomas notes:

“With all of the above, it becomes fairly trivial to write persistent Wave bots that automatically send notice when certain criteria are met (pollutants over a certain level, for example). On publicly readable waves, anyone can use the data on their local computers, process it, and contribute results back on a new wave. Alternatively, persistent remote servers could run cron jobs, or other automated processing, using services such as App Engine to run Wave robots.

All these possibilities become “free” when using Wave as a platform for geographically tied data.”
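As a rough illustration of the kind of criteria check Thomas describes, the sketch below flags sensor readings that exceed a configured limit. The `Reading` structure, the pollutant names and the limit values are hypothetical and purely illustrative (they are not regulatory limits), and posting the resulting notices back to a wave is deliberately left out, since that would depend on the Wave robot API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    """One sensor value, as a bot might read it from a public data wave (hypothetical format)."""
    sensor_id: str
    pollutant: str  # e.g. "NO2", "PM2.5"
    value: float    # concentration, in the same unit as the configured limits


def check_thresholds(readings, limits):
    """Return a notice for every reading above its pollutant's limit.

    `limits` maps pollutant name -> maximum acceptable value; pollutants
    without a configured limit are ignored. A real bot would post these
    notices as new blips; here they are simply returned as strings.
    """
    notices = []
    for r in readings:
        limit = limits.get(r.pollutant)
        if limit is not None and r.value > limit:
            notices.append(f"{r.sensor_id}: {r.pollutant} at {r.value} exceeds limit {limit}")
    return notices


# Illustrative numbers only.
readings = [
    Reading("street-12", "NO2", 45.0),
    Reading("street-12", "PM2.5", 30.0),
    Reading("park-3", "NO2", 12.0),
]
limits = {"NO2": 40.0, "PM2.5": 25.0}

for notice in check_thresholds(readings, limits):
    print(notice)
```

The same function could run from a cron-style scheduler or inside a robot’s event handler; keeping the check separate from any posting code makes it easy to reuse either way.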

But of course this is just the beginning!

Recently, I talked at length with Jeremy Hight, who has for quite some time been thinking about, designing and creating shared augmented realities that anticipate the kind of dynamic, real-time, large-scale architecture we now have available through Wave. This is exciting stuff.

Modulated Mapping: Talking with Jeremy Hight about Layers, Channels and Social Augmented Experiences

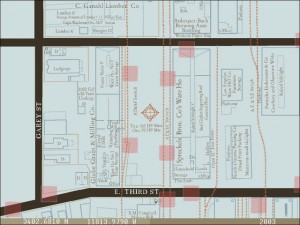

image from Volume Magazine (Hight/Wehby)

Tish Shute: I know you have been involved in locative media from its early days. Perhaps we can talk about how AR continues the locative media journey?

Blair MacIntyre gave me this distinction recently: “AR is about systems that put media out in the world, and immerse you in a mixed space. Even the current “not really registered” mobile phone AR systems are still “sort of” AR (e.g., Layar, etc).

Locative media/ubicomp/etc are very different, in that they tend to display media on a device (phone screen) that is relevant to your context, but does not attempt to merge it with the world.

The difference is significant, and making it clear helps people think about what they do and what they want to do, with their work. The locative media space though points toward future AR systems (when the technology catches up!).”

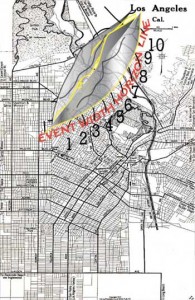

Jeremy Hight: The need is to finish the arc that locative media and early AR have started, and to now truly return to the map itself, but as an internet of data, interactivity and channels of data; with end-user options like analog machines once had, but in high-end tools; with a smart, AI-ish ability to cull data for the user; and with social networking in real-world places on the map, both in building augmentation and in using and appreciating it. Not hacks, which have their place, but a rhizome: a branched system with a shared root, end-user adjustable and variable. This is the key.

This takes AR and mapping and makes possible a world of channels in space, and this eventually can be a kind of net we see in our field of vision, with a selected percentage of visual field and placement: a geospatial net, a local-to-worldwide fusion of LM into a tool and an educational tool.

VR [virtual reality] has greatly advanced, but in nodes, as it has limitations… LM [locative media] is the same… AR [augmented reality] is the way. It now has locative elements and aspects of VR integrated into its functionality and nodes… it is the best option with all of these elements, greater hybridity and data-level potential, as well as end-user and community sourcing potential.

I wrote an essay for Archis’ Volume, the architecture magazine, on a near-future sense of some of this… a visual net on the lens, like AR, but with smart objects and social networking and dissent.

I also wrote of these things (immersive graphic design, spatially aware museum augmentation, education through AR and LM, and a nod to the base interface of eye to cerebral cortex in layered and malleable augmentation) in my essay “Immersive Sight” a few years back.

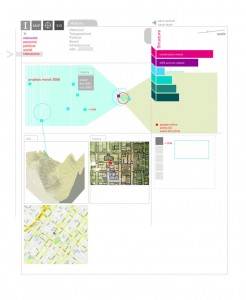

The image above is a simple illustration of a possible example, on a screen or in front of the eye, where in a Mondrian show the graphic design of information actually builds as one moves

(the key is calibrated spatial intervals and related layers of further augmentation, which are logical due to location and proximity)

From “Immersive Sight,” on immersive graphic design: “The design can work with this in a way that creates an interactive supplemental set of information that is malleable, shifts based on location, builds and peels away as one moves closer to a work, and plays with the forms of the works and the elements of the space itself. The sequence can contain many different elements and their interplay (both in the field of vision and in terms of context and layers of information). This is the model of sections of augmentation turning on and off at key points as individual spatial and conceptual moments and nodes.

Another interesting possibility is that individual points of augmentation don’t turn off, but instead are designed to build as one moves toward a specific part of the exhibit. The design can work in a sequence, both content-wise and visually, in terms of a delay-powered compositional development and style in which each discrete layer of text and image does not fade out, but builds on the others into a final composition. This can form paintings similar to Mondrian’s, perhaps, if it is a show of similar works of that era, or it can form something much more metaphorical and open to interpretation of the space and content, while utilizing a sense of emergence spatially in terms of the composition (pieces laid bare until final approach for effect).

Each section will be well designed, but they build in layers as one moves, until finally forming the final composition, both visually and in terms of scope of information or building immediacy. The effect can be akin to taking a painting and slicing it into onion-skin layers laid out in the air at intervals, each the same dimensions, but only one section compositionally of the greater whole. This has many semiotic applications beyond its aesthetic potential, and as spatialized information possessing a sense of interrelationship as one moves.”
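The two models in this passage (sections switching on and off at key points, versus layers that accumulate as one approaches) can be sketched as a simple distance test. This is a hypothetical illustration, not code from “Immersive Sight”: the `Layer` type, the trigger distances and the layer names are all assumptions, and an actual AR exhibit would also need the tracking and registration that Wave does not provide.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    name: str
    trigger_m: float  # the layer switches on once the viewer is within this many metres


def visible_layers(layers, distance_m, cumulative=True):
    """Return the layers to draw at a given distance from the work.

    cumulative=True: every layer already crossed stays on, so slices build
    into the final composition (farthest-triggered slice first).
    cumulative=False: only the most recently crossed section shows, the
    "turning on and off at key points" model.
    """
    crossed = [layer for layer in layers if distance_m <= layer.trigger_m]
    crossed.sort(key=lambda layer: layer.trigger_m, reverse=True)
    return crossed if cumulative else crossed[-1:]


# Hypothetical onion-skin slices of one composition at calibrated intervals.
slices = [
    Layer("grid lines", 12.0),
    Layer("red block", 8.0),
    Layer("blue block", 4.0),
    Layer("caption", 1.5),
]

for d in (15.0, 9.0, 3.0, 1.0):
    print(d, [layer.name for layer in visible_layers(slices, d)])
```

The calibrated intervals live entirely in the `trigger_m` values, so a designer could tune the pacing of the composition without changing the logic.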

Tish Shute: One of the things I found very inspiring when I read your papers was that your ideas are not all dependent on a model of AR that would necessarily require goggles, backpacks and lots of CPU/GPU – not that that wouldn’t be nice – but that even using “magic lens” AR of the kind smart phones have enabled, in an open distributed framework, would open up a lot of new possibilities for what you call modulated mapping, wouldn’t it? What kind of social augmented realities might be enabled by a distributed infrastructure like this [AR Wave]?

Jeremy Hight: Right… I see that as way down the road… most important is the one you talk about, as it is more immediate and thus more essential and needed. Eventually the goggles will be like a contact lens, and a deep, immersive AR version of this will come, that to me is certain, but a ways down the road. An incredible amount is possible now, and this is a more pragmatic move, as opposed to the more theoretical question of what is a few steps from here. Thus it is more important and essential now. Tools like Google Wave are taking what even two years ago were more theoretical discussions of what may be, and instead introducing key elements of a more immediate, powerful, flexible level of augmentation. What have been hacks and isolated elements are to be integrated with social networking, task completion, shared tools, graphics building and geolocation.

Tish Shute: I think some people question what augmented reality has to bring to the continuum of location based experiences that other forms of interface/mapping do not?

Jeremy Hight: Right… and the schism between its commercial flat self and tests with physics, etc., and everything in between… there are a lot of unfortunate assumptions, it seems, as to where AR and LM cross, and how AR can be many things beyond deep immersion or the opposite pole of a hockey puck having a magic purple line, etc… just as LM is seen as either car directions or situationist experiments with deep data… the progression to me is deeply organic… and now augmentation can be more malleable, variable and end-user controlled.

Tish Shute: Yes, it is a really exciting time for AR. Historically, AR research has gone after the hard problems of image recognition, tracking and registration, and because we have not had dynamic, real-time, large-scale architectures like Wave available to us (until now!), less work has been done on exploring the possibilities for distributed AR fully integrated with the internet and the WWW, hasn’t it?

A distributed augmented reality framework such as we have envisaged on Wave would allow people to see many layers from many different people at the same time. And this kind of model has been part of your thinking and fundamental to your work for a while, hasn’t it? But it is a very new idea to most people to think about collaboratively editing layers on the world, and being able to view augmented space through channels and networked communities. Could you explain some of the ways you have explored these ideas, and how they could be explored further now to create meaningful experiences for people?

Jeremy Hight: Right, exactly… modulated mapping to me can be an amazing tool for students: back-end searching for data visualizations and augmentations based on their needs, while they do something else on their computer or iPhone… that can be amazing, and not deeply immersive. The map can be active, malleable, open source fed, and even, in a sense, intelligent and able to adapt. The possibility also exists for this map to have a function that, based on keywords, will search databases online to find maps, animations, histories, stories, etc. to place within it for your study and engagement. The map is thus a platform, and yet is active. Community is possible, as people can communicate graphically in works placed on the map and in building mode in the tool. All the tropes of locative media are to be in a mapping system of channels of augmentation and a spatial net. The software by design will allow development on the map and communication like programs such as Second Life, but in mapping itself.

image from Parsons Journal of Information Mapping Volume 2 (Hight/Wehby)

I wrote an essay a few years ago for the Sarai Reader questioning the traditional map and its semiotics and the need to reconsider it, then did work looking into it and what those dynamics were, and those works got into two group shows in museums in Russia… so it actually was my arc toward modulated mapping… an interesting way to get to it! But yes, the map itself… this is a huge area of potential, and of navigation that is not screen-based alone, etc. I see now that my two dozen or so essays on LM, AR, interface design and augmentation have all been leading in this direction for about 10 years now.

Tish Shute: I love immersive visualization, but can we “return to the map – the internet of data,” as you mentioned earlier, and produce interesting augmentation experiences that go beyond locative media’s device display mode without goggles, for example, through the magic lens of our smart phones?

Jeremy Hight: Yes, absolutely. The map in the older paradigm is an artifice born often of war and border dispute, and not of the earth itself and its processes… the new mapping, like Google Maps, is malleable, can be open source, can read spaces, and can be layers of info in the related space, not plucked from it as in the past… this is amazing. The old map also was born of false semiotics/semantics like “discovery of new lands” or “pioneer,” while the places were there already and names often were of empire… now this is no longer the case.

Tish Shute: So is geoAR a better way to express a new social relationship to mapping? And how does this fit into the arc of locative media as it evolves into augmented reality?

Jeremy Hight: Early LM was mostly geocaching and drawing with GPS… it took new paradigms to invigorate the field. A lot of folks focus on tools and what already is; cross-pollination can ground ideas that are more radical… a metaphor, in a sense, to place what can be in a familiar context.

Tish Shute: One of the great disappointments in VR has been its isolation from networked computing, and the same has been true, up to now, of augmented reality: to achieve an immersive experience with tight registration of media/graphics, you have had to create a separate system isolated from the internet and the power of the web.

Jeremy Hight: Yes… this will change. VR is to me an island, but AR takes a part of it and shifts the paradigm, and new things open this way. Do you know the project “Life Clipper”? Friends of mine… doing interesting things… they are a clear bridge between LM and AR… and from VR.

In AR, augmentation and what is being augmented become fused, or in collision, or in complex interactions, as a means to a larger contextualization and exploration of what is being augmented… this is true in immersive or non-immersive AR… huge potential.

VR is a space, and it can now be used in surgery, which is amazing, but it is not layered interaction, thus an island. And graphic iconography on a location can use symbolic icons, which opens up even more layers (the graphic designer/information designer in me talking there, I suppose…).

Tish Shute: Yes! Talk to me more about layers and channels. I think this is one of the most interesting questions in augmented reality for me at the moment: what can we do with layers and channels, and with the new possibilities for connections between people and environments that these can create?

The ability for anyone to post something is critical to the distributed idea, but one of the reasons I am so excited by Google Wave is that I am fascinated by the playback function. How do you think this will enable new forms of collaborative locative narratives? (There is a nice post on Wave playback here.)

Jeremy Hight: We are in an age of cartographic awareness unseen in hundreds of years. When was the last time that new mapping tools were sold in chain stores and installed in most vehicles? When was the last time that the augmentation of maps was also done by millions (Google Maps hacks, etc.)? The ubiquitous GPS maps run in automobiles while people post pictures and graphic pins to denote specific places on online maps.

The need is for a tool that combines all of these new elements into an open source, intuitive, layered and rhizomatic map that is porous (like pumice, organic in form yet with “breathing room”), ventilated (i.e. adjustable, a flow in and out), and open (open source, open access, open spatialized dialog).

I wrote of this in my essay “Revising the Map: Modulated Mapping and the Spatial Interface” (http://piim.newschool.edu/journal/issues/2009/02/pdfs/ParsonsJournalForInformationMapping_Hight-Jeremy.pdf).

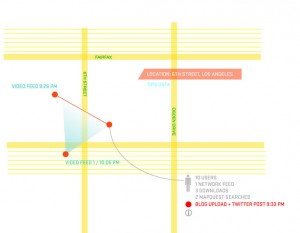

image from Parsons Journal of Information Mapping (Hight/Wehby)

Tish Shute: One mapping project I really like is Mannahatta. How could distributed AR contribute to a project like Mannahatta?

Jeremy Hight: That is a good example… imagine taking Manhattan and having channels of options to overlay, that being an excellent option, and imagine being able to even run a few at once with delineating icons… you can augment a space with history, data, erasure, narrative, scientific analysis, a timeline of architecture, infrastructure, archaeological record, etc.… endless possibilities, and this agitates place, and place on a map, into an active field of information with end-user control… and open options for new layers.

Tish Shute: And do you think we could do interesting things with AR on a project like Mannahatta even with the current mediating devices we have available, i.e. our smart phones? Obviously the rich PC experience Mannahatta has built for its web interface would not be available as AR at this point.

Jeremy Hight: Yes… K.I.S.S., right? These projects do not have to be only immersive and graphics-intensive… take how people upload photos onto Google Maps… just make that one of a menu of options. There are some pretty cool hacks already…

…options are key. A space can have a community as well, building on it in software, and others navigating it. I see it in the near future and down the road… always have with AR, really.

image from Volume Magazine (Hight/Wehby)

Jeremy Hight: And yes, a lot of people focus on AR’s limitations and processing-power needs as a major roadblock.

Tish Shute: so do you see AR on smart phones adding any value to a project like Mannahatta?

Jeremy Hight: Yes… it can be integrated into other similar works, and even disparate but cloud-linked ones… so a place can be “read” in different ways on the iPhone, beyond its map location, and more can be possible if you are there… others away, so it becomes channels of augmentation.

Tish Shute: AR, like locative media, puts who you are, where you are, what you are doing, and what is around you center stage in the online experience, but it also “puts media out in the world.” People, I think, understand this well as a single-user experience, but we are only just beginning to think about how this will manifest as a social experience. Could you explain more about modulated mapping as an experience of social augmentation?

Jeremy Hight: Modulated Mapping is a tool that will allow channels to be run along the map itself. This will allow one to view different icons and augmentations, both as systems on the map and in deeper layers of information (photos, videos, animations, visualizations, etc.), that can be turned on and off as desired. The different layers of icons and data may be history, dissent, artworks, spatialized narratives, and annotations developed communally based on shared interests, placed spatially and far beyond. Chat functionality in text or audio will be open in building mode and in mapping navigation/usage as desired. This also allows a community to develop or augment in the spaces on the earth. These nodes can be larger and open, or small and set by groups in their channel. The end result is an open source sense of mapping that will also have a needed sense of user control, as one can select which layers of augmentation they wish to see and interact with at any time. It also will incorporate all the functionality of locative media in mapping software and mapping. In building mode and in map mode, icons will be coded to represent their channels (remember that the person using it has selected channels of augmentation from many, based on their current interests and needs). Icons will be coded as active to show work in progress in cities and across the globe, both to invite participation and to further agitate the map from the sense of the static, as action is visible even in its icons while people are working and community forms around a common interest/need.

Locative media got a buzz for “reading” places… when I helped create locative narrative, that was what blew me away back in 2001: that we could give places a voice by placing data from research and icons on a map… this meant lost history or augmentation was possible as a kind of voices of a place and its layers… I called it “narrative archaeology.” We now have tools that can push these ideas and concepts farther, much farther, and with a range beyond what was before. Then the map was just a tool, but now we are returning to the map itself, and to this as place as much as marker… this is where AR takes the ball, to use a bad metaphor.

Also, that project could only work if you came to our spot, a four-block augmentation, with us there to lend you our gear… we are far beyond that now, but it had its place.
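The channel model Jeremy describes for Modulated Mapping, where users pick which layers of augmentation they see and work in progress is flagged with its own icon, could be sketched roughly as a filter over map items. The `MapItem` type, the channel names and the icon convention here are hypothetical, chosen only to illustrate the idea; they are not taken from his essay or from any existing mapping API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MapItem:
    """A hypothetical augmentation placed on the map within one channel."""
    title: str
    channel: str          # e.g. "history", "dissent", "artworks"
    active: bool = False  # True while a community is still building it


def render_view(items, selected_channels):
    """Return (icon, title) pairs for items in the user's selected channels.

    Items in unselected channels are hidden; work in progress gets a
    distinct icon so activity stays visible on the map.
    """
    view = []
    for item in items:
        if item.channel in selected_channels:
            icon = f"{item.channel}:active" if item.active else item.channel
            view.append((icon, item.title))
    return view


items = [
    MapItem("Old shoreline", "history"),
    MapItem("Rezoning protest", "dissent", active=True),
    MapItem("Mural walk", "artworks"),
]
print(render_view(items, {"history", "dissent"}))
```

Filtering on the client keeps the user in control of what they see, which matches the emphasis on end-user selection; a distributed system might instead subscribe only to the selected channels so unwanted layers never travel at all.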

Tish Shute: How do you see “in context” AR and something we might call “context aware” cloud computing models interacting?

Jeremy Hight: Sure… and I must add that I have issues with cloud computing, as much as it is a good idea...

Tish Shute: because of loss of autonomy?

Jeremy Hight: TiVo is simply a hard drive… but it reads keywords and gives suggestions… that is the Cro-Magnon link to what can be.

Tish Shute: The nice thing about Wave is that, because of the Federation model, the cloud model and local, store-your-own-data models should work together.

Jeremy Hight: Yes… that is better… loss of autonomy also opens up the arbitrary, which is the flaw of search engines as we know them… even Bing fails, to me, in that sense.

Tish Shute: how do you mean, could you explain?

Jeremy Hight: Spiders cull from words, but cull like trawlers at sea… I tested Bing with very specific requests… it spat out the same mass of mostly off-topic results…

I wonder if there is a way to cull from keywords and topics from a user… not an Orwellian back end, of course… but from their preferences, their searches, etc.

Tish Shute: Did you see the discussion on search in the AR Framework doc? AR search will be a massively important thing that will take a lot of intelligence and all sorts of algorithm development, won’t it?

Jeremy Hight: It also has one area of key functionality that moves into more intuitive software. Upon continued usage, the mapping software will “learn” and search based on keywords used and spheres of interest the user is mapping or observing as mapped, and will integrate deeper data and types of animations, etc. into the map, or will have them waiting to be integrated upon user approval, as desired. Over time the level of sophistication of additions and of search intuition will increase dramatically. The search can also, if the user wishes, run in the back end while working in the mapping program, or in off time as selected while doing other tasks. It also can never be used if one is not interested. One of the key elements of this mapping is that it is not composed of a closed set, nor does it need user hacks to augment; instead it is to evolve and deepen through user controls and desires, as designed. Pre-existing data, visualizations and augmentations can be integrated with relative ease.
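One very simple way to approximate the “learning” search Jeremy describes is a keyword-frequency profile that ranks candidate additions and only queues them for the user’s approval rather than placing them automatically. This is a hypothetical sketch using plain term counts, a deliberately naive stand-in: his essay does not specify an algorithm, and the `InterestProfile` class and sample tags are illustrative assumptions.

```python
from collections import Counter


class InterestProfile:
    """Naive keyword-frequency profile (hypothetical sketch, not a published algorithm)."""

    def __init__(self):
        self.counts = Counter()

    def record(self, *keywords):
        """Remember keywords the user searched for or mapped."""
        self.counts.update(k.lower() for k in keywords)

    def score(self, tags):
        """Sum of how often each tag appears in the user's history (unknown tags count 0)."""
        return sum(self.counts[t.lower()] for t in tags)

    def suggest(self, candidates, limit=3):
        """Rank candidate additions (title -> tags) for the user to approve.

        Candidates that match nothing are dropped; ties are broken by title
        so the order is deterministic.
        """
        scored = [(self.score(tags), title) for title, tags in candidates.items()]
        scored = [pair for pair in scored if pair[0] > 0]
        scored.sort(key=lambda pair: (-pair[0], pair[1]))
        return [title for _, title in scored[:limit]]


profile = InterestProfile()
profile.record("river", "history", "Los Angeles")
profile.record("history", "architecture")
candidates = {
    "1938 flood map": ["river", "history"],
    "Freeway timeline": ["architecture", "history"],
    "Cat videos": ["cats"],
}
print(profile.suggest(candidates))
```

Returning suggestions instead of inserting them mirrors the “waiting to be integrated upon user approval” behaviour; anything more sophisticated (weighting recent interests, semantic matching) is beyond this sketch.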

Tish Shute: One of the things that Joe Lamantia points out about social augmented experiences is that they will operate across a number of different scales – conversation > product design & build team > neighborhood / town fixing potholes > global community for causes. How do designs for channels and layers change across these different social scales?

Jeremy Hight: To quote myself: “The ‘frontier’ is often defined as the space just ahead of the known edge and limit, and where it may be pushed out deeper into the previously unknown. The frontier in the world of ideas is not the warm comfort of what has been long assimilated; and the frontier in the landscape is not of maps, but of places beyond and before them.

The border along what has been claimed is not only that of maps – it is of concepts, functions, inventions and related emergent industries. Ideas and innovations are like the cloud shape that briefly forms around a jet breaking the sound barrier, tangible yet not fully mapped into measure. It is when things are nailed down into specific entities, calibrated and assessed, that the dangers may inflict themselves – greed, competition, imitation, anger, jealousy, a provincial sense of ownership either possessed or demanded” (from my essay in the Sarai Reader). Otherwise, channels and augmentation do not have to be socio-economically stratifying or defined by them. We built 34n [34 North 118 West] for almost nothing on older tools.

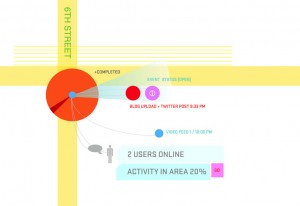

image from 34north 118west (Spellman/Hight/Knowlton)

AR that is not deeply immersive can be more readily available, and channels can be whatever end users need, like the diversity of chat rooms or the range of Facebook users among us.

I had two moments yesterday that totally fit what we talked about. I went to the West Hollywood Book Fair, and the traditional driving directions we got from mapping were wrong, and we got lost… our friend could only get a wireless signal to map on an iTouch, and we had to roam neighborhoods; then we called a friend who Google-mapped it, and we found we were a block away… so a fast geomapping overlay with an icon for the book fair on some optional grid service or community would have made it immediate. Then at the book fair I talked to a small-press publisher who is trying to map works about Los Angeles by Los Angeles authors… she was stunned when I told her it could be a kind of Google Maps feature option.

It also has great potential to publish and place writing and art in places, both for commentary and for access. Imagine reading Joyce chapter by chapter in the places where it was written about, and then a similar experience with writers who published into their own city on such a service.

Tish Shute: The challenge of shared augmented realities is not just a matter of shipping bits around, but also of how we will use channels and layers to create and negotiate different, distributed perspectives, and to understand a shared common core or expressions of dissent (this came up in an email conversation with Simon St Laurent).

Jeremy Hight: Well, my example earlier could have been communal in a way too: a tribal sort of augmentation channeling, like subscribing to listservs back in the day, but to augmentation communities and channels, for folks to build and use in shared live form, coordinating too.

Tish Shute: One good thing, though, about building an open AR framework is that as bandwidth, CPUs, and hardware get better, shared high-definition immersive experiences could be supported by the same framework.

Jeremy Hight: Excellent.

Tish Shute: Were you thinking of image recognition and tracking with this example?

Jeremy Hight: Yeah, like scanning across a multi-channel Google Maps augmentation with different icons and their connected data, and possibly social networking and file sharing even in that mode, and rastering, etc. It could be cool with Google Wave on the map, then zooming in à la Powers of Ten (the Eames film).

I have pictured variations of this in my head for a few years now, like the example of my friends and me yesterday: we could have correlated a destination by icons in different channels, one being literary events within a literature channel on an L.A. map, maybe with things streaming on it too, remote info and video, etc. That would be awesome.

Tish Shute: So many of the ideas in your paper on modulated mapping are brilliant use cases for shared augmented realities. Perhaps you could talk more about your ideas on locative narrative? I think this is at the core of the kinds of experiences that a distributed AR framework would make possible.

Jeremy Hight: On the project “34 North 118 West” we mapped out a four-block area for augmentation with sound files triggered by latitude and longitude on the GPS grid, and the map on the screen had pink rectangles marking the “hot spots” where the augmentation had been placed.

Image of the interactive map for 34 North 118 West, with map-based augmentation connected to on-site audio augmentation (Spellman/Hight/Knowlton)
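The hot-spot mechanism described above can be sketched in a few lines. This is a minimal Python illustration under stated assumptions, not the project's actual 2001 code: it assumes each hot spot is an axis-aligned latitude/longitude rectangle, and the `HotSpot` and `HotSpotPlayer` names, coordinates, and file names are hypothetical placeholders. A clip fires once on entering a rectangle and re-arms only after the listener leaves it, which echoes the resetting behavior Jeremy calls the “bowling alley conundrum.”

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class HotSpot:
    """A rectangular trigger zone, like a pink rectangle on the on-screen map."""
    name: str
    south: float  # minimum latitude (degrees)
    north: float  # maximum latitude (degrees)
    west: float   # minimum longitude (degrees)
    east: float   # maximum longitude (degrees)
    audio_file: str

    def contains(self, lat: float, lon: float) -> bool:
        # Simple bounding-box test; adequate for a few city blocks,
        # but it ignores the antimeridian and non-rectangular zones.
        return self.south <= lat <= self.north and self.west <= lon <= self.east


class HotSpotPlayer:
    """Fires a sound file once each time the listener enters a hot spot."""

    def __init__(self, spots: Iterable[HotSpot]):
        self.spots = list(spots)
        self.current: Optional[HotSpot] = None

    def update(self, lat: float, lon: float) -> Optional[str]:
        """Feed one GPS fix; return an audio file only on entry into a spot."""
        spot = next((s for s in self.spots if s.contains(lat, lon)), None)
        entered = spot is not None and spot != self.current
        self.current = spot  # leaving a spot (spot is None) re-arms it
        return spot.audio_file if entered else None


# Hypothetical coordinates and file name, for illustration only.
player = HotSpotPlayer([
    HotSpot("site_a", 34.0500, 34.0510, -118.2410, -118.2400, "site_a.mp3"),
])
print(player.update(34.0505, -118.2405))  # site_a.mp3 (entered)
print(player.update(34.0506, -118.2404))  # None (still inside)
print(player.update(34.0600, -118.2500))  # None (outside)
```

Because each zone fires only on entry, walking through the four blocks plays each clip once per visit rather than looping it while the listener lingers.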

We researched the history of the area and placed moments in time of what had been there at specific locations. I called this “narrative archaeology,” as it allowed places to be “read” through their augmentations: information about the place beyond the immediate experience (different types of information) that otherwise would be lost or found only in books or on websites elsewhere. There are now locative narratives around the world, but they need to be linked. From humble origins, “narrative archaeology” was recently named one of the four primary texts in locative media, which is pretty amazing to me... but it is growing.

The limitations then were what I called the “bowling alley conundrum”: the specific data had to reset like pins, and it was isolated. This led me to think about AR, back then and up to now: how these works could lead to much more from that point, with data that would be more layered, variable, and fluid, yet still augment place and sense of place, with social networking within the data and software.

LifeClipper, to me, is a bridge.

Tish Shute: But LifeClipper is currently isolated from the internet, isn’t it?

Jeremy Hight: Yes, ours was too. That is what Google Wave makes possible. Our project only ran on our gear, in four blocks, with additional auxiliary info online, and it was not malleable... but hey, it was 2001 and all.

Tish Shute: So the sites for 34 North 118 West are still active, though?

Jeremy Hight: Oh yeah!

Tish Shute: Nice. I really like sound augmentation. Have you seen Soundwalk?

Jeremy Hight: Yes, very cool. We chose sound only, as it countered the power of the image and instead caused a person to be, in a sense, in two places and times at once.

Tish Shute: And in 2001 that was definitely a visionary project!

You must be very excited that finally the pieces are coming together to make this stuff scale!

Jeremy Hight: I can’t even tell you! It is funny. I have known that this would come; I just waited and waited...

...I knew it needed the right people and tools.

So the bowling alley conundrum led me to develop my project shortlisted for the ISS (International Space Station), as I thought a lot about how points and works should not be isolated but connected, flowing in different parts of a map, to open up perspective and connected augmentations, but also to think about the map again, not only as a base. Then it moved into my work on new ways to visualize time, and it all really began to gel. The ideas were first published as an essay (http://www.fylkingen.se/hz/n8/hight.html) and later on my project blog (http://floatingpointsspace.blogspot.com/).

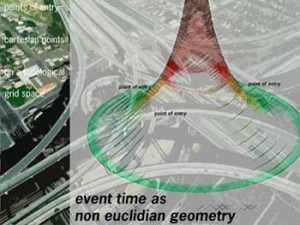

Tish Shute: One thing I noticed when I was reading your paper is that you have been exploring non-Euclidean geometries. Could you explain how this is part of your idea of modulated mapping?

Jeremy Hight: Yes, this first came to me when my wife was reading to me from a book on the Poincaré Conjecture and I was hit with a new way to measure events in time. After months of sketches, schematics, and research, I came to see how it could also be connected to a geospatial web of projects and augmentations. It was published in the inaugural issue of Parsons School of Design’s Journal of Information Mapping, which was an exciting fit. I call it “Immersive Event Time” (http://piim.newschool.edu/journal/issues/2009/01/pdfs/ParsonsJournalForInformationMapping_Hight-Jeremy.pdf).

So for the last three years I have been working on how it could all work as channels of augmentation, with building and navigation open and communal in a sense, as well as AI capability. That was the time work especially: how time as experienced within an event is not a time “line” but points on and within a form, and how this model is better for visualizing and documenting events in time. It actually sprang from reading that book on the Poincaré Conjecture, which popped a bunch of other things together so that one could visualize an event in time as being in the belly of a whale, with time as the ribs and our measure of time as the skin, and moving within it. I am hoping this will be used as an educational tool.

And this also can be tied to AR and the map again: documentation of important events can be kept within icons on a Google Map, and then one can download varying visualizations based on bandwidth and desired format.
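Downloading different visualizations of the same event depending on bandwidth and format amounts to adaptive rendition selection. A minimal Python sketch follows, under stated assumptions: the `Rendition` type, the `pick_rendition` function, the format labels, and the bandwidth figures are all hypothetical, and a real client would measure its connection rather than take the bandwidth as a parameter. It picks the richest rendition the connection can carry, honoring a preferred format when that format is affordable.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Rendition:
    """One visualization of an event attached to a map icon (hypothetical)."""
    fmt: str        # format label, e.g. "text", "image", "3d"
    min_kbps: int   # bandwidth this rendition needs to load comfortably


def pick_rendition(renditions: List[Rendition], kbps: int,
                   preferred: Optional[str] = None) -> Optional[Rendition]:
    """Choose the richest rendition the connection can carry.

    If the user prefers a format and it fits the bandwidth, use it;
    otherwise fall back to the heaviest affordable rendition.
    """
    affordable = [r for r in renditions if r.min_kbps <= kbps]
    if not affordable:
        return None
    if preferred is not None:
        matching = [r for r in affordable if r.fmt == preferred]
        if matching:
            return max(matching, key=lambda r: r.min_kbps)
    return max(affordable, key=lambda r: r.min_kbps)


# Hypothetical renditions for one event icon.
event = [Rendition("text", 10), Rendition("image", 200), Rendition("3d", 2000)]
print(pick_rendition(event, 500).fmt)           # image
print(pick_rendition(event, 5000, "text").fmt)  # text
```

Falling back to the heaviest affordable rendition, rather than failing, keeps the icon useful on a weak mobile connection.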

Tish Shute: What I have been thinking about is the new forms of social interaction and agency that these kinds of augmentations of space, place, and time will create. It seems there are two poles. One is the area Natalie Jeremijenko explores: shifting social relations from institutions and statistics to real-time, location-based interactions and new forms of social agency. The other pole, completely different, is more like cloud-based AI and perhaps crowdsourced machine learning.

Your ideas explore the possibilities of both these poles. And certainly one of the big deals of integrated, distributed AR would be the possibilities it opens up both for new forms of networked social relationships and for new ways to draw on network effects.

Jeremy Hight: And cross-pollinations within; that is where my mind goes.

Tish Shute: The other night I met Assaf Biderman, MIT, from the Trash Track team. Trash Track doesn’t use AR, but I could see that there are possibilities there.

What do you think?

Jeremy Hight: Yes, absolutely. There can be sorts of skins on locations that end-user selection can yield, like channels of place, ranging from the pragmatic core to art and play and places between. This recalibrates the semiotics of the map: more than augmentation seen as a kind of piggyback on the map, the map becomes interface and a defanged platform, if you will. Interestingly, my more poetic and philosophical writing led me here too.

Tish Shute: I know they are at very different poles of the system, but I do wonder how AR can bring some of the social agency and interaction that Natalie Jeremijenko works on into a productive interaction with the kinds of innovations in machine learning that Dolores Labs and others are pioneering?

Jeremy Hight: To me, Natalie’s genius is in practical, functional tech that also opens deeper questions and even new openings of what is needed. There are amazing layers in her work that way: succinct yet deep, very deep.

Tish Shute: Yes, I am just writing a post about her work. I find it deeply moving the way she has delved into the possibilities of using technology to open us up to our world. One of the reasons I find distributed AR so interesting is that it will make it possible for all kinds of people to create and use augmentation in their lives and communities.

So, to return to the question: how could a distributed AR framework contribute to a project like Trash Track?

Jeremy Hight: What about using it for community, dissent, and awareness raising, then? Like Natalie’s work, but building a communal work of multiple points, like the old metaphor of the elephant and the blind men. One of my points in immersive sight was how one could take augmentation as multiple works, sort of turning the faces of a thing or place, and how this would make a larger work, even in such a flow that people moving in a space could also build it.

What of AR traces left as people move, calibrated to user traffic and to trash as estimated in an urban space? It goes back to Chris Burden in the ’70s making you know that as you turn the turnstile you are drilling into the foundation and may be the one who collapses the building.

So their movements leave trash. Natalie is all about raising awareness of cause and effect, and of data, space, and ecology. Love that. So maybe...

...a feedback loop: artifacts and end-user responsibility can leave traces... trash...

...cybernetics vs. ecology and human waste.

Tish Shute: could you elaborate?

Jeremy Hight: Brain fart... the point is that the mass of trash people leave accumulates a piece at a time. In a sense it is like the space shuttle mission when, arguably, the first true cybernaut occurred: one cord to air for the astronaut, one for the computer on their back to fix the broken bay arm. If there is a way to build on that in relation to the topic, this can go further, so that machines do not waste as much, as AR is a means to raise awareness cybernetically... hmm... sensors, etc., wearables too. It could be eco awareness with data and machine and human.

What about a cloud computing system with a slight AI, in the sense of an intuitive word cloud and interest scans, so that as one moves through, say, New York, one can be offered new AI data and services along the way? It could also serve eco interests: concerns about urban farming, eco waste, air pollution, etc., perhaps with (the Jeremijenko element here) sensors placed in locations that also give data readings in public areas, with no input but the hard data itself. Hmm, could be interesting.

It can also give info on the carbon footprints of chain businesses (probably estimated, unless the data is somehow public record) and data on which are more eco-friendly, as well as color-coded and icon-coded iconography pointing to the best places to go to support greening and eco-friendly business. And the companies could promote themselves on this service to attract eco-aware customers, who would see them as kindred spirits helping the larger effort.

A kind of eco mapping, and AR on a mobile app.

What about sensors that read air pollution levels and levels of solar radiation (to aid with skin protection under shifting light values in a city space, i.e., “put on some skin cream now”), and light sensors that detect density and overdensity in public spaces? That uses the old trope in art of reading crowds in a space, but instead could indicate overcrowding and failing infrastructure in public spaces (congestion that leads to greater pollution levels, as well as revealing flaws in city planning over time), and perhaps a tie-in to wearables: worn sensors on smart clothes. This could form a node network of people in the crowds, also sending data while moving in a space.

Here is a kooky thought: what of taking the computing power and data of people moving in a space, and not only getting eco data and making levels of data available to them, but possibly making a roving supercomputer? A hive of people open to this, crunching deeper analysis of the space and scanning properties from sensors, and even a game-theory-esque algorithm of metadata: if, say, 40 people out of 50 hit on a certain spike or reading, and even their input. I worked on game theory for paleontology in this manner for a time as a teen, as a private project. The readings can lead to a sort of meta read by what hits most consistently, as well as in their input: text of what they experienced, observed, postulated, even analyzed. This could be really interesting, even if just the last part, from collected data and not from any complex branching of servers.

At 19 or so, I thought the flaw in paleontology was in how so many larger theories kept shifting exhibitions and larger senses of things, like whether there were prehistoric birds that had been mistaken for amphibians, and then back again. So why not make a computer program, feed all the published papers into it, and see what hits were counted in terms of an emerging meta-theory, a landscape of key points being agreed upon? This data would be, in a sense, both algorithmic and a sort of unspoken dialogue. It came from a lot of study of game theory one summer.
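The “meta read” described here, keeping whatever most participants (or papers) consistently hit on, can be sketched as a simple consensus count. This is a hedged illustration, not the method Jeremy used: it counts each participant’s report once per reading and keeps readings reported by at least a threshold share of participants, with 40 of 50 (0.8) borrowed from his example. The `meta_read` name and the reading labels are hypothetical, and a plain frequency threshold stands in for the game-theoretic analysis he mentions.

```python
from collections import Counter
from typing import Dict, Iterable


def meta_read(reports: Iterable[Iterable[str]], threshold: float = 0.8) -> Dict[str, float]:
    """Return each reading flagged by at least `threshold` of participants.

    `reports` holds one collection of flagged readings per participant
    (or per paper); duplicates within a single report are counted once.
    """
    reports = [set(r) for r in reports]
    if not reports:
        return {}
    n = len(reports)
    counts = Counter(item for report in reports for item in report)
    return {item: c / n for item, c in counts.most_common() if c / n >= threshold}


# 50 hypothetical participants: 40 report a pollution spike, 10 report noise.
reports = [{"pm_spike"}] * 40 + [{"noise"}] * 10
print(meta_read(reports))  # {'pm_spike': 0.8}
```

The same function would apply to the paleontology idea if each “report” were the set of key claims extracted from one published paper.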

Hope this makes some sense. I forgot to mention that I originally planned to be a research meteorologist; my plan, from middle school or so, was to get a PhD and develop new software to have a global map and then run models of hypothetical storms across it in real-time animations of cloud forms, radar, wind analysis and wind fields, barometric pressure spaghetti charts, etc., and also to do 3D cutaway models of storm architectures. So I have been into visualizations of complex data and mapping for a long time!

Tish Shute: Wow, let me think about this one!

October 13th, 2009 at 10:11 pm

Great, Tish: you’ve definitely dived in deep following our conversations at FooCamp.

There is definitely a technological evolution that is allowing Locative Media hacks from the past decade to be built now with “off the shelf” components, and Wave is an excellent medium in which to carry the information between components. People have been pushing for a while on the idea that a protocol like XMPP is for “more than chat”, but Google did a superb job building just that demonstration, which should enable another leap forward in how we interact with people, interjected with data.

Geospatial and temporal context both make necessary filters for sifting through the myriad of information to get to the contextually relevant, and interesting, bits. We’ve been hacking maps for millennia: from conceptual story mapping, to colloquial mapping in European development, to the cartographic renaissance created by the global voyages and the rediscovery of Ptolemy’s maps.

It’s definitely a ripe time to figure out how to enable these layers, datasets, and open interchanges and shared augmented, or informed, realities. See you in the wave!

October 13th, 2009 at 10:36 pm

Thank you, Andrew! The brilliant geo community I met at Where 2.0, WhereCamp, and FOO Camp has been one of my greatest inspirations. Thank you for the patience and generosity you, Aaron Straup Cope, Anselm Hook, Rich Gibson, Paul Ramsey, Sophia Parafina, Schuyler Erle, Tom Carden, and many others showed in sharing with me the awesome work you have all been doing for quite a while now. I am very excited because I do think, as you say, “it’s definitely a ripe time to figure out how to enable these layers, datasets, and open interchanges and shared augmented, or informed, realities.” And yes, see you in the wave!

December 2nd, 2010 at 1:59 pm

Augmented Reality has been hailed as the next big thing in mobile and home computing. It’s quite amazing how many companies and individual developers are now beginning to design applications to take advantage of these new ideas.